In the rapidly evolving landscape of artificial intelligence, few advancements have captured the imagination of creators, technologists, and the public alike as profoundly as generative AI. This field, which empowers machines to synthesize visual content ranging from photorealistic images to dynamic videos, is redefining creativity, storytelling, and problem-solving across industries. Once confined to the realm of science fiction, tools like DALL·E, Midjourney, and OpenAI’s Sora now demonstrate that machines can not only replicate human creativity but also augment it in unprecedented ways.

Suggestion: Please read this blog in multiple sittings, as it has a lot to cover :-)

The rise of generative AI in image and video synthesis marks a paradigm shift in how we produce and interact with visual media. Whether crafting hyperrealistic digital art, restoring historical footage, generating virtual environments for gaming, or enabling personalized marketing content, these technologies are dissolving the boundaries between the real and the synthetic. Yet, with such power comes profound questions: How do these systems work? What ethical challenges do they pose? And how might they shape industries like entertainment, healthcare, and education in the years ahead?

This exploration begins by unpacking the foundational technologies behind generative AI—Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), diffusion models, and transformers—each acting as a building block for synthesizing visual content.

Foundational Concepts

Core Technologies

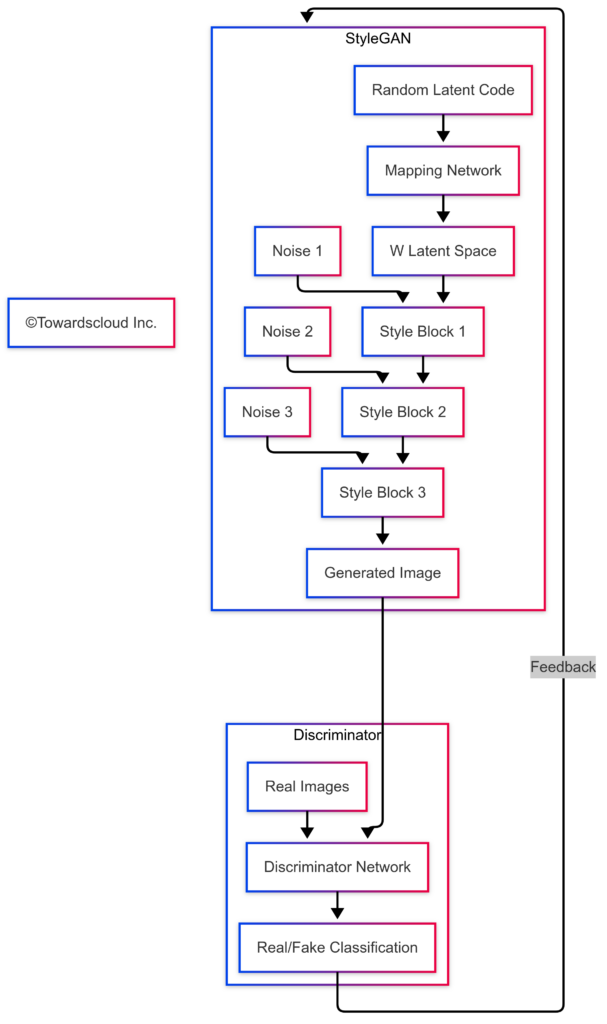

Generative Adversarial Networks (GANs): Systems with two neural networks—a generator creating images and a discriminator evaluating them—competing to improve output quality through iterative training.
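To make the generator/discriminator competition concrete, here is a minimal sketch of the two loss terms, assuming the discriminator outputs a probability that an image is real. The function names are illustrative, and the generator loss shown is the common non-saturating variant rather than any specific library's implementation.

```python
import math


def bce(prediction: float, target: float, eps: float = 1e-12) -> float:
    """Binary cross-entropy for a single probability."""
    p = min(max(prediction, eps), 1.0 - eps)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def discriminator_loss(d_real: float, d_fake: float) -> float:
    # The discriminator wants D(real) close to 1 and D(fake) close to 0.
    return bce(d_real, 1.0) + bce(d_fake, 0.0)


def generator_loss(d_fake: float) -> float:
    # The generator wants the discriminator to score its fakes as real.
    return bce(d_fake, 1.0)


# Early in training the discriminator spots fakes easily.
early = (discriminator_loss(d_real=0.9, d_fake=0.1), generator_loss(d_fake=0.1))
# Near equilibrium it can only guess, so it outputs 0.5 for everything.
balanced = (discriminator_loss(d_real=0.5, d_fake=0.5), generator_loss(d_fake=0.5))

print("early    (D loss, G loss):", early)
print("balanced (D loss, G loss):", balanced)
```

As the generator improves, its loss falls while the discriminator's loss rises toward the "pure guessing" value, which is the iterative tug-of-war described above.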

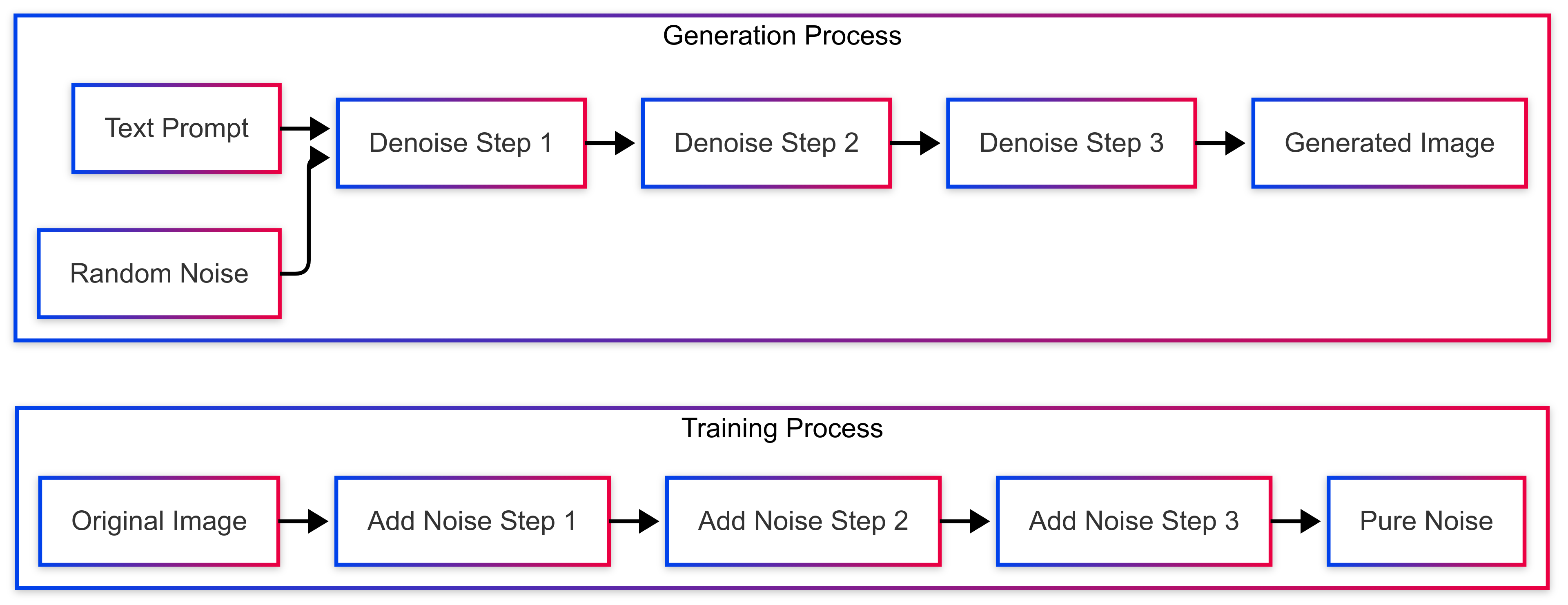

Diffusion Models: Algorithms that gradually add noise to images and then learn to reverse this process, creating new content by progressively removing noise from random patterns.
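The "gradually add noise" half of that description can be sketched in a few lines. The example below assumes a linear noise schedule and the usual closed-form sample x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * noise; the schedule values and function names are illustrative assumptions. The learned reverse (denoising) step requires a trained network and is not shown.

```python
import math
import random


def linear_beta_schedule(num_steps: int, beta_start: float = 1e-4,
                         beta_end: float = 0.02) -> list:
    """Noise variance added at each step (assumed linear schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas: list) -> list:
    """alpha_bar_t: fraction of the original signal variance left at step t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0: list, t: int, alpha_bars: list, rng: random.Random):
    """Jump directly to step t of the forward (noising) process."""
    signal = math.sqrt(alpha_bars[t])
    sigma = math.sqrt(1.0 - alpha_bars[t])
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    x_t = [signal * x + sigma * e for x, e in zip(x0, noise)]
    return x_t, noise


rng = random.Random(0)
alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
pixels = [0.2, -0.5, 0.9, 0.0]  # a tiny stand-in for an image

slightly_noisy, _ = add_noise(pixels, 10, alpha_bars, rng)
almost_pure_noise, noise_used = add_noise(pixels, 999, alpha_bars, rng)
print("signal kept at t=10: ", alpha_bars[10])
print("signal kept at t=999:", alpha_bars[999])
```

During training, a network is shown `x_t` and asked to predict the noise that was added; generation then starts from random noise and applies that prediction repeatedly to remove it.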

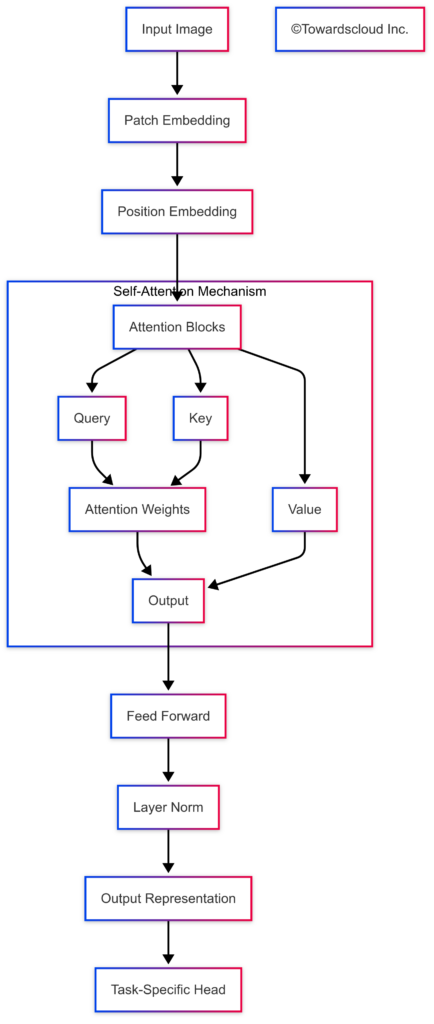

Transformers: Originally designed for language tasks, transformers are now adapted for visual generation by treating images as sequences of patches and applying self-attention mechanisms.
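"Treating images as sequences of patches" is easiest to see with a toy example. The sketch below splits a small grayscale image (a list of rows) into flattened, non-overlapping patches; the function name and the 4x4 image are illustrative assumptions, and a real model would additionally project each patch to an embedding and add position information before self-attention.

```python
def image_to_patches(image: list, patch_size: int) -> list:
    """Split a 2D image into flattened, non-overlapping square patches."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [
                image[row][col]
                for row in range(top, top + patch_size)
                for col in range(left, left + patch_size)
            ]
            patches.append(patch)
    return patches


# A 4x4 "image" whose pixel values are simply 0..15.
image = [[row * 4 + col for col in range(4)] for row in range(4)]
tokens = image_to_patches(image, patch_size=2)
print(tokens)  # four patches, each a sequence of four pixel values
```

The resulting list plays the same role as a sentence of word tokens in a language model.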

Key Concepts

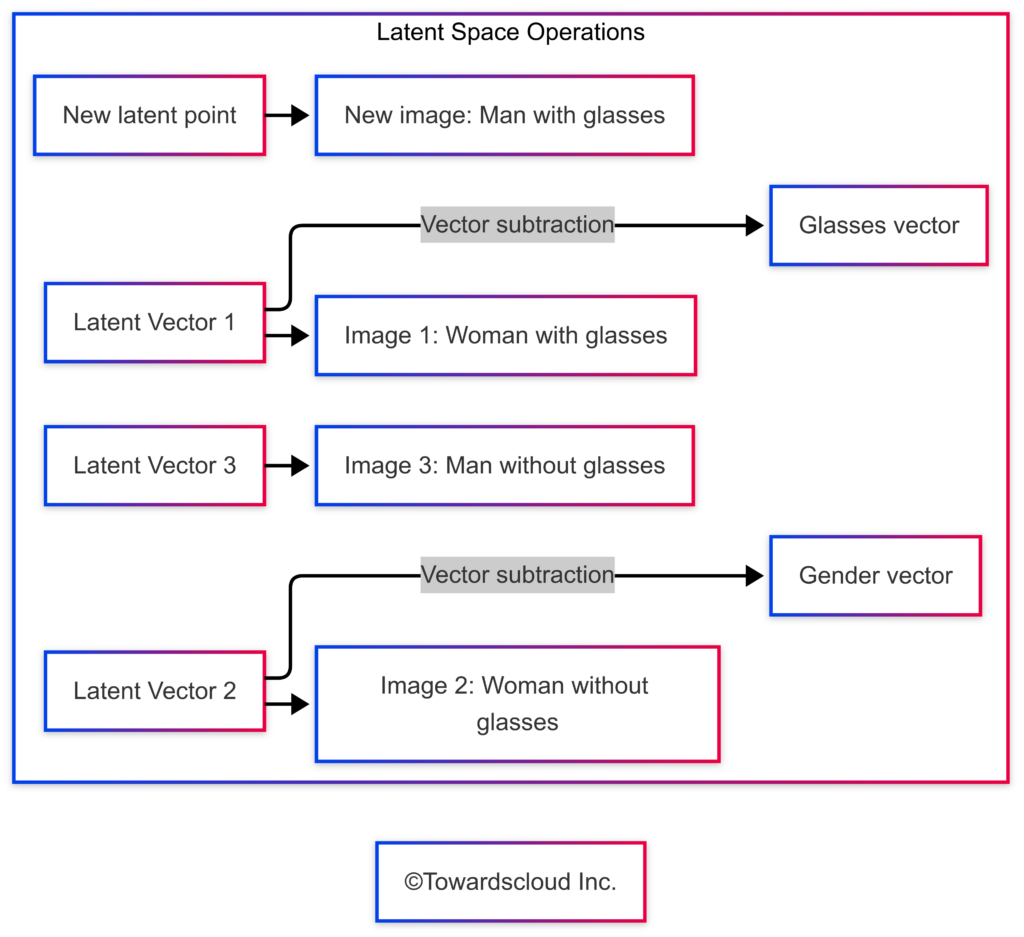

Latent Space: An abstract, compressed representation of visual data where similar concepts are positioned closely together, allowing for meaningful manipulation and interpolation.
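Interpolation in latent space can be sketched as a straight-line walk between two latent vectors. The vectors below are made-up three-dimensional stand-ins (real latents have hundreds of dimensions), and a decoder, not shown, would turn each intermediate point into an image. Many systems prefer spherical interpolation for Gaussian latents; linear interpolation is used here only because it is the simplest to read.

```python
def lerp(z_start: list, z_end: list, t: float) -> list:
    """Linear interpolation between two latent vectors, t in [0, 1]."""
    return [(1.0 - t) * a + t * b for a, b in zip(z_start, z_end)]


def interpolation_path(z_start: list, z_end: list, steps: int) -> list:
    """Evenly spaced latent points from z_start to z_end (inclusive)."""
    return [lerp(z_start, z_end, i / (steps - 1)) for i in range(steps)]


z_cat = [0.0, 1.0, -2.0]  # hypothetical latent code for one image
z_dog = [1.0, 3.0, 2.0]   # hypothetical latent code for another
path = interpolation_path(z_cat, z_dog, steps=5)
for point in path:
    print(point)
```

Decoding each point on the path would produce a smooth visual morph between the two source images.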

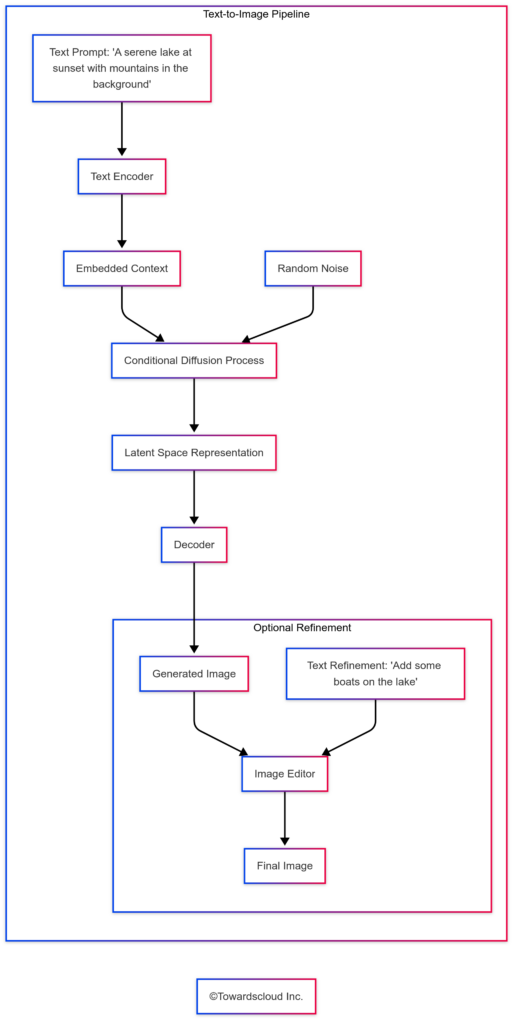

Text-to-Image Generation: Converting natural language descriptions into corresponding visual content using models trained on text-image pairs.

Image-to-Image Translation: Transforming images from one domain to another while preserving structural elements (e.g., turning sketches into photorealistic images).

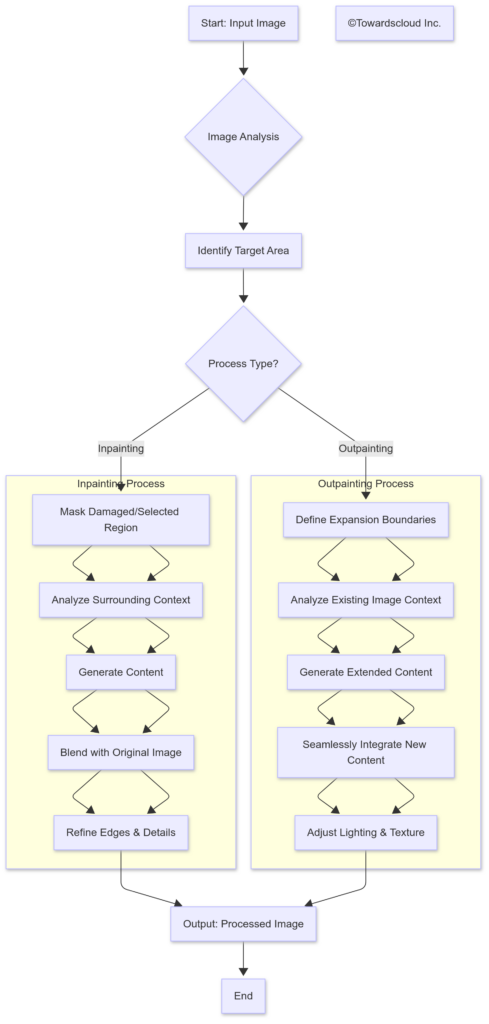

Inpainting and Outpainting: Filling in missing portions of images (inpainting) or extending images beyond their boundaries (outpainting).
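A common final step in inpainting is to composite the generated pixels back into the original using a mask, so that everything outside the selected region stays untouched. The sketch below shows only that blending step on a tiny grid; the "generated" values are placeholders standing in for a model's output.

```python
def composite(original: list, generated: list, mask: list) -> list:
    """Take generated pixels where mask is 1, keep original pixels where it is 0."""
    return [
        [gen if m else orig for orig, gen, m in zip(orig_row, gen_row, mask_row)]
        for orig_row, gen_row, mask_row in zip(original, generated, mask)
    ]


original = [
    [10, 10, 10],
    [10, 0, 10],   # the 0 marks a damaged pixel
    [10, 10, 10],
]
generated = [
    [99, 99, 99],
    [99, 11, 99],  # placeholder model output for the whole frame
    [99, 99, 99],
]
mask = [
    [0, 0, 0],
    [0, 1, 0],     # only the damaged pixel is replaced
    [0, 0, 0],
]
restored = composite(original, generated, mask)
print(restored)
```

Outpainting works the same way with a larger canvas: the mask covers the new border area and the original image fills the unmasked center.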

Style Transfer: Applying the artistic style of one image to the content of another while maintaining the original content’s structure.

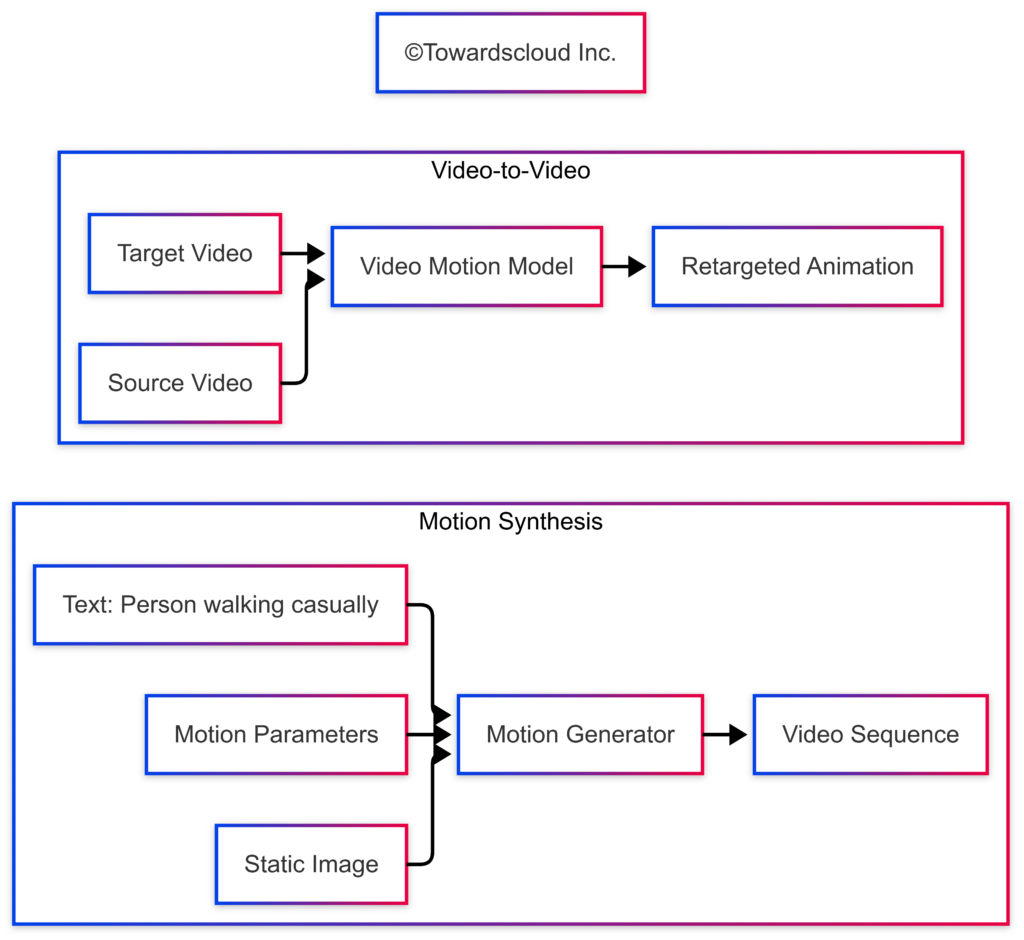

Motion Synthesis: Creating realistic movement in videos either from scratch or by animating static images.

Prompt Engineering: Crafting effective text descriptions that guide AI systems to produce desired visual outputs.

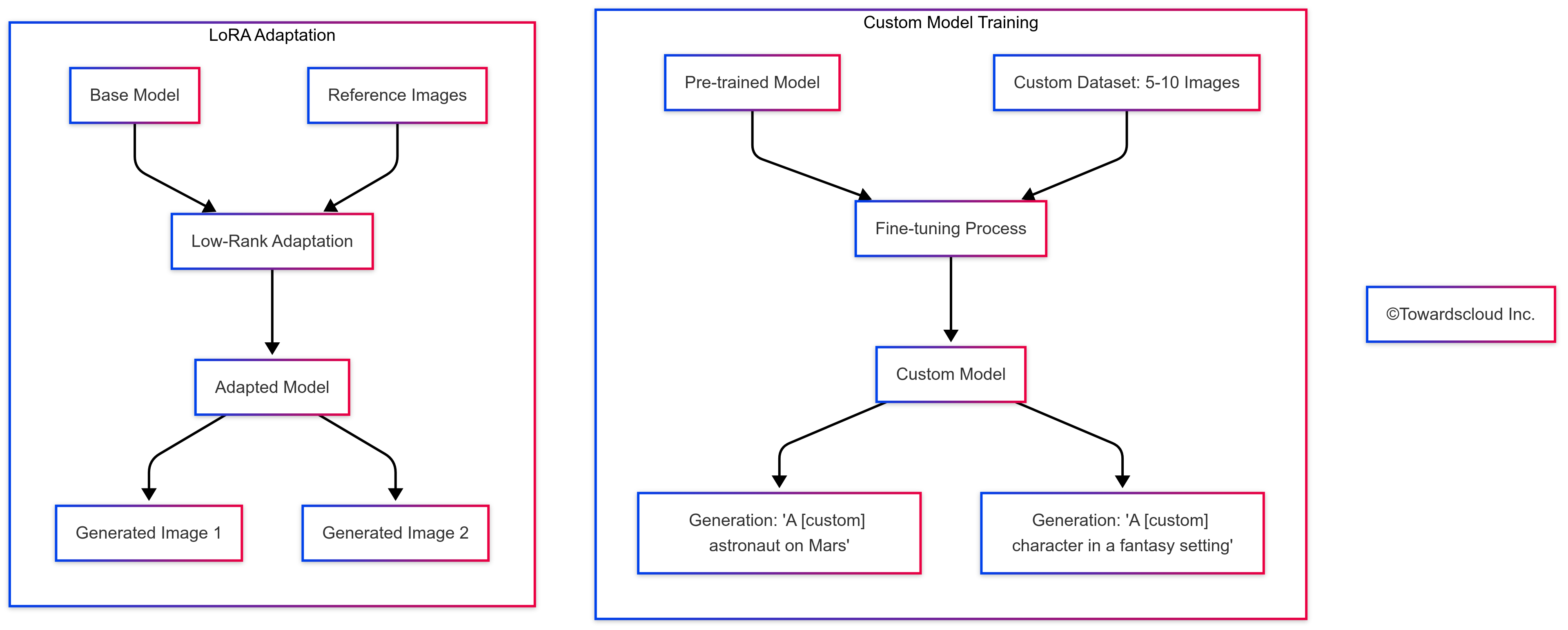

Fine-tuning: Adapting pre-trained models to specific visual styles or domains with smaller datasets.

Generative Adversarial Networks (GANs)

Real-World Example: StyleGAN

StyleGAN, developed by NVIDIA, revolutionized realistic face generation. Its architecture separates high-level attributes (gender, age) from stochastic details (freckles, hair texture).

Applications:

- ThisPersonDoesNotExist.com: Generates photorealistic faces of non-existent people

- Fashion design: Companies like Zalando use GANs to create virtual clothing models

- Game asset creation: Automating character and texture generation

Diffusion Models

Real-World Example: Stable Diffusion & DALL-E

Diffusion models have become the dominant approach for high-quality image generation, with Stable Diffusion being an open-source implementation that gained massive popularity.

Applications:

- Midjourney: Creates artistic renderings from text descriptions

- Product visualization: Companies generate product mockups before manufacturing

- Adobe Firefly: Integrates diffusion models into creative software for professional workflows

- Medical imaging: Generates synthetic medical images for training diagnostic systems

Transformers for Vision

Real-World Example: Sora by OpenAI

Sora pairs a diffusion model with a transformer backbone that operates on spacetime patches of video. It generates high-definition videos from text prompts and handles complex scenes, camera movements, and multiple characters.

Applications:

- Video generation: Creating complete short films from text descriptions

- Simulation: Generating synthetic training data for autonomous vehicles

- VFX automation: Generating background scenes or crowd simulations

- Educational content: Creating visual explanations from textual concepts

Latent Space Manipulation

Real-World Example: GauGAN by NVIDIA

NVIDIA’s GauGAN allows users to draw simple segmentation maps that are then converted to photorealistic landscapes, operating in a structured latent space.

Applications:

- Face editing: Modifying specific attributes like age, expression, or hairstyle

- Interior design: Changing room styles while maintaining layout

- Content creation tools: Allowing non-artists to generate professional-quality visuals

- Virtual try-on: Changing clothing items while preserving the person’s appearance

Text-to-Image Generation

Real-World Example: Midjourney

Midjourney has become renowned for its artistic renditions of text prompts, allowing users to specify styles, compositions, and content with natural language.

Applications:

- Marketing materials: Generating custom imagery for campaigns

- Book illustrations: Creating visual companions to written content

- Conceptual design: Rapid visualization of product ideas

- Social media content: Creating engaging visuals from descriptive prompts

Inpainting and Outpainting

Real-World Example: Photoshop Generative Fill

Adobe’s Photoshop now features “Generative Fill” powered by Firefly AI, which allows users to select areas of an image and replace them with AI-generated content based on text prompts.

Applications:

- Photo restoration: Filling in damaged portions of historical photos

- Object removal: Erasing unwanted elements from photos

- Creative expansion: Extending existing artwork beyond original boundaries

- Film restoration: Repairing damaged frames in old films

Motion Synthesis

Real-World Example: RunwayML’s Gen-2

RunwayML’s Gen-2 can animate still images or generate videos from text prompts, producing natural motion and maintaining visual consistency.

Applications:

- Character animation: Bringing illustrations to life with realistic movements

- Visual effects: Generating dynamic elements like fire, water, or crowds

- Digital avatars: Creating animated versions of static portraits

- Architectural visualization: Adding movement to static building renders

Fine-tuning and Personalization

Real-World Example: DreamBooth and LoRA

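DreamBooth allows users to personalize diffusion models with just 3-5 images of a subject, enabling the generation of that subject in new contexts and styles. LoRA (Low-Rank Adaptation) makes this kind of fine-tuning cheaper by training small low-rank update matrices instead of all of the model's weights.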
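The idea behind LoRA can be sketched with plain matrices: the frozen weight W is left alone and a low-rank product B·A, scaled by alpha / rank, is added on top. The tiny matrices and the 768-dimensional layer size below are illustrative assumptions, not values from any particular model.

```python
def matmul(a: list, b: list) -> list:
    """Multiply two matrices given as lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def lora_effective_weight(w: list, lora_b: list, lora_a: list, alpha: float) -> list:
    """Return W + (alpha / rank) * B @ A without modifying W."""
    rank = len(lora_a)
    scale = alpha / rank
    delta = matmul(lora_b, lora_a)
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


def trainable_parameters(d_out: int, d_in: int, rank: int) -> dict:
    """Compare full fine-tuning with LoRA for a single weight matrix."""
    return {"full": d_out * d_in, "lora": rank * (d_out + d_in)}


w = [[1.0, 0.0], [0.0, 1.0]]   # frozen pre-trained weight (2x2)
lora_b = [[1.0], [2.0]]        # trainable, shape (2, rank=1)
lora_a = [[0.5, 0.0]]          # trainable, shape (rank=1, 2)
w_eff = lora_effective_weight(w, lora_b, lora_a, alpha=1.0)
counts = trainable_parameters(d_out=768, d_in=768, rank=4)
print(w_eff)
print(counts)
```

Because only B and A are trained and stored, a personalized style or subject can ship as a small add-on file rather than a full copy of the model.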

Applications:

- Brand personalization: Training models to generate content in specific brand styles

- Personal avatars: Creating customized digital representations of individuals

- Product visualization: Generating variations of products in different contexts

- Character design: Maintaining consistent character appearance across multiple scenes

This comprehensive overview demonstrates how generative AI for image and video synthesis works at a foundational level, with real-world applications that are transforming creative industries, entertainment, design, and many other fields. Each of these technologies continues to evolve rapidly, with new capabilities emerging regularly.

Cloud Implementations

Now, let’s cover cloud implementations and compare them across cloud providers.

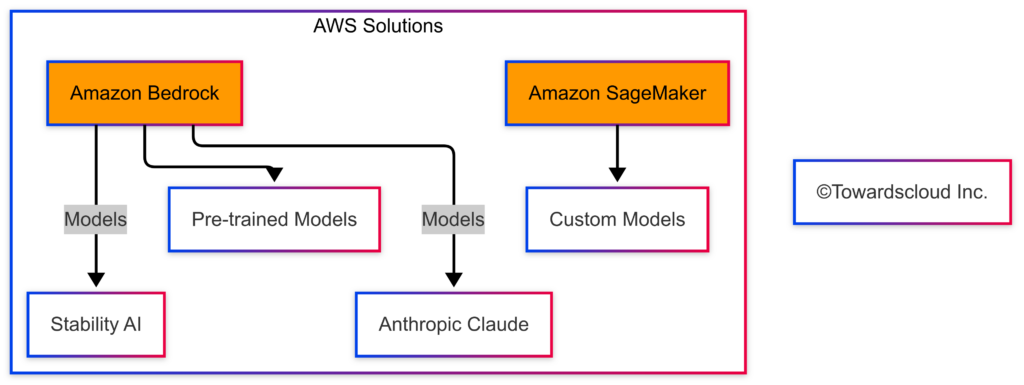

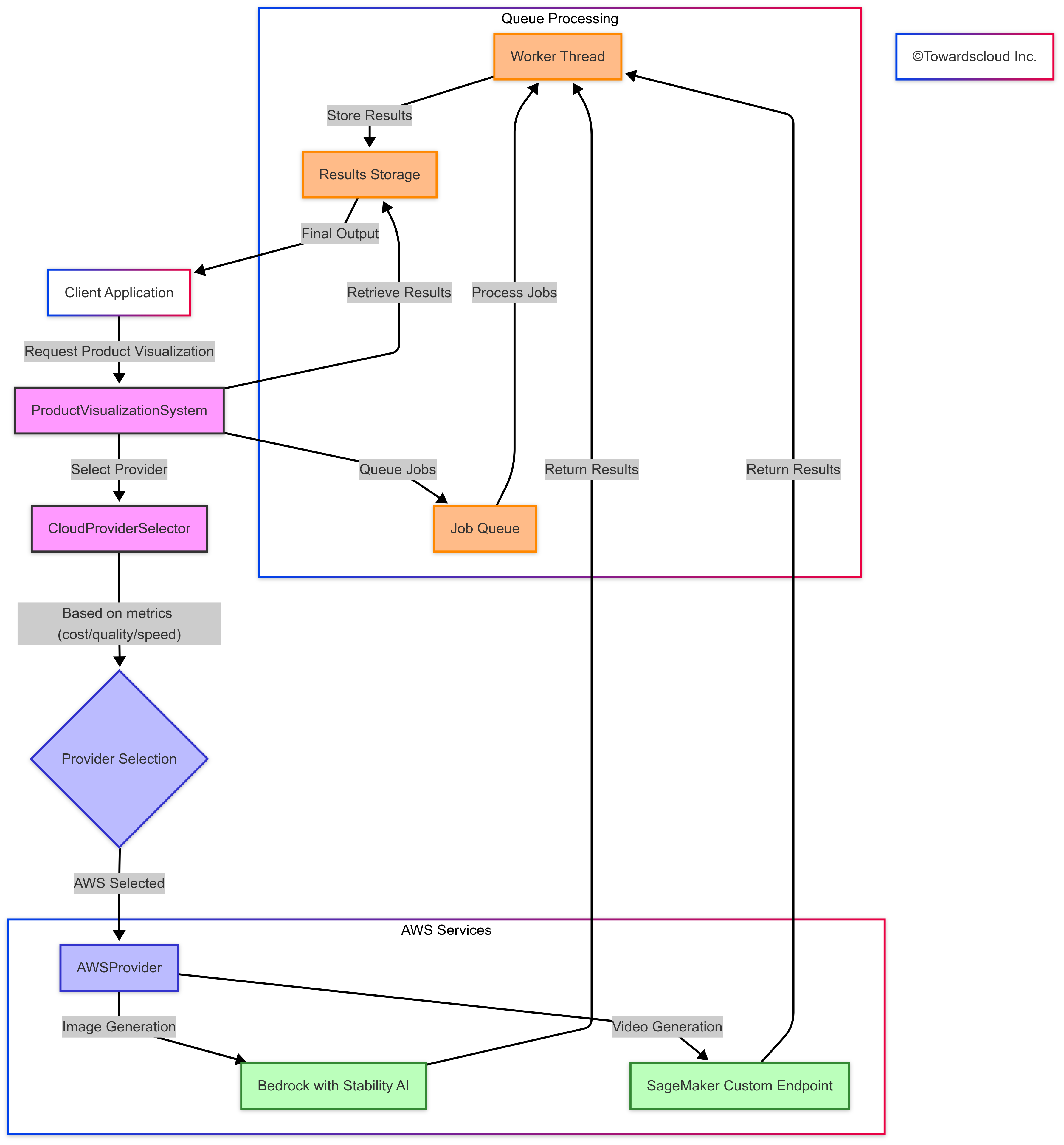

AWS Implementation: Amazon Bedrock and SageMaker

AWS offers multiple approaches for deploying generative AI for image and video synthesis.

Amazon Bedrock

Amazon Bedrock provides a fully managed service to access foundation models through APIs, including Stability AI’s models for image generation.

AWS Bedrock Image Generation with Stability AI
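A minimal sketch of what such a call could look like with `boto3`, assuming the SDXL model ID `stability.stable-diffusion-xl-v1` is enabled in your account and region. The request and response field names (`text_prompts`, `cfg_scale`, `artifacts`) are assumed from the SDXL schema and differ for other Stability AI models, so check the model's documentation before relying on them.

```python
import base64
import json

# Assumption: replace with a Stability AI model ID available to your account.
MODEL_ID = "stability.stable-diffusion-xl-v1"


def build_request_body(prompt: str, *, cfg_scale: float = 7.0,
                       steps: int = 30, seed: int = 0) -> str:
    """Serialize the JSON payload (field names assumed from the SDXL schema)."""
    return json.dumps({
        "text_prompts": [{"text": prompt}],
        "cfg_scale": cfg_scale,
        "steps": steps,
        "seed": seed,
    })


def generate_image(prompt: str, region: str = "us-east-1") -> bytes:
    """Call Bedrock and return the decoded image bytes (needs AWS credentials)."""
    import boto3  # imported lazily so the payload helper works without the SDK

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(
        modelId=MODEL_ID,
        body=build_request_body(prompt),
        contentType="application/json",
        accept="application/json",
    )
    payload = json.loads(response["body"].read())
    return base64.b64decode(payload["artifacts"][0]["base64"])


# Building the payload needs no network access or credentials.
body = build_request_body("studio photo of a red sneaker on a white background")
print(body)
```

Calling `generate_image(...)` and writing the returned bytes to a `.png` file would complete the round trip.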

Amazon SageMaker with Custom Models

For more control and customization, you can deploy your own image synthesis models on SageMaker:

AWS SageMaker Custom Deployment for Stable Diffusion

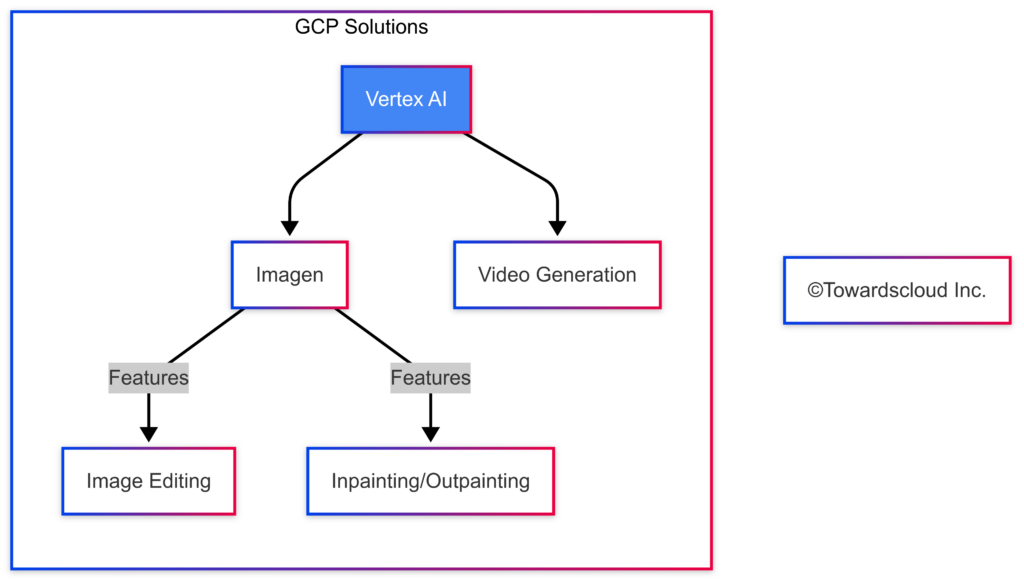

GCP Implementation: Vertex AI with Imagen

Google Cloud Platform offers Imagen on Vertex AI for image generation, providing a powerful and easy-to-use service for developers.

GCP Vertex AI Imagen Implementation

For Video Synthesis on GCP:

GCP Video Synthesis Implementation

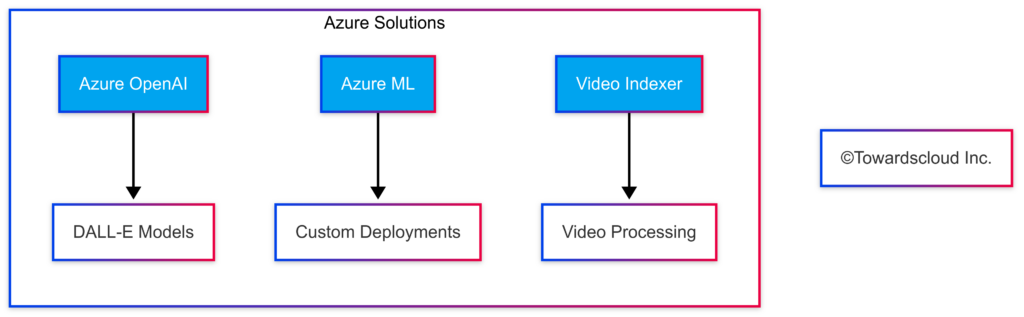

Azure Implementation: Azure OpenAI Service with DALL-E

Microsoft Azure provides the Azure OpenAI Service, which includes DALL-E models for image generation:

Azure OpenAI Service with DALL-E Implementation

Azure Video Indexer and Custom Video Synthesis

For video synthesis and processing, Azure offers Video Indexer along with custom solutions on Azure Machine Learning:

Azure Custom Video Synthesis

Independent Implementation: Using Open Source Models

If you prefer vendor-agnostic solutions, you can deploy open-source models like Stable Diffusion on your own infrastructure:

Independent Stable Diffusion Implementation
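A minimal sketch of a self-hosted setup using the Hugging Face `diffusers` library, assuming `torch` and `diffusers` are installed and that you have access to a Stable Diffusion checkpoint. The default `model_id` below is an assumption; substitute whichever checkpoint you are licensed to use.

```python
from dataclasses import dataclass


@dataclass
class GenerationConfig:
    prompt: str
    negative_prompt: str = ""
    num_inference_steps: int = 30  # fewer steps is faster, more is usually cleaner
    guidance_scale: float = 7.5    # how strongly the image follows the prompt
    model_id: str = "stabilityai/stable-diffusion-2-1"  # assumption: swap as needed


def generate(config: GenerationConfig, output_path: str = "output.png") -> str:
    """Run one text-to-image generation locally and save the result."""
    # Heavy dependencies are imported lazily so the config can be used without them.
    import torch
    from diffusers import StableDiffusionPipeline

    device = "cuda" if torch.cuda.is_available() else "cpu"
    dtype = torch.float16 if device == "cuda" else torch.float32
    pipe = StableDiffusionPipeline.from_pretrained(config.model_id, torch_dtype=dtype)
    pipe = pipe.to(device)
    image = pipe(
        config.prompt,
        negative_prompt=config.negative_prompt or None,
        num_inference_steps=config.num_inference_steps,
        guidance_scale=config.guidance_scale,
    ).images[0]
    image.save(output_path)
    return output_path


config = GenerationConfig(
    prompt="a ceramic mug on a wooden table, soft morning light",
    negative_prompt="blurry, low quality",
)
print(config)
```

Calling `generate(config)` downloads the checkpoint on first use, so expect the first run to take considerably longer than later ones.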

For video synthesis with open-source models:

Independent Video Synthesis Implementation

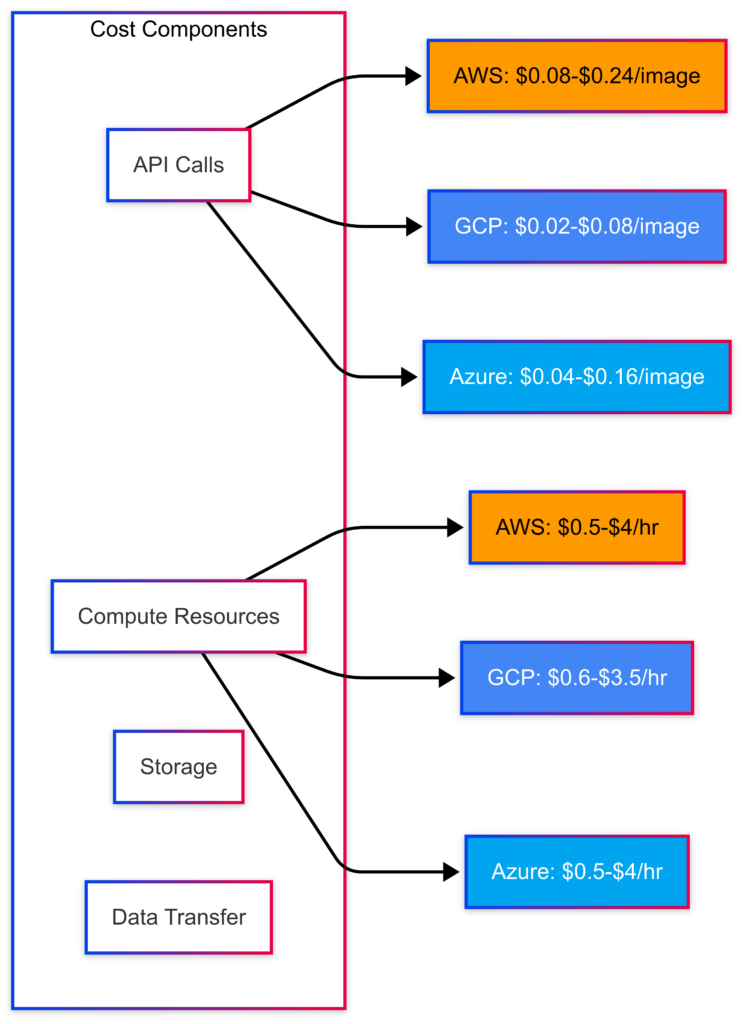

Cloud Implementation Comparison

Let’s compare the different cloud platforms for generative AI image and video synthesis:

Feature Comparison

| Feature | AWS | GCP | Azure |
|---|---|---|---|
| Pre-trained image models | ✅ Bedrock with Stability AI | ✅ Imagen on Vertex AI | ✅ DALL-E on Azure OpenAI |
| Custom model deployment | ✅ SageMaker | ✅ Vertex AI | ✅ Azure ML |
| Video synthesis | ⚠️ Limited native support | ✅ Built-in capabilities | ✅ Via custom models |
| Image editing | ✅ Via Stability AI | ✅ Native support | ✅ Via DALL-E |
| Fine-tuning support | ✅ With SageMaker | ✅ With Vertex AI | ✅ With Azure ML |
| API simplicity | ⭐⭐⭐ | ⭐⭐⭐⭐ | ⭐⭐⭐ |
| Integration with other services | ⭐⭐⭐⭐⭐ | ⭐⭐⭐⭐ | ⭐⭐⭐⭐⭐ |

AWS Cost Breakdown:

- Amazon Bedrock: $0.08-$0.24 per image with Stability AI models

- Amazon SageMaker: $0.5-$4.0 per hour for GPU instances (ml.g4dn.xlarge to ml.g5.4xlarge)

- Video synthesis: Additional costs for custom implementations

GCP Cost Breakdown:

- Vertex AI Imagen: $0.02-$0.08 per image generation (depends on resolution)

- Vertex AI custom deployment: $0.6-$3.5 per hour for GPU instances (n1-standard-8-gpu to a2-highgpu-1g)

- Video generation: $0.10-$0.30 per second of generated video

Azure Cost Breakdown:

- Azure OpenAI DALL-E: $0.04-$0.16 per image (standard vs. HD quality)

- Azure ML: $0.5-$4.0 per hour for GPU instances (Standard_NC6s_v3 to Standard_ND40rs_v2)

- Video Indexer: Pay per minute of processed video
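The per-image ranges above translate into a monthly budget with simple arithmetic. The sketch below uses only the figures quoted in this post (which will drift as providers update pricing); the dictionary keys and function names are illustrative.

```python
# Per-image price ranges in USD, copied from the cost breakdowns above.
PER_IMAGE_USD = {
    "aws_bedrock": (0.08, 0.24),
    "gcp_imagen": (0.02, 0.08),
    "azure_dalle": (0.04, 0.16),
}


def monthly_cost_range(provider: str, images_per_month: int) -> tuple:
    """Low and high monthly estimate for a managed image-generation API."""
    low, high = PER_IMAGE_USD[provider]
    return (round(low * images_per_month, 2), round(high * images_per_month, 2))


def break_even_images_per_hour(per_image_usd: float, gpu_hourly_usd: float) -> float:
    """Images per hour above which an hourly GPU instance beats per-image pricing.

    Ignores engineering effort, idle time, and whether one GPU can actually
    sustain that throughput.
    """
    return gpu_hourly_usd / per_image_usd


estimates = {name: monthly_cost_range(name, 10_000) for name in PER_IMAGE_USD}
for name, (low, high) in estimates.items():
    print(f"{name}: ${low:,.2f} to ${high:,.2f} per month")

threshold = break_even_images_per_hour(per_image_usd=0.08, gpu_hourly_usd=0.5)
print(f"break-even: about {threshold:.2f} images per hour")
```

At 10,000 images per month the gap between the cheapest and most expensive estimate is large enough that resolution and quality settings deserve as much attention as the choice of provider.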

Best Practices for Implementation

- Use managed services for simplicity:

  - AWS Bedrock for quick image generation

  - Vertex AI Imagen for high-quality images with simpler API

  - Azure OpenAI for DALL-E integration

- Custom deployment for specialized needs:

  - Fine-tune models on SageMaker/Vertex AI/Azure ML

  - Batch processing for high-volume generation

  - Integration with existing ML pipelines

- Cost optimization:

  - Use serverless options for sporadic usage

  - Reserved instances for consistent workloads

  - Optimize image/video resolution based on needs

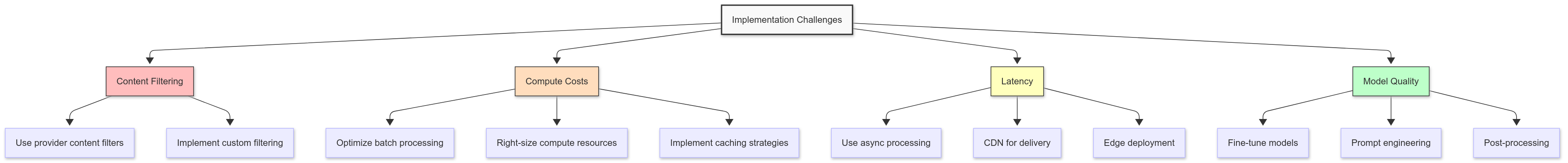

Implementation Challenges and Solutions

Real-World Application Example: Product Visualization System

To demonstrate a complete solution, let’s build a product visualization system that generates images and videos of products from different angles and environments.

Product Visualization System Architecture

Practical Use-Cases for Generative AI in Image and Video Synthesis

- E-commerce Product Visualization

  - Generate product images from different angles

  - Create 360° views and videos

  - Show products in different contexts/environments

- Content Creation for Marketing

  - Create promotional visuals at scale

  - Generate scene variations for A/B testing

  - Create product demonstrations

- Virtual Try-On and Customization

  - Show clothing items on different body types

  - Visualize product customizations (colors, materials)

  - Create virtual fitting rooms

- Architectural and Interior Design

  - Generate realistic renderings of designs

  - Show spaces with different furnishings

  - Create walkthrough videos

- Educational Content

  - Create visual aids for complex concepts

  - Generate diagrams and illustrations

  - Create educational animations

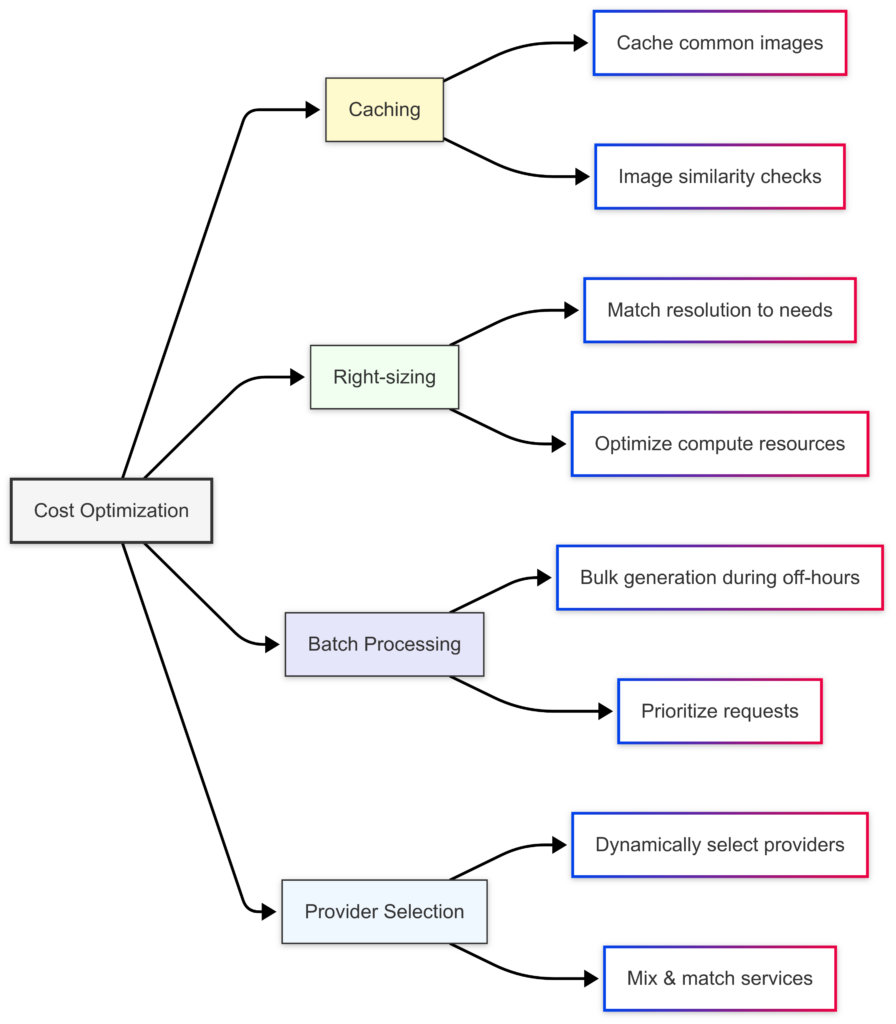

Cost Optimization Strategies

- Implement caching strategies:

  - Store and reuse generated images when possible

  - Use image similarity detection to avoid regenerating similar content

  - Implement CDN for faster delivery and reduced API calls

- Right-size your requests:

  - Use appropriate resolutions for your needs (lower for thumbnails, higher for showcases)

  - Match compute resources to workload patterns

  - Implement auto-scaling for fluctuating demand

- Optimize prompts:

  - Well-crafted prompts reduce the need for regeneration

  - Use negative prompts to avoid undesired elements

  - Document successful prompts for reuse

- Multi-cloud strategy:

  - Use GCP for cost-effective image generation

  - AWS for custom model deployments

  - Azure for high-quality outputs when needed
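The "store and reuse generated images" strategy above can be sketched as a cache keyed by a hash of the normalized prompt plus its generation parameters. This is a minimal in-memory version with a stand-in generator function; the class and function names are hypothetical, and it handles exact repeats only (similarity detection would need image or text embeddings, which are not shown).

```python
import hashlib
import json


class PromptCache:
    """In-memory cache that avoids paying twice for the same request."""

    def __init__(self, generate_fn):
        self._generate = generate_fn
        self._store = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt: str, **params) -> str:
        # Normalize case and whitespace so trivial variations share a key.
        normalized = " ".join(prompt.lower().split())
        blob = json.dumps({"prompt": normalized, "params": params}, sort_keys=True)
        return hashlib.sha256(blob.encode("utf-8")).hexdigest()

    def get(self, prompt: str, **params):
        key = self._key(prompt, **params)
        if key in self._store:
            self.hits += 1
        else:
            self.misses += 1
            self._store[key] = self._generate(prompt, **params)
        return self._store[key]


calls = []


def fake_generate(prompt: str, **params) -> str:
    """Stand-in for a paid image-generation API call."""
    calls.append(prompt)
    return f"<image for {prompt!r} with {params}>"


cache = PromptCache(fake_generate)
cache.get("A red  sneaker on white", width=512)
cache.get("a red sneaker on white", width=512)   # same after normalization: hit
cache.get("a red sneaker on white", width=1024)  # different parameters: miss
print(f"hits={cache.hits} misses={cache.misses} api_calls={len(calls)}")
```

In production the dictionary would typically be replaced by object storage or a key-value store so the cache survives restarts and can sit behind a CDN.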

Performance Considerations

- Latency management:

  - Implement asynchronous processing for large batch jobs

  - Pre-generate common images

  - Use edge deployments for latency-sensitive applications

- Scaling considerations:

  - Implement queue systems for high-volume processing

  - Use containerization for flexible deployments

  - Consider serverless for sporadic workloads

- Quality vs. speed tradeoffs:

  - Adjust inference steps based on quality requirements

  - Use progressive loading techniques for web applications

  - Implement post-processing for quality improvements
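The asynchronous-processing and queue recommendations above can be combined into a small worker pool. The sketch below uses Python's `asyncio.Queue` with a fixed number of workers; `fake_generate` is a stand-in for a real (slow) generation call, and the concurrency limit of 3 is an arbitrary assumption.

```python
import asyncio


async def fake_generate(prompt: str) -> str:
    """Stand-in for a slow image-generation request."""
    await asyncio.sleep(0.01)
    return f"image:{prompt}"


async def worker(queue: asyncio.Queue, results: list) -> None:
    """Pull jobs off the queue until cancelled."""
    while True:
        index, prompt = await queue.get()
        try:
            results[index] = await fake_generate(prompt)
        finally:
            queue.task_done()


async def run_batch(prompts: list, concurrency: int = 3) -> list:
    """Process all prompts with at most `concurrency` requests in flight."""
    queue = asyncio.Queue()
    results = [None] * len(prompts)
    for job in enumerate(prompts):
        queue.put_nowait(job)

    workers = [asyncio.create_task(worker(queue, results)) for _ in range(concurrency)]
    await queue.join()          # wait until every job has been processed
    for task in workers:
        task.cancel()           # workers loop forever, so stop them explicitly
    await asyncio.gather(*workers, return_exceptions=True)
    return results


batch = asyncio.run(run_batch([f"product shot {i}" for i in range(5)]))
print(batch)
```

Capping concurrency keeps a large batch from tripping provider rate limits, while results are still returned in the order the prompts were submitted.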

Security and Compliance Considerations

- Content filtering:

  - Implement pre and post-generation content filters

  - Use provider-supplied safety measures

  - Review generated content for sensitive applications

- Data handling:

  - Ensure prompts don’t contain PII

  - Understand provider data retention policies

  - Implement proper access controls

- Attribution and usage rights:

  - Understand licensing terms for generated content

  - Implement proper attribution where required

  - Review terms of service for commercial usage
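As one example of the data-handling advice above, prompts can be screened for obvious PII before they leave your system. The sketch below uses two deliberately simple regular expressions for email addresses and phone-like numbers; the patterns are illustrative assumptions, will miss many cases, and are no substitute for a dedicated PII-detection service.

```python
import re

# Illustrative patterns only; real PII detection needs far broader coverage.
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "phone": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def find_pii(prompt: str) -> list:
    """Return the names of the PII categories detected in the prompt."""
    return sorted(name for name, pattern in PII_PATTERNS.items() if pattern.search(prompt))


def redact(prompt: str) -> str:
    """Replace detected PII with a category placeholder."""
    for name, pattern in PII_PATTERNS.items():
        prompt = pattern.sub(f"[{name.upper()}]", prompt)
    return prompt


risky = "Portrait for jane.doe@example.com, call +1 415 555 0100"
safe = "A red sneaker on a white background, 35mm photo"

print(find_pii(risky), "->", redact(risky))
print(find_pii(safe), "->", redact(safe))
```

A check like this fits naturally in the pre-generation filter stage, alongside the provider's own safety measures.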

Conclusion

Generative AI for image and video synthesis offers powerful capabilities across all major cloud platforms. Each provider has its strengths:

- AWS excels in customization and integration with other AWS services

- GCP offers simplicity and cost-effectiveness for straightforward image generation

- Azure provides high-quality outputs with strong integration into Microsoft ecosystems

For most applications, a hybrid approach leveraging the strengths of multiple providers can offer the best balance of cost, quality, and performance. Our sample architecture demonstrates how to build a flexible system that can dynamically select the best provider for each task.
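The idea of dynamically selecting a provider per task can be sketched as a small routing function. Everything in the table below is a hypothetical placeholder: the per-image costs are midpoints of the ranges quoted earlier (the SageMaker figure is invented for illustration) and the quality scores are made-up numbers that simply mirror the strengths listed above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Provider:
    name: str
    cost_per_image: float          # USD, hypothetical
    quality: int                   # 1-5, illustrative score
    supports_custom_models: bool


# Hypothetical catalogue; replace with your own measurements.
PROVIDERS = [
    Provider("gcp-imagen", 0.05, 4, False),
    Provider("aws-bedrock", 0.16, 4, False),
    Provider("aws-sagemaker", 0.10, 3, True),
    Provider("azure-dalle", 0.10, 5, False),
]


def select_provider(min_quality: int = 1, needs_custom_model: bool = False,
                    providers: list = PROVIDERS) -> Provider:
    """Pick the cheapest provider that meets the task's requirements."""
    candidates = [
        p for p in providers
        if p.quality >= min_quality
        and (p.supports_custom_models or not needs_custom_model)
    ]
    if not candidates:
        raise LookupError("no provider satisfies the requirements")
    # Cheapest first; ties are broken in favor of higher quality.
    return min(candidates, key=lambda p: (p.cost_per_image, -p.quality))


print(select_provider().name)
print(select_provider(min_quality=5).name)
print(select_provider(needs_custom_model=True).name)
```

Keeping the routing rules in data rather than code makes it easy to update them as pricing and model quality change.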

As these technologies continue to evolve, we can expect even more powerful capabilities, improved quality, and reduced costs. By implementing the strategies outlined in this post, you’ll be well-positioned to leverage generative AI for image and video synthesis in your applications.

While this blog post grew to a substantial length, we believe it’s important to cover the topic thoroughly, from foundational concepts to practical implementation. We at Towardscloud encourage you to approach it in sections, perhaps dividing your reading into logical parts: first understanding the core concepts, then exploring the implementation details, and finally examining the practical applications. This way, you can digest the information more effectively without feeling overwhelmed by the scope of the content. Thank you and happy reading!