Introduction to Transformers

Transformers have become the backbone of modern generative AI, powering everything from chatbots to image generation systems. First introduced in the 2017 paper “Attention Is All You Need” by Vaswani et al., these neural network architectures have revolutionized how machines understand and generate content.

Call to Action: Have you noticed how AI-generated content has improved dramatically in recent years? The transformer architecture is largely responsible for this leap forward. Read on to discover how this innovation is changing our digital landscape!

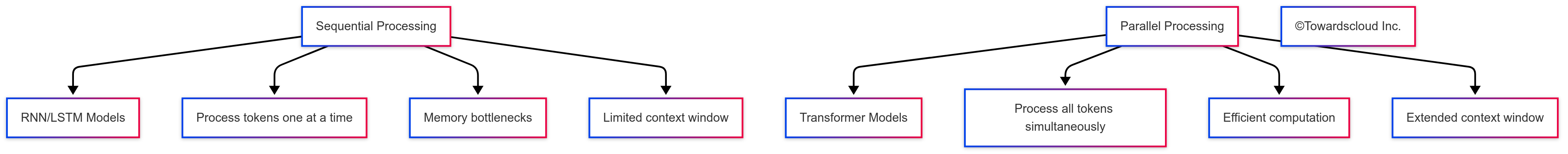

From Sequential Models to Parallel Processing

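Before transformers, recurrent neural networks (RNNs) and long short-term memory networks (LSTMs) were the standard for sequence-based tasks. However, these models had significant limitations: they process tokens one at a time, which slows training, and they struggle to retain information across long sequences.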

Key Advantages of Transformers

| Feature | Traditional Models (RNN/LSTM) | Transformer Models |
|---|---|---|
| Processing | Sequential (one token at a time) | Parallel (all tokens simultaneously) |
| Training Speed | Slower due to sequential nature | Faster due to parallelization |
| Long-range Dependencies | Struggles with distant relationships | Excels at capturing relationships regardless of distance |
| Context Window | Limited by vanishing gradients | Much larger (thousands to millions of tokens) |
| Scalability | Difficult to scale | Highly scalable to billions of parameters |

Call to Action: Think about how your favorite AI tools have improved over time. Have you noticed they’re better at understanding context and generating coherent, long-form content? Share your experiences in the comments!

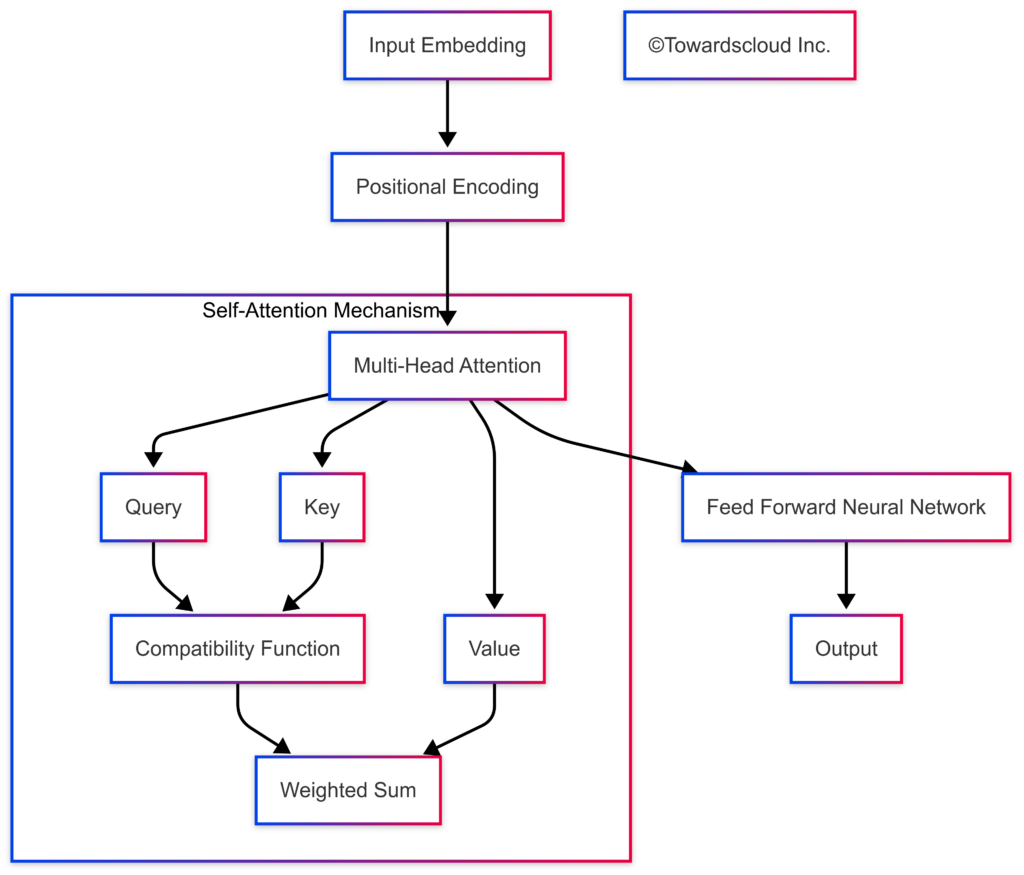

The Self-Attention Mechanism: The Heart of Transformers

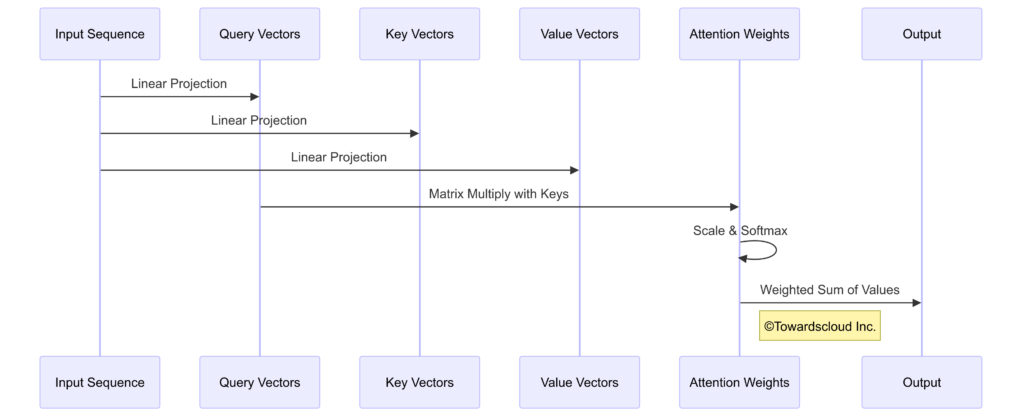

The breakthrough element of transformers is the self-attention mechanism, which allows the model to focus on different parts of the input sequence when producing each element of the output.

How Self-Attention Works in Simple Terms

Imagine you’re reading a sentence and trying to understand the meaning of each word. As you read each word, you naturally pay attention to other words in the sentence that help clarify its meaning.

For example, in the sentence “The animal didn’t cross the street because it was too wide,” what does “it” refer to? A human reader knows “it” refers to “the street,” not “the animal.”

Self-attention works similarly:

- For each word (token), it calculates how much attention to pay to every other word in the sequence

- It weighs the importance of these relationships

- It uses these weighted relationships to create a context-rich representation of each word
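The three steps above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation: it assumes a single attention head and skips the learned query, key, and value projections, so each token vector plays all three roles. The function names and the toy embeddings are made up for this example.

```python
import math

def softmax(xs):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Single-head self-attention without learned projections (a simplification)."""
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Step 1: score this token against every token in the sequence.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        # Step 2: normalize the scores into attention weights.
        weights = softmax(scores)
        # Step 3: blend all token vectors using those weights.
        mixed = [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(dim)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Toy 2-dimensional "embeddings" for three tokens (made-up numbers).
tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = self_attention(tokens)
```

Here the first token attends most strongly to itself and to the second token, because their vectors point in similar directions, and least to the third.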

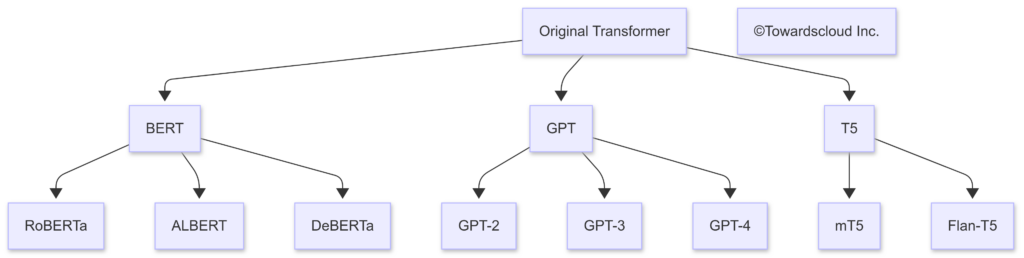

Transformer-Based Architectures in Generative AI

Since the original transformer paper, numerous architectures have built upon this foundation:

Major Transformer-Based Models and Their Applications

| Model Family | Architecture Type | Primary Applications | Notable Examples |
|---|---|---|---|
| BERT | Encoder-only | Understanding, classification, sentiment analysis | Google Search, BERT-based chatbots |
| GPT | Decoder-only | Text generation, creative writing, conversational AI | ChatGPT, GitHub Copilot |
| T5 | Encoder-decoder | Translation, summarization, question answering | Flan-T5, mT5 |
| CLIP | Multi-modal | Image-text understanding, zero-shot classification | DALL-E 2 and Stable Diffusion (both use CLIP components) |

Call to Action: Which of these transformer models have you interacted with? Many popular AI tools like ChatGPT, GitHub Copilot, and Google Translate are powered by these architectures. Have you noticed differences in their capabilities?

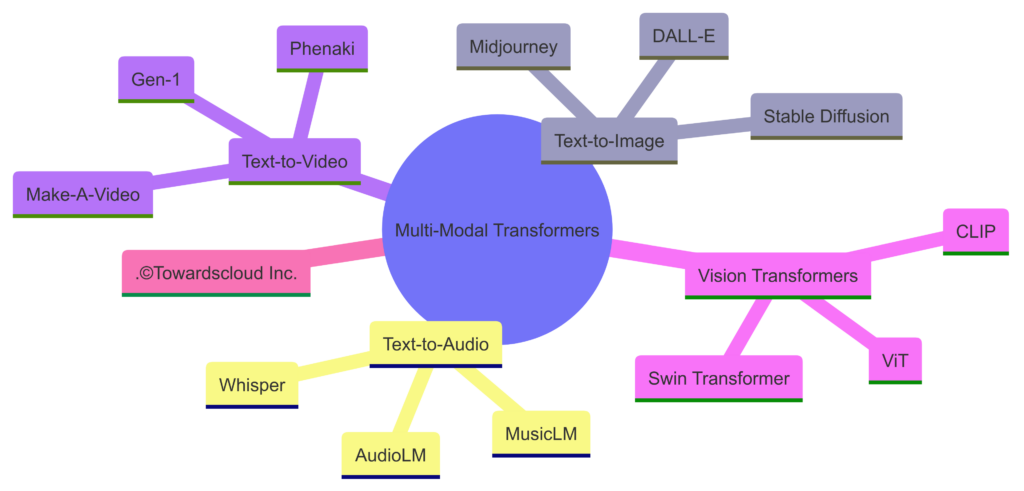

Transformers Beyond Text: Multi-Modal Applications

While transformers began in the realm of natural language processing, they’ve expanded to handle multiple types of data:

Text-to-Image Generation

Models like DALL-E 2 and Stable Diffusion combine transformer-based text encoders with diffusion-based image generators to convert text descriptions into images. Midjourney offers similar capabilities, though its architecture is not publicly documented. These systems use the relationships between words in your prompt to guide the visual elements they generate.

Vision Transformers

The Vision Transformer (ViT) applies the transformer architecture to computer vision tasks by treating images as sequences of patches, similar to how text is treated as sequences of tokens.
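The patch idea can be sketched in plain Python. The example below is an illustration under simplifying assumptions: a tiny single-channel image stored as a list of rows, and a hypothetical helper named `image_to_patches`. In an actual ViT, each flattened patch is then linearly projected to an embedding and combined with a position embedding before entering the transformer.

```python
def image_to_patches(image, patch_size):
    """Split a 2D grid of pixels into flattened, non-overlapping patches."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    patches = []
    # Walk the image in row-major order, one patch at a time.
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [
                image[top + r][left + c]
                for r in range(patch_size)
                for c in range(patch_size)
            ]
            patches.append(patch)
    return patches

# A 4x4 single-channel "image" whose pixel values are 0..15.
image = [[row * 4 + col for col in range(4)] for row in range(4)]
patches = image_to_patches(image, patch_size=2)
# patches is now a sequence of 4 "tokens", each holding 4 pixel values.
```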

Multi-Modal Understanding

CLIP (Contrastive Language-Image Pre-training) can understand both images and text, creating a shared embedding space that allows for remarkable zero-shot capabilities.
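To make the shared embedding space concrete, here is a small sketch of zero-shot classification by cosine similarity. The embeddings are made-up 3-dimensional vectors standing in for the outputs of CLIP's image and text encoders (real embeddings have hundreds of dimensions), and `zero_shot_classify` is a hypothetical helper, not part of any CLIP library.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def zero_shot_classify(image_embedding, label_embeddings):
    """Pick the text label whose embedding is closest to the image embedding."""
    scores = {
        label: cosine_similarity(image_embedding, emb)
        for label, emb in label_embeddings.items()
    }
    best = max(scores, key=scores.get)
    return best, scores

# Made-up embeddings; in practice these come from the text and image encoders.
label_embeddings = {
    "a photo of a dog": [0.9, 0.1, 0.0],
    "a photo of a cat": [0.1, 0.9, 0.0],
    "a photo of a car": [0.0, 0.1, 0.9],
}
image_embedding = [0.8, 0.3, 0.1]
best_label, scores = zero_shot_classify(image_embedding, label_embeddings)
```

Because the labels are ordinary text, new categories can be added by embedding new captions, with no retraining of the model.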

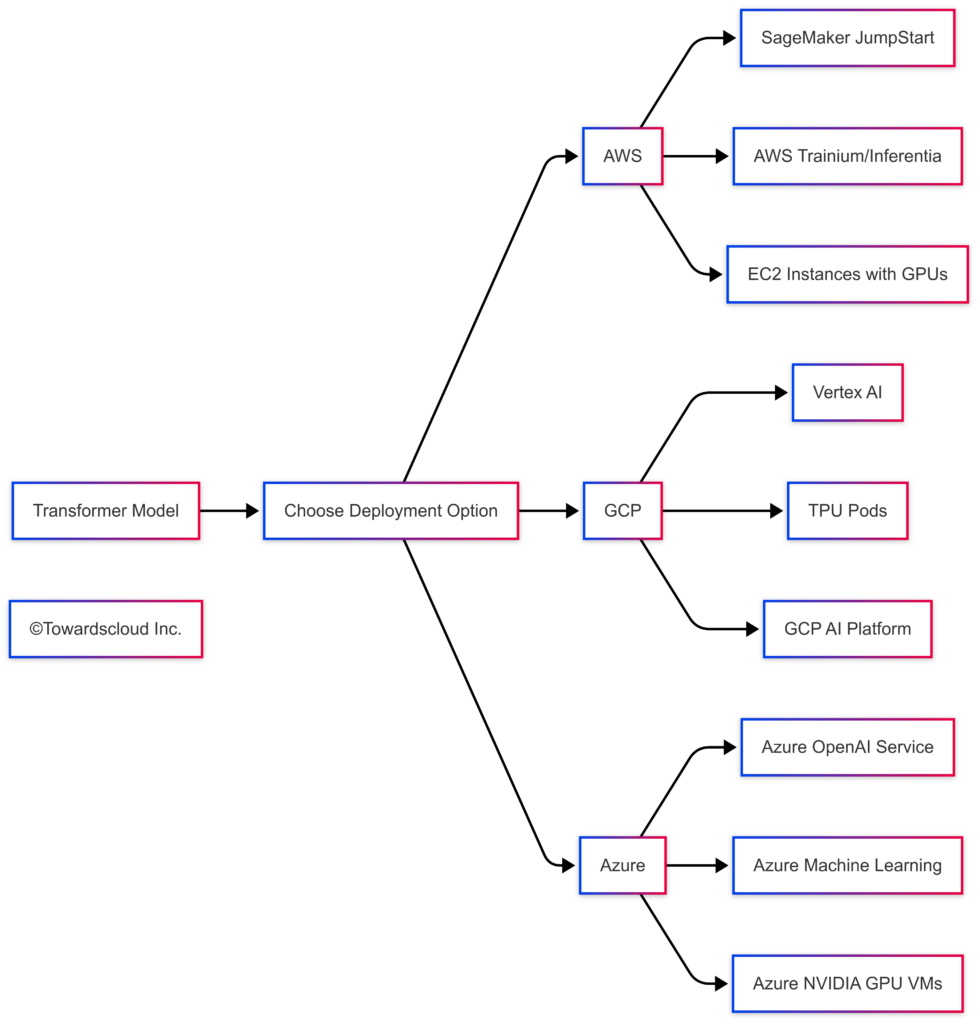

Cloud Infrastructure for Transformer Models

All major cloud providers offer specialized infrastructure for deploying and running transformer-based generative AI models:

| Cloud Provider | Key Services | Transformer-Specific Features |
|---|---|---|
| AWS | SageMaker JumpStart, AWS Trainium | Pre-trained transformer models, custom training chips |
| GCP | Vertex AI, TPU | TPU architecture optimized for transformers, Model Garden |
| Azure | Azure OpenAI Service, Azure ML | Direct access to GPT models, specialized inference endpoints |

Call to Action: Are you currently deploying AI models on cloud infrastructure? What challenges have you faced with transformer-based models? Share your experiences and best practices in the comments!

Technical Deep Dive: Key Components of Transformers

Let’s explore the essential components that make transformers so powerful:

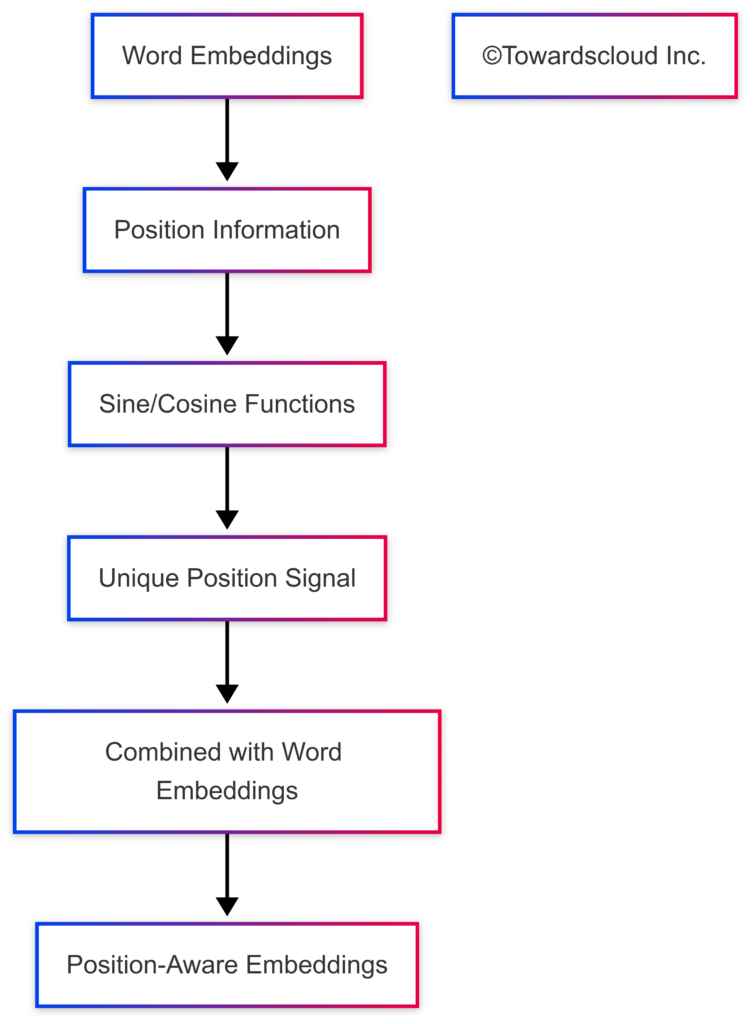

1. Positional Encoding

Since transformers process all tokens in parallel, they need a way to understand the order of tokens in a sequence. In the original paper, positional encoding uses sine and cosine functions at different frequencies to create a unique position signal for each token, which is added to the token's embedding.
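The sine and cosine scheme can be sketched as follows. This follows the formula from the original paper, where even dimensions use sine, odd dimensions use cosine, and each pair of dimensions shares a frequency. The function name `positional_encoding` and the small sizes are choices made for this example.

```python
import math

def positional_encoding(seq_len, d_model):
    """Return a seq_len x d_model table of sinusoidal position signals."""
    table = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            # Dimensions 2k and 2k+1 share the exponent 2k / d_model.
            angle = pos / (10000 ** ((i - i % 2) / d_model))
            # Even dimensions use sine, odd dimensions use cosine.
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table

pe = positional_encoding(seq_len=4, d_model=8)
# pe[pos] is the vector added to the embedding of the token at position pos.
```

Position 0 always encodes to alternating 0s and 1s (sin 0 and cos 0), and every later position gets a different vector, which is what lets the model tell positions apart.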

2. Multi-Head Attention

Transformers use multiple attention “heads” that can focus on different aspects of the data in parallel:

# Simplified Multi-Head Attention in PyTorch

class MultiHeadAttention(nn.Module):

def __init__(self, d_model, num_heads):

super().__init__()

self.d_model = d_model

self.num_heads = num_heads

self.head_dim = d_model // num_heads

self.q_linear = nn.Linear(d_model, d_model)

self.k_linear = nn.Linear(d_model, d_model)

self.v_linear = nn.Linear(d_model, d_model)

self.out = nn.Linear(d_model, d_model)

def forward(self, query, key, value, mask=None):

batch_size = query.shape[0]

# Linear projections and reshape for multi-head

q = self.q_linear(query).view(batch_size, -1, self.num_heads, self.head_dim).transpose(1, 2)

k = self.k_linear(key).view(batch_size, -1, self.num_heads, self.head_dim).transpose(1, 2)

v = self.v_linear(value).view(batch_size, -1, self.num_heads, self.head_dim).transpose(1, 2)

# Attention scores

scores = torch.matmul(q, k.transpose(-2, -1)) / math.sqrt(self.head_dim)

# Apply mask if provided

if mask is not None:

scores = scores.masked_fill(mask == 0, -1e9)

# Softmax and apply to values

attention = torch.softmax(scores, dim=-1)

output = torch.matmul(attention, v)

# Reshape and apply output projection

output = output.transpose(1, 2).contiguous().view(batch_size, -1, self.d_model)

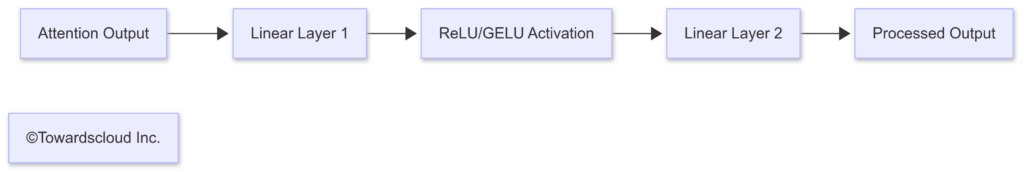

return self.out(output)3. Feed-Forward Networks

After the attention sublayer in each block, transformers apply a position-wise feed-forward network to every token independently. These networks typically expand the dimensionality in the first layer and then project back to the original dimension, allowing for more complex representations.
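The expand-then-project pattern can be sketched in plain Python. This is an illustration under stated assumptions: random untrained weights, a ReLU activation, and a 4x expansion (the ratio used in the original paper, here shrunk to 4 and 16 dimensions). The helper names are invented for this example, and real implementations would use a tensor library such as PyTorch.

```python
import random

def linear(x, weights, bias):
    """Apply one dense layer to a token vector; weights has one row per output unit."""
    return [sum(xi * w for xi, w in zip(x, row)) + b for row, b in zip(weights, bias)]

def relu(x):
    """Zero out negative activations."""
    return [max(0.0, v) for v in x]

def feed_forward(x, w1, b1, w2, b2):
    hidden = relu(linear(x, w1, b1))  # expand: d_model -> d_ff
    return linear(hidden, w2, b2)     # project back: d_ff -> d_model

def random_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

d_model, d_ff = 4, 16  # illustrative sizes with a 4x expansion
rng = random.Random(0)
w1, b1 = random_matrix(d_ff, d_model, rng), [0.0] * d_ff
w2, b2 = random_matrix(d_model, d_ff, rng), [0.0] * d_model

token = [0.5, -1.0, 0.25, 2.0]
hidden = relu(linear(token, w1, b1))
output = feed_forward(token, w1, b1, w2, b2)
```

The same weights are applied to every token position, so this step mixes information within a token's representation, while attention mixes information between tokens.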

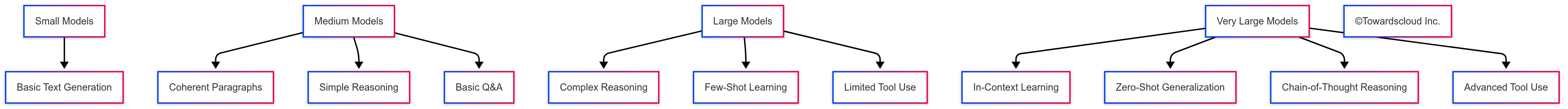

Scaling Laws and Emergent Abilities

One of the most fascinating aspects of transformer models is how they exhibit emergent abilities as they scale.

As transformers grow larger, they don't just get incrementally better at the same tasks; they can develop capabilities that smaller models lack entirely. Research from Anthropic, OpenAI, and others has reported that some of these emergent abilities appear abruptly at certain scale thresholds.

Call to Action: Have you noticed how larger language models seem to “understand” tasks they weren’t explicitly trained for? This emergence of capabilities is one of the most exciting areas of AI research. What emergent abilities have you observed in your interactions with advanced AI systems?

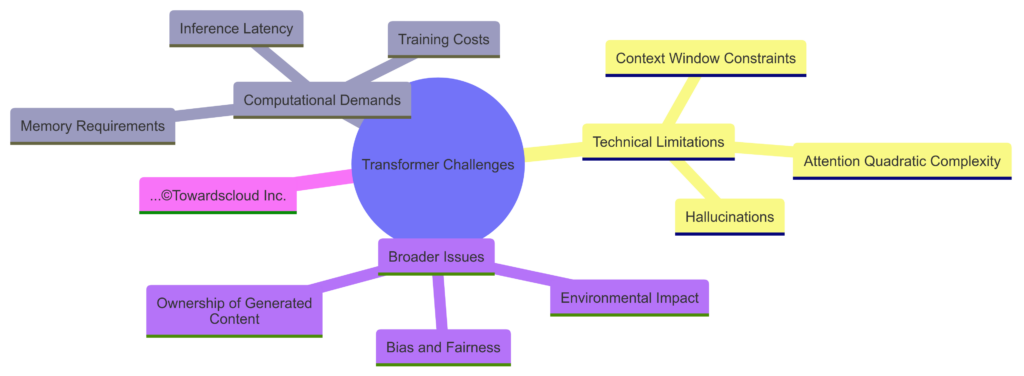

Challenges and Limitations of Transformers

Despite their tremendous success, transformers face several significant challenges:

1. Computational Efficiency

The self-attention mechanism scales quadratically with sequence length (O(n²)), creating significant computational demands for long sequences.
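A quick back-of-the-envelope calculation shows what quadratic growth means in practice. The sketch below counts the entries in one attention score matrix and converts them to memory, assuming 2 bytes per value (16-bit floats) and a single head in a single layer. The function names are made up for this example.

```python
def attention_score_count(seq_len):
    """Every token scores every token, so the matrix has n * n entries."""
    return seq_len * seq_len

def attention_matrix_bytes(seq_len, bytes_per_value=2):
    """Memory for one head's full score matrix (assumes 16-bit values by default)."""
    return attention_score_count(seq_len) * bytes_per_value

# Memory in gigabytes for three sequence lengths.
sizes_gb = {n: attention_matrix_bytes(n) / 1e9 for n in (1_000, 10_000, 100_000)}
```

Making the sequence 10 times longer makes the score matrix 100 times larger. Under these assumptions, a 100,000-token sequence needs about 20 GB for one head's scores alone if the matrix is stored in full.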

2. Context Window Limitations

Traditional transformers have limited context windows, though recent models such as Anthropic's Claude and Google's Gemini have pushed these boundaries considerably.

3. Hallucinations and Factuality

Transformers can generate plausible-sounding but factually incorrect information, presenting challenges for applications requiring high accuracy.

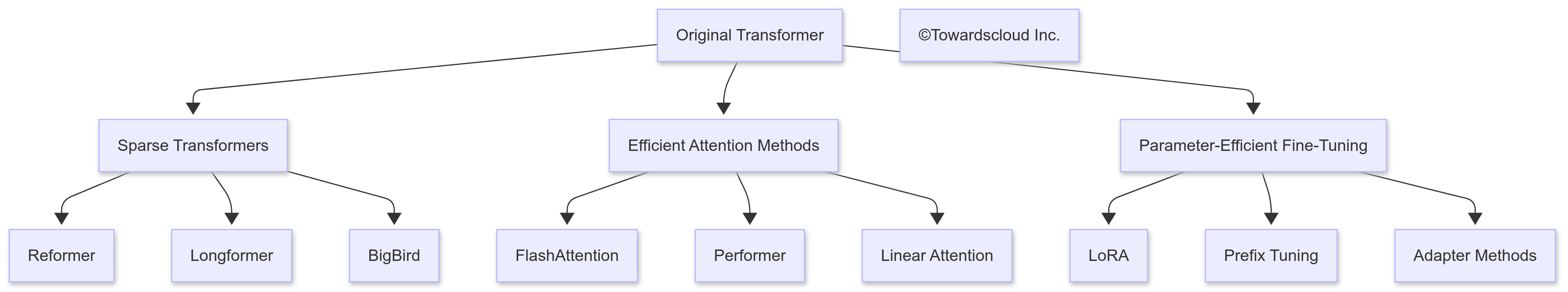

Recent Innovations in Transformer Architecture

Researchers continue to improve and extend the transformer architecture:

Efficient Attention Mechanisms

Models like Reformer, Longformer, and BigBird reduce the quadratic complexity of attention through techniques like locality-sensitive hashing and sparse attention patterns.
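As one illustration of a sparse attention pattern, the sketch below builds a sliding-window mask in which each token attends only to neighbors within a fixed distance. This is a simplified version of the local-window component used by models like Longformer, which also add a few globally attending tokens. The function names and sizes are assumptions made for this example.

```python
def sliding_window_mask(seq_len, window):
    """mask[i][j] is True when token i may attend to token j."""
    return [[abs(i - j) <= window for j in range(seq_len)] for i in range(seq_len)]

def count_attended_pairs(mask):
    """Number of (query, key) pairs that actually get computed."""
    return sum(sum(row) for row in mask)

seq_len = 8
mask = sliding_window_mask(seq_len, window=1)
sparse_pairs = count_attended_pairs(mask)  # grows linearly with seq_len
full_pairs = seq_len * seq_len             # grows quadratically with seq_len
```

With a fixed window, the number of computed pairs grows roughly as n times the window size rather than n squared, which is where the savings on long sequences come from.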

Parameter-Efficient Fine-Tuning

Methods like LoRA (Low-Rank Adaptation) and Prefix Tuning allow for efficient adaptation of large pre-trained models without modifying all parameters.
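The saving from LoRA can be sketched with a parameter count and a tiny worked example. LoRA keeps the pre-trained weight matrix W frozen and learns two small factors, B and A, whose product is added to W. The layer size (4096 x 4096), the rank (8), and the helper names below are illustrative assumptions, not values from any particular model.

```python
def lora_trainable_params(d_out, d_in, rank):
    """Parameters in the low-rank factors B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)

def matmul(a, b):
    """Plain-Python matrix product of a (n x k) and b (k x m)."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]

def lora_weight(frozen_w, lora_b, lora_a, scale=1.0):
    """Effective weight W + scale * (B @ A); only A and B would be trained."""
    delta = matmul(lora_b, lora_a)
    return [
        [w + scale * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(frozen_w, delta)
    ]

# Parameter count for one hypothetical 4096 x 4096 layer at rank 8.
full_params = 4096 * 4096
lora_params = lora_trainable_params(4096, 4096, rank=8)

# Tiny rank-1 example: a 2 x 2 frozen weight plus a low-rank update.
frozen_w = [[1.0, 0.0], [0.0, 1.0]]
lora_b = [[1.0], [2.0]]  # 2 x 1
lora_a = [[0.5, 0.0]]    # 1 x 2
adapted = lora_weight(frozen_w, lora_b, lora_a)
```

In this example the adapter trains 65,536 values instead of roughly 16.8 million, a 256-fold reduction for that layer, while the frozen weights stay untouched.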

Attention Optimizations

Techniques like FlashAttention optimize the memory usage and computational efficiency of attention calculations, enabling faster training and inference.
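One idea behind FlashAttention is that softmax can be computed block by block, so the full score matrix never has to be held in memory at once. The sketch below shows this "online softmax" trick for a single query and scalar values, and checks it against the straightforward version. It is only an illustration of the numerical idea: the real technique also tiles over queries and fuses the work into GPU kernels, and the function names here are invented.

```python
import math

def attention_row_full(scores, values):
    """Reference: softmax over all scores at once, then a weighted sum."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    return sum(e * v for e, v in zip(exps, values)) / sum(exps)

def attention_row_tiled(scores, values, block_size):
    """Same result, computed one block of scores at a time."""
    running_max = float("-inf")
    running_sum = 0.0  # sum of exp(score - running_max) seen so far
    running_out = 0.0  # unnormalized weighted sum of values seen so far
    for start in range(0, len(scores), block_size):
        block_scores = scores[start:start + block_size]
        block_values = values[start:start + block_size]
        new_max = max(running_max, max(block_scores))
        # Rescale earlier partial results to the new maximum.
        correction = math.exp(running_max - new_max)
        running_sum *= correction
        running_out *= correction
        for s, v in zip(block_scores, block_values):
            w = math.exp(s - new_max)
            running_sum += w
            running_out += w * v
        running_max = new_max
    return running_out / running_sum

scores = [0.1, 2.0, -1.0, 3.5, 0.7]
values = [1.0, 2.0, 3.0, 4.0, 5.0]
full_result = attention_row_full(scores, values)
tiled_result = attention_row_tiled(scores, values, block_size=2)
```

The two results agree up to floating-point rounding, which illustrates why this style of optimization changes speed and memory use without changing what the attention layer computes.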

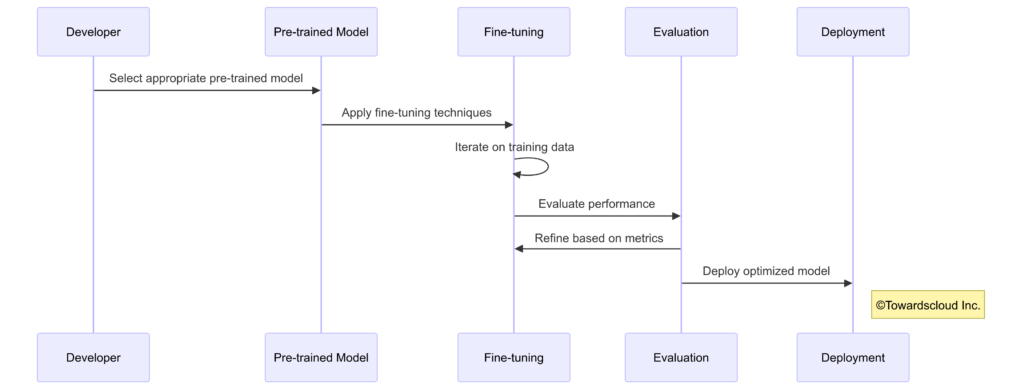

Building and Fine-Tuning Transformer Models

For developers looking to work with transformer models, here’s a practical approach:

1. Leverage Pre-trained Models

Most developers will start with pre-trained models available through libraries like Hugging Face Transformers:

```python
# Loading a pre-trained transformer model
from transformers import AutoModelForCausalLM, AutoTokenizer

# Load model and tokenizer
model_name = "gpt2"
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(model_name)

# Tokenize the prompt (returns input_ids and attention_mask)
input_text = "The transformer architecture has revolutionized"
inputs = tokenizer(input_text, return_tensors="pt")

# Generate text; GPT-2 has no pad token, so reuse the end-of-sequence token
output = model.generate(**inputs, max_length=100, pad_token_id=tokenizer.eos_token_id)
generated_text = tokenizer.decode(output[0], skip_special_tokens=True)
print(generated_text)
```

2. Fine-Tuning for Specific Tasks

Fine-tuning adapts pre-trained models to specific tasks with much less data than full training:

| Fine-Tuning Method | Description | Best For |
|---|---|---|
| Full Fine-Tuning | Update all model parameters | When you have sufficient data and computational resources |
| LoRA | Low-rank adaptation of specific layers | Resource-constrained environments, preserving general capabilities |
| Prefix Tuning | Adding trainable prefix tokens | When you want to maintain the original model intact |
| Instruction Tuning | Fine-tuning on instruction-following examples | Improving alignment with human preferences |

Call to Action: Have you experimented with fine-tuning transformer models? What approaches worked best for your use case? Share your experiences in the comments section!

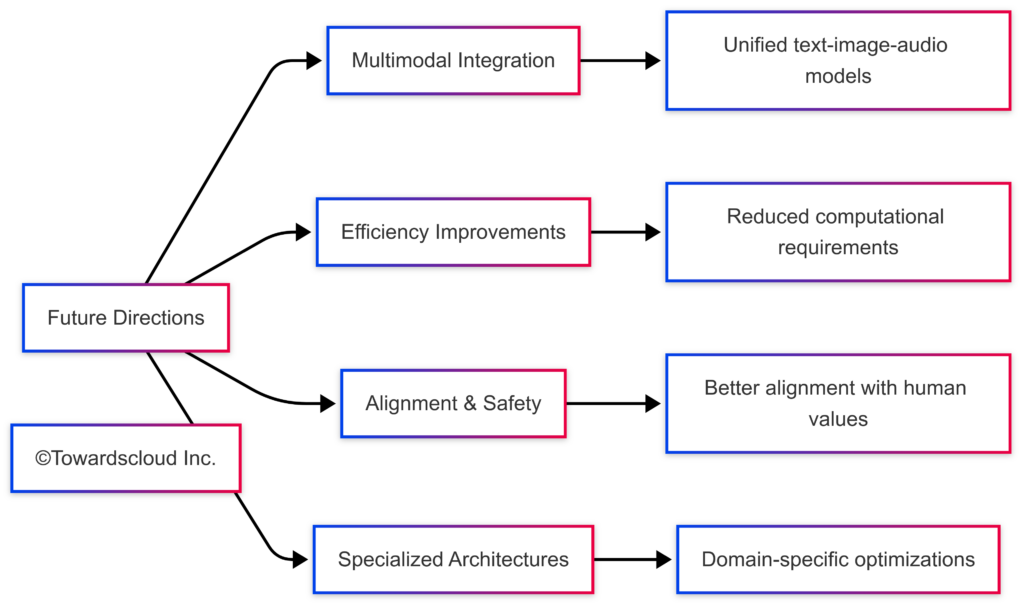

The Future of Transformers in Generative AI

As we look ahead, several trends are shaping the future of transformer-based generative AI:

1. Multimodal Unification

Future transformers will increasingly integrate multiple modalities (text, image, audio, video) into unified models that can seamlessly translate between different forms of media.

2. Efficiency at Scale

Research into more efficient attention mechanisms, model compression, and specialized hardware will continue to reduce the computational demands of transformer models.

3. Improved Alignment and Safety

Techniques like Constitutional AI and Reinforcement Learning from Human Feedback (RLHF) will lead to models that better align with human values and expectations.

4. Domain-Specific Transformers

We’ll likely see more specialized transformer architectures optimized for specific domains like healthcare, legal, scientific research, and creative content.

Conclusion

Transformers have fundamentally transformed the landscape of generative AI, enabling capabilities that seemed impossible just a few years ago. From their humble beginnings as a new architecture for machine translation, they’ve evolved into the foundation for systems that can write, converse, generate images, understand multiple languages, and much more.

As cloud infrastructure continues to evolve to support these models, the barriers to developing and deploying transformer-based AI continue to fall, making this technology accessible to an ever-wider range of developers and organizations.

The future of transformers in generative AI is bright, with ongoing research promising even more impressive capabilities, greater efficiency, and better alignment with human needs and values.

Call to Action: What excites you most about the future of transformer-based generative AI? Are you working on any projects that leverage these models? Share your thoughts, questions, and experiences in the comments below, and don’t forget to subscribe to our newsletter for more in-depth content on AI and cloud technologies!