Deepfakes represent one of the most significant technological challenges of our time, blending advanced AI capabilities with potential societal impacts. Let’s explore this fascinating yet concerning technology, its implementations across cloud platforms, and the associated costs.

What Are Deepfakes?

Deepfakes are synthetic media where a person’s likeness is replaced with someone else’s using deep learning techniques. These technologies typically leverage:

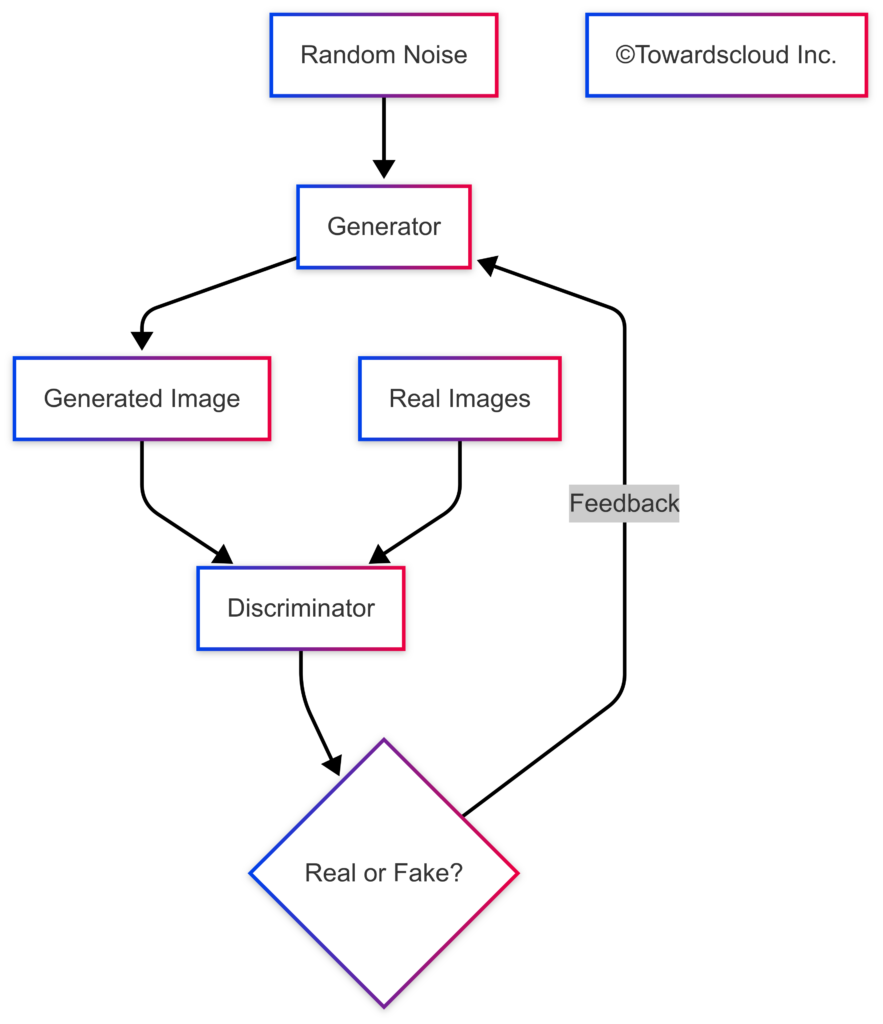

- Generative Adversarial Networks (GANs) – Two neural networks (generator and discriminator) work against each other

- Autoencoders – Neural networks that learn efficient data representations

- Diffusion Models – Models that learn to reverse a gradual noising process, generating data by progressively removing noise
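For reference, the adversarial game in the GAN bullet is commonly written as the minimax objective below. The generator G maps random noise z to a synthetic sample, and the discriminator D outputs the probability that its input is real. This is the standard textbook formulation, not necessarily the exact loss that any particular deepfake tool uses:

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator maximizes this value by scoring real samples near 1 and generated ones near 0. The generator minimizes it by pushing D(G(z)) toward 1. In the idealized analysis, the game reaches equilibrium when the generated distribution matches the data distribution and D outputs 1/2 everywhere.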

Real-World Impacts of Deepfakes

Deepfakes have both harmful and beneficial implications across many domains:

- Misinformation & Disinformation – Creation of fake news, political manipulation

- Identity Theft & Fraud – Impersonation for financial gain

- Online Harassment – Non-consensual synthetic content

- Entertainment & Creative Applications – Film production, advertising

- Training & Education – Simulations in healthcare and other fields

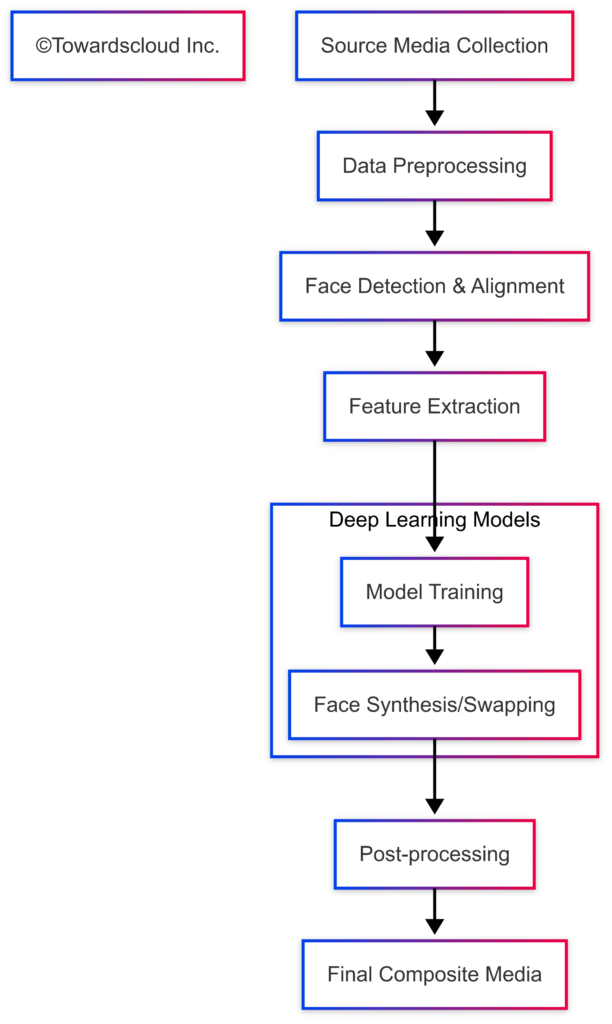

How Deepfakes Are Created

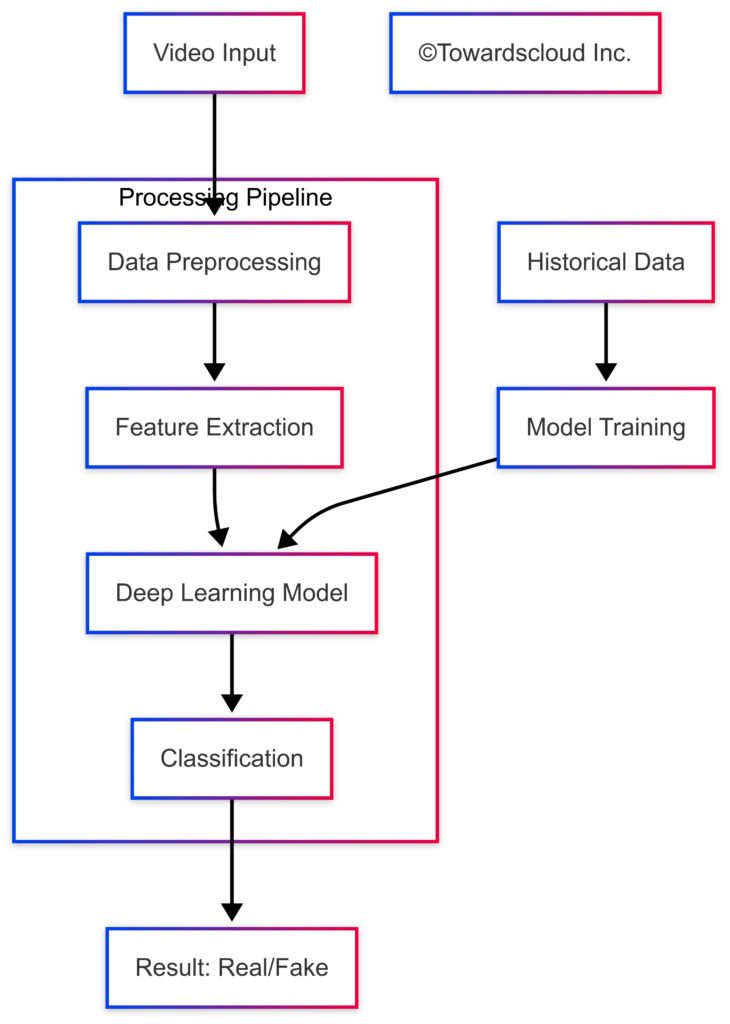

Deepfakes are created through sophisticated AI processes that manipulate or generate visual and audio content. Let’s explore the technical pipeline behind deepfake creation:

The Technical Process Behind Deepfakes

1. Data Collection

The first step involves gathering source material:

- Target Media: The video/image where faces will be replaced

- Source Media: The face that will be swapped into the target

- High-Quality Data: Better results require diverse expressions, angles, and lighting conditions

- Volume Requirements: Most deepfake models need hundreds to thousands of images for realistic results

2. Preprocessing & Feature Extraction

Before training, the data undergoes extensive preparation:

Deepfake Preprocessing Pipeline

Key preprocessing steps include:

- Face Detection: Identifying and isolating facial regions

- Facial Landmark Detection: Locating key points like eyes, nose, and mouth

- Alignment: Normalizing face orientation

- Color Correction: Ensuring consistent lighting and contrast

- Resizing: Standardizing dimensions for model input
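The alignment step can be illustrated using landmark coordinates alone. The sketch below assumes that a landmark detector has already returned eye centres as (x, y) pixel coordinates. The input values and helper names are hypothetical. The code measures the tilt of the eye line, rotates every landmark so the eyes become level, and scales the result to a fixed inter-eye distance. The same normalization is common in face recognition and detection pipelines. In a real pipeline, the same rotation and scale would be applied to the image pixels, for example with OpenCV's `cv2.getRotationMatrix2D` and `cv2.warpAffine`. The sign convention should be checked against the image coordinate system in use.

```python
import math


def eye_alignment_angle(left_eye, right_eye):
    """Tilt (degrees) of the line from the left-eye centre to the right-eye centre."""
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    return math.degrees(math.atan2(dy, dx))


def rotate_point(point, center, angle_deg):
    """Rotate `point` about `center` by -angle_deg, undoing a tilt of angle_deg."""
    theta = math.radians(-angle_deg)
    x, y = point[0] - center[0], point[1] - center[1]
    return (center[0] + x * math.cos(theta) - y * math.sin(theta),
            center[1] + x * math.sin(theta) + y * math.cos(theta))


def align_landmarks(landmarks, left_eye, right_eye, target_eye_dist=64.0):
    """Level the eyes, scale to a fixed inter-eye distance, and centre on the eye midpoint."""
    angle = eye_alignment_angle(left_eye, right_eye)
    center = ((left_eye[0] + right_eye[0]) / 2, (left_eye[1] + right_eye[1]) / 2)
    scale = target_eye_dist / math.dist(left_eye, right_eye)
    aligned = []
    for point in landmarks:
        rx, ry = rotate_point(point, center, angle)
        aligned.append(((rx - center[0]) * scale, (ry - center[1]) * scale))
    return aligned


# Hypothetical landmarks: two eye centres and a nose tip on a tilted face.
left, right, nose = (0.0, 0.0), (10.0, 10.0), (3.0, 12.0)
print(eye_alignment_angle(left, right))
print(align_landmarks([left, right, nose], left, right))
```

With the eyes at (0, 0) and (10, 10), the tilt is 45 degrees. After alignment, the eyes sit at (-32, 0) and (32, 0) relative to their midpoint.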

3. Model Training

The core of deepfake creation relies on specialized neural network architectures:

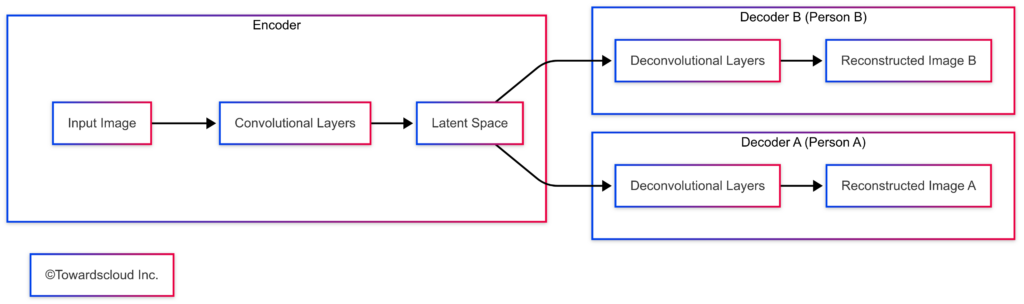

Autoencoder Architecture

GAN Architecture

Common model architectures include:

- Autoencoder-based Methods:
  - Use a shared encoder and two separate decoders
  - The encoder learns to represent facial features in a latent space
  - Each decoder reconstructs a specific person’s face

- GAN-based Methods (Generative Adversarial Networks):
  - A generator creates synthetic faces
  - A discriminator classifies images as real or fake
  - The two networks compete, and this competition pushes the generator toward more realistic output

- Diffusion Models:
  - Learn to reverse a gradual noising process, generating images by removing noise step by step
  - Currently produce some of the most realistic results
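The "add noise" half of a diffusion model has a closed form, so it can be sketched in a few lines. The example below follows the DDPM forward process with a linear noise schedule. The 1,000 steps and the beta range of 1e-4 to 0.02 are the commonly cited defaults and are used here as assumptions. The code precomputes alpha_bar_t and jumps directly from a clean input to its noisy version at step t. The learned "remove noise" half is not shown. That half is a neural network trained to predict the added noise.

```python
import math
import random


def linear_beta_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances beta_t, increasing linearly (DDPM-style defaults, assumed)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = product of (1 - beta_s) for s <= t: the share of signal variance left."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def forward_noise(x0, t, alpha_bars, rng=None):
    """Sample x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps in a single jump.

    x0 is a flat list of pixel values; eps is standard Gaussian noise. Returns (x_t, eps),
    since a DDPM-style denoiser is trained to predict eps from x_t and t.
    """
    rng = rng or random.Random()
    a_bar = alpha_bars[t]
    signal_scale, noise_scale = math.sqrt(a_bar), math.sqrt(1.0 - a_bar)
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    x_t = [signal_scale * x + noise_scale * e for x, e in zip(x0, eps)]
    return x_t, eps


alpha_bars = cumulative_alphas(linear_beta_schedule())
toy_image = [0.5, -0.2, 0.9]  # hypothetical pixel values scaled to [-1, 1]
for t in (0, 250, 999):
    noisy, _ = forward_noise(toy_image, t, alpha_bars, random.Random(0))
    print(t, round(alpha_bars[t], 5), [round(v, 3) for v in noisy])
```

At the last step, alpha_bar is close to zero, so x_t is almost pure Gaussian noise. A trained model starts from noise like this when it generates a new image.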

Autoencoder-based Deepfake Model Training

4. Face Synthesis & Swapping

Once trained, the models can generate the actual deepfake:

- Generation Process:
  - The shared encoder extracts pose and expression from each face in the target media
  - The source person’s decoder reconstructs that face with the source identity
  - For video, this process is applied frame by frame

- Key Techniques:
  - Face Swapping: Replacing an existing face with another
  - Face Reenactment: Transferring expressions from one face to another
  - Puppeteering: Animating a face using another person’s movements

5. Post-processing & Refinement

The raw generated faces typically need additional refinement:

Deepfake Post-processing

Key post-processing techniques include:

- Color Correction: Matching skin tone and lighting

- Blending & Feathering: Creating seamless transitions at boundaries

- Temporal Consistency: Ensuring smooth transitions between frames

- Artifact Removal: Fixing glitches and artifacts

- Resolution Enhancement: Improving detail in the final output
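The temporal-consistency idea can be illustrated with a simple exponential moving average over per-frame landmark or bounding-box positions. This reduces frame-to-frame jitter. The sketch is only an illustration of the concept. The function name and the `alpha` default are assumptions and are not taken from any specific tool.

```python
def smooth_track(points, alpha=0.6):
    """Exponential moving average over per-frame (x, y) positions.

    alpha = 1 reproduces the raw track; smaller values smooth more but lag behind
    real motion. `alpha=0.6` is an arbitrary illustrative default.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    smoothed = []
    for x, y in points:
        if not smoothed:
            smoothed.append((x, y))  # first frame: nothing to average with yet
        else:
            prev_x, prev_y = smoothed[-1]
            smoothed.append((alpha * x + (1 - alpha) * prev_x,
                             alpha * y + (1 - alpha) * prev_y))
    return smoothed


raw = [(100, 50), (104, 50), (98, 50), (103, 50)]  # jittery per-frame positions
print(smooth_track(raw, alpha=0.5))
```

With `alpha=0.5`, the jittery x-positions 100, 104, 98, 103 become 100, 102, 100, 101.5. Smaller `alpha` values smooth more but make the track lag behind genuine motion. Any temporal filter has to balance this trade-off.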

6. Audio Synthesis (For Video Deepfakes)

Modern deepfakes often include voice cloning:

- Voice Conversion: Transforming one person’s voice into another’s while preserving content

- Text-to-Speech: Generating entirely new speech from text using a voice model

- Lip Synchronization: Aligning generated audio with facial movements

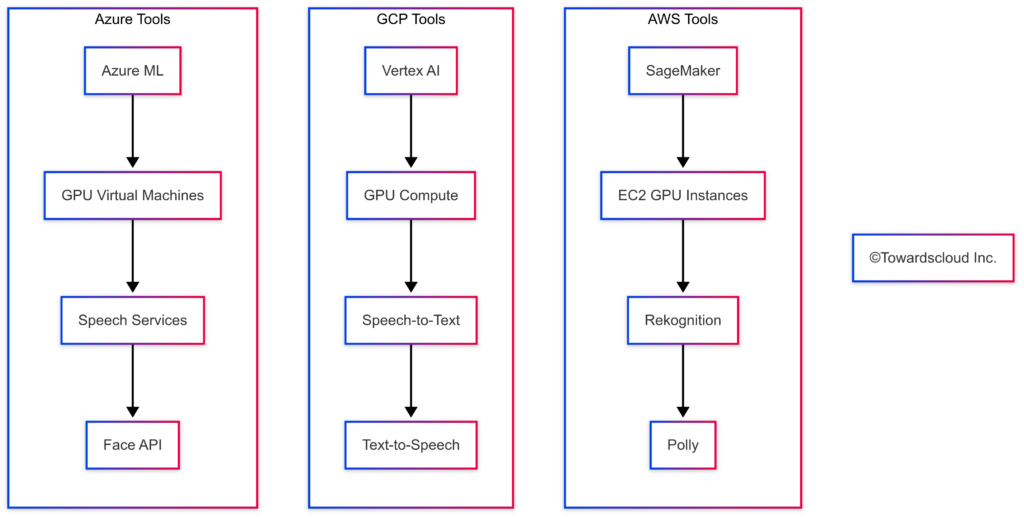

Implementation Comparison Across Cloud Platforms

Let’s compare how each major cloud provider supports deepfake creation (for legitimate purposes):

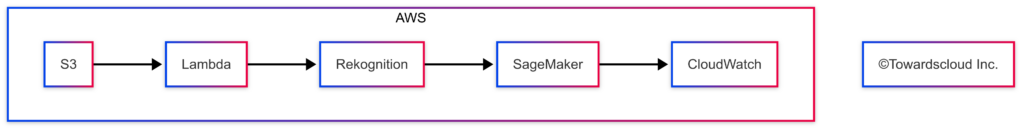

AWS Implementation

AWS supports deepfake creation with services like:

- Amazon SageMaker: For model training and deployment

- EC2 G4/P4 Instances: GPU-optimized computing

- Amazon Rekognition: Face detection and analysis

- Amazon Polly: Text-to-speech capabilities

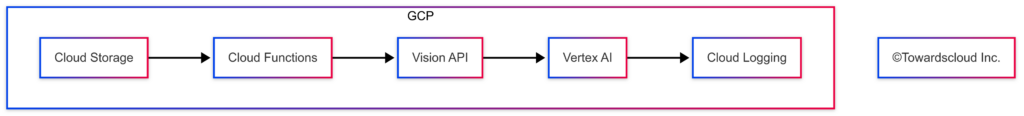

GCP Implementation

Google Cloud offerings include:

- Vertex AI: ML model training and deployment

- T4/V100 GPU Instances: High-performance computing

- Text-to-Speech API: Voice synthesis (Speech-to-Text handles transcription)

- Vision AI: Facial analysis and detection

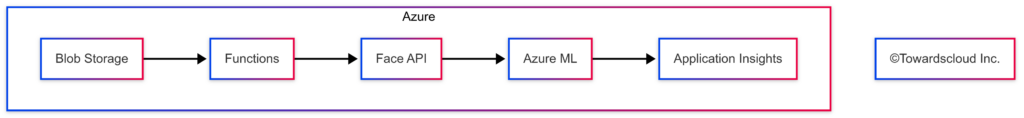

Azure Implementation

Microsoft Azure provides:

- Azure Machine Learning: Model development platform

- NVIDIA GPU VMs: Compute resources

- Speech Services: Voice cloning capabilities

- Face API: Facial detection and analysis

Ethical & Security Implications

It’s crucial to understand that deepfake creation technology has both legitimate uses and potential for misuse:

Legitimate Applications

- Film and entertainment (special effects)

- Privacy protection (anonymizing individuals)

- Educational simulations and demonstrations

- Accessibility solutions (e.g., personalized synthetic voices for people with speech impairments)

Ethical Concerns

- Non-consensual creation of synthetic media

- Political misinformation and propaganda

- Identity theft and fraud

- Erosion of trust in visual media


Deepfake Detection Across Cloud Platforms

AWS Implementation

AWS provides robust services for building deepfake detection systems:

AWS Deepfake Detection Implementation

GCP Implementation

Google Cloud offers several services ideal for deepfake detection:

GCP Deepfake Detection Implementation

Azure Implementation

Microsoft Azure provides powerful services for deepfake detection:

Azure Deepfake Detection Implementation

Implementing a Custom Deepfake Detection Model

For those wanting to deploy a platform-independent solution:

Custom Deepfake Detection Model
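The custom model itself is not reproduced here. A platform-independent detector often scores individual frames with a classifier and then combines those scores into a video-level verdict. The sketch below covers only that aggregation step. It assumes a hypothetical per-frame classifier that returns a probability of manipulation. The function name and both defaults are illustrative.

```python
from statistics import mean


def video_verdict(frame_scores, threshold=0.5, top_fraction=0.2):
    """Combine per-frame fake probabilities into one video-level decision.

    Averages only the highest-scoring `top_fraction` of frames, so a short
    manipulated segment is not diluted by many untouched frames. Both
    defaults are illustrative placeholders, not tuned values.
    """
    if not frame_scores:
        raise ValueError("no frames were scored")
    if not all(0.0 <= s <= 1.0 for s in frame_scores):
        raise ValueError("frame scores must be probabilities in [0, 1]")
    k = max(1, round(len(frame_scores) * top_fraction))
    top_scores = sorted(frame_scores, reverse=True)[:k]
    video_score = mean(top_scores)
    return video_score, video_score >= threshold


# Scores from a hypothetical per-frame classifier: only two frames look manipulated.
scores = [0.1] * 8 + [0.9, 0.95]
print(video_verdict(scores))
```

Consider ten frames where eight score 0.1 and two score 0.9 and 0.95. The top-20% average is 0.925, so the clip is flagged. A plain mean of 0.265 would miss it. The `threshold` and `top_fraction` values would need tuning on validation data.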

Comparing Cloud Implementations

Cost Comparison

Let’s analyze the costs for implementing a deepfake detection system across cloud providers (monthly basis):

| Service Component | AWS | GCP | Azure |
|---|---|---|---|
| Storage (1TB) | S3: $21.85 | Cloud Storage: $19.00 | Blob Storage: $17.48 |
| Compute (10M invocations) | Lambda: $16.34 | Cloud Functions: $15.68 | Functions: $15.52 |
| Face Detection (1M images) | Rekognition: $1,000.00 | Vision API: $1,200.00 | Face API: $1,000.00 |
| ML Inference | SageMaker: $209.00 (ml.g4dn.xlarge) | Vertex AI: $235.00 (n1-standard-4 + T4 GPU) | Azure ML: $240.00 (Standard_NC6s_v3) |
| Monitoring | CloudWatch: $12.00 | Cloud Logging: $9.50 | Application Insights: $16.50 |
| Total (approx.) | $1,259.19 | $1,479.18 | $1,289.50 |

Note: Costs are approximations based on 2025 pricing trends and will vary with usage patterns, regional pricing, and any promotional discounts. Check each cloud provider's pricing page or billing console for the latest charges.
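The totals in the table can be reproduced from the line items. Doing so also shows which components drive the cost. The figures below are copied from the table. The script only performs arithmetic and does not query any pricing API. `Decimal` is used to avoid floating-point rounding in the currency sums.

```python
from decimal import Decimal

# Monthly figures (USD) copied from the cost table above.
COSTS = {
    "AWS": {"storage": "21.85", "compute": "16.34", "face_detection": "1000.00",
            "ml_inference": "209.00", "monitoring": "12.00"},
    "GCP": {"storage": "19.00", "compute": "15.68", "face_detection": "1200.00",
            "ml_inference": "235.00", "monitoring": "9.50"},
    "Azure": {"storage": "17.48", "compute": "15.52", "face_detection": "1000.00",
              "ml_inference": "240.00", "monitoring": "16.50"},
}


def monthly_total(items):
    """Sum every line item for one provider."""
    return sum((Decimal(v) for v in items.values()), Decimal("0"))


def face_detection_share(items):
    """Fraction of the monthly bill spent on the face-detection API."""
    return Decimal(items["face_detection"]) / monthly_total(items)


for provider, items in COSTS.items():
    total = monthly_total(items)
    share = float(face_detection_share(items))
    print(f"{provider}: ${total} total, {share:.0%} on face detection")
```

The sums match the table's totals. Face detection accounts for roughly 78% to 81% of each provider's monthly bill. At this volume, per-image API pricing therefore matters far more than storage or monitoring.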

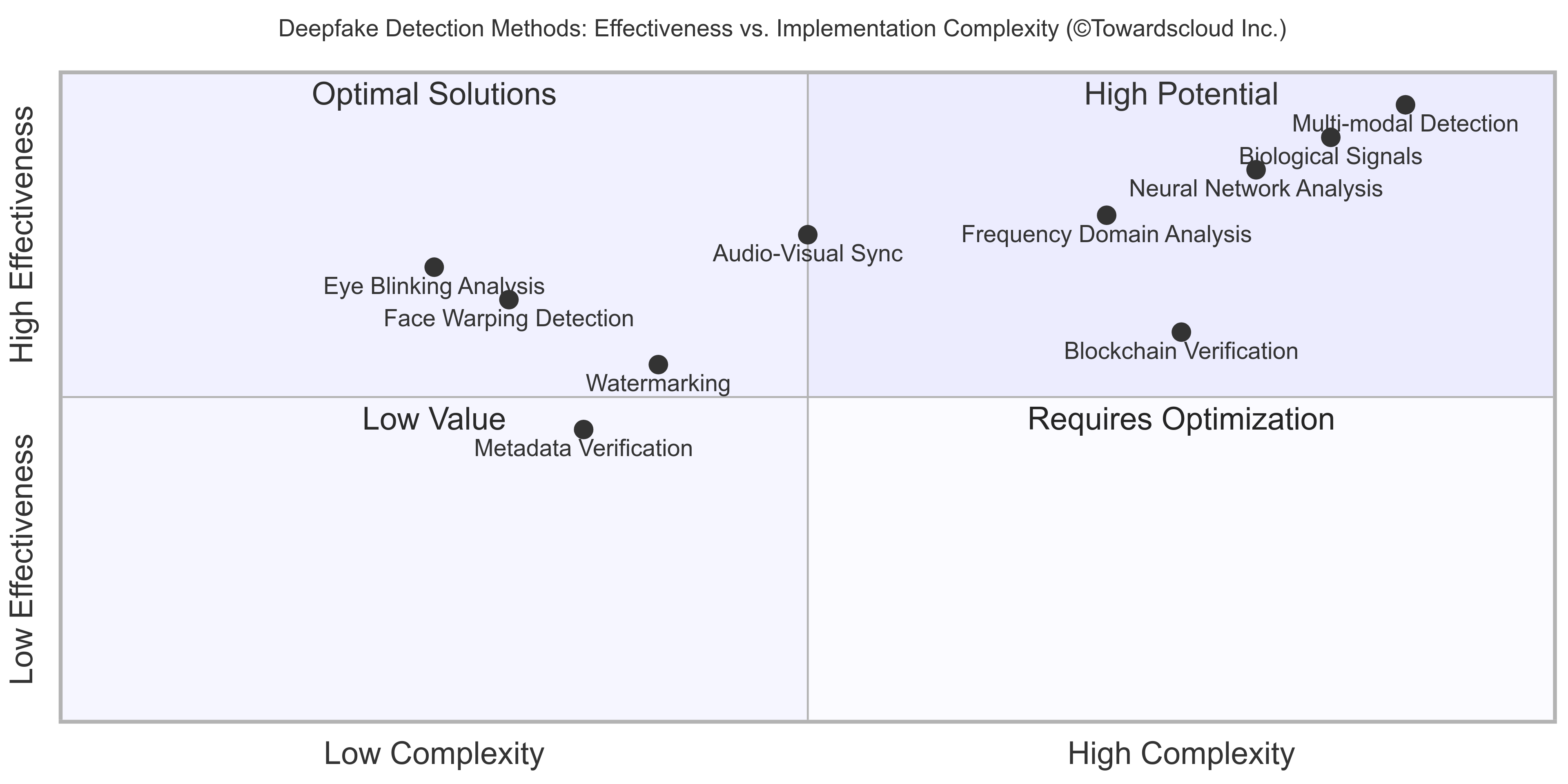

Mitigating Deepfakes: Detection and Prevention

Detection Techniques

- Visual Inconsistencies Analysis
  - Eye blinking patterns
  - Facial texture analysis
  - Lighting inconsistencies
  - Unnatural movements

- Audio-Visual Synchronization
  - Lip-sync analysis
  - Voice pattern matching

- Metadata Analysis
  - Digital fingerprinting
  - Hidden watermarks
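The eye-blinking cue can be quantified with the eye aspect ratio (EAR) described by Soukupová and Čech (2016). The ratio is computed from six landmarks around each eye: EAR = (|p2 - p6| + |p3 - p5|) / (2 |p1 - p4|). EAR drops sharply when the eye closes, so a blink appears as a short run of low-EAR frames. The sketch below assumes that the landmarks come from an external detector. The 0.2 threshold and the two-frame minimum are illustrative assumptions that would need calibrating. An unusual blink rate is only a weak hint, because newer generators can reproduce blinking.

```python
import math


def eye_aspect_ratio(eye):
    """EAR from six landmarks ordered p1..p6.

    p1 and p4 are the eye corners; p2, p3 lie on the upper lid and p6, p5 on the
    lower lid, so (p2, p6) and (p3, p5) are the two vertical pairs.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_series, threshold=0.2, min_frames=2):
    """Count runs of at least `min_frames` consecutive frames with EAR below `threshold`."""
    blinks, run = 0, 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # clip ended while the eye was still closed
        blinks += 1
    return blinks


def blinks_per_minute(ear_series, fps, **kwargs):
    """Blink rate for a clip sampled at `fps` frames per second."""
    minutes = len(ear_series) / fps / 60.0
    return count_blinks(ear_series, **kwargs) / minutes


open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]  # hypothetical landmarks
print(round(eye_aspect_ratio(open_eye), 3))  # 0.667
```

Requiring at least two consecutive low frames prevents a single noisy landmark estimate from being counted as a blink.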

Prevention Strategies

- Digital Content Provenance
  - Content Authenticity Initiative (CAI)
  - Blockchain verification

- Media Literacy Education
  - Public awareness campaigns
  - Educational programs in schools

- Regulatory Frameworks
  - Legal protections
  - Industry standards
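Hashing underlies both the digital fingerprinting and the blockchain verification ideas listed above. The toy log below registers a SHA-256 fingerprint of each published file. It also links every record to the previous one, so altering either a file or the log's history becomes detectable. The class and function names are hypothetical. This sketch does not implement the CAI/C2PA format, which relies on cryptographically signed manifests attached to or referenced by the media. It is also not a distributed blockchain.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def sha256_hex(data: bytes) -> str:
    """Cryptographic fingerprint: any change to `data` yields a different digest."""
    return hashlib.sha256(data).hexdigest()


def _record_digest(content_hash: str, creator: str, prev_hash: str) -> str:
    """Hash of a record's fields, so editing any field breaks the link."""
    payload = {"content_hash": content_hash, "creator": creator, "prev_hash": prev_hash}
    return sha256_hex(json.dumps(payload, sort_keys=True).encode("utf-8"))


class ProvenanceLog:
    """Toy append-only log where each record commits to the one before it."""

    def __init__(self):
        self.records = []

    def register(self, content: bytes, creator: str) -> dict:
        prev_hash = self.records[-1]["record_hash"] if self.records else GENESIS
        content_hash = sha256_hex(content)
        record = {
            "content_hash": content_hash,
            "creator": creator,
            "prev_hash": prev_hash,
            "record_hash": _record_digest(content_hash, creator, prev_hash),
        }
        self.records.append(record)
        return record

    def is_registered(self, content: bytes) -> bool:
        digest = sha256_hex(content)
        return any(r["content_hash"] == digest for r in self.records)

    def chain_is_intact(self) -> bool:
        prev_hash = GENESIS
        for r in self.records:
            expected = _record_digest(r["content_hash"], r["creator"], r["prev_hash"])
            if r["prev_hash"] != prev_hash or r["record_hash"] != expected:
                return False
            prev_hash = r["record_hash"]
        return True


log = ProvenanceLog()
log.register(b"original video bytes", creator="newsroom")
print(log.is_registered(b"original video bytes"))   # True
print(log.is_registered(b"original video bytes!"))  # False: one extra byte changes the hash
print(log.chain_is_intact())                         # True
```

A cryptographic hash changes whenever a file is re-encoded, so a legitimately recompressed copy would also fail the lookup. For this reason, provenance systems rely on signed metadata that travels with the file. Perceptual hashing is the usual alternative when near-duplicate matching is needed.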

Ethical and Legal Considerations

Implementing deepfake detection systems raises several considerations:

- Privacy Concerns
  - Facial data collection and storage
  - Biometric data protection regulations (GDPR, CCPA)

- False Positives/Negatives
  - Impact of wrongful identification
  - Liability considerations

- Regulatory Compliance
  - Regional variations in content laws
  - Cross-border data transfer requirements
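The trade-off between false positives and false negatives becomes concrete once a detector's scores are compared against a threshold. The sketch below computes both error rates at a given threshold. The function name is hypothetical. The scores and labels in the example are made-up values chosen for illustration and are not results from any real detector.

```python
def error_rates(scores, labels, threshold):
    """Return (false_positive_rate, false_negative_rate) at a score threshold.

    scores: detector outputs in [0, 1], higher meaning "more likely fake".
    labels: True for media that really is manipulated, False for authentic media.
    """
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    false_pos = sum(1 for s, fake in zip(scores, labels) if s >= threshold and not fake)
    false_neg = sum(1 for s, fake in zip(scores, labels) if s < threshold and fake)
    authentic = labels.count(False)
    manipulated = labels.count(True)
    fpr = false_pos / authentic if authentic else 0.0
    fnr = false_neg / manipulated if manipulated else 0.0
    return fpr, fnr


# Made-up scores: first five clips are authentic, last five are manipulated.
scores = [0.05, 0.20, 0.40, 0.55, 0.70, 0.45, 0.60, 0.80, 0.90, 0.95]
labels = [False] * 5 + [True] * 5
for threshold in (0.5, 0.75):
    print(threshold, error_rates(scores, labels, threshold))
```

With these example scores, a threshold of 0.5 wrongly flags 2 of 5 authentic clips (FPR 0.4) and misses 1 of 5 fakes (FNR 0.2). Raising the threshold to 0.75 clears every authentic clip but misses 2 of 5 fakes. The choice of threshold is where the wrongful-identification and liability concerns listed above are actually decided.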

Future Developments

The field of deepfake detection continues to evolve rapidly:

- Real-time Detection Systems
  - Low-latency detection in video streams
  - In-browser verification tools

- Multimodal Analysis
  - Combined audio-visual-textual verification
  - Physiological impossibility detection

- Adversarial Training
  - Constantly updating models against new techniques
  - Self-improving systems through GANs

Conclusion

Deepfake creation involves sophisticated AI techniques combining computer vision, deep learning, and digital media processing. While the technical aspects are fascinating, it’s essential to approach this technology responsibly and with awareness of potential ethical implications.

Cloud providers offer powerful tools that enable the creation of deepfakes for legitimate purposes, but users must adhere to terms of service and ethical guidelines when implementing these technologies.

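In the cost estimate above, AWS offers the best overall value at roughly $1,259 per month. GCP is the most expensive at roughly $1,479, mainly because of its higher face-detection line item, although its AI capabilities may justify the premium for some teams. Azure, at roughly $1,290, represents a middle ground with strong integration into enterprise environments.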

As deepfake technology continues to evolve, detection systems must keep pace through continuous model improvement, multi-modal analysis, and real-time capabilities. The ethical dimensions of this technology also require careful consideration, particularly around privacy, false identification, and regulatory compliance.

Stay tuned for more exciting articles on Towardscloud.