Generative vs. Discriminative Models: What’s the Difference?

Introduction

When we dive into the world of machine learning, two fundamental approaches stand out: generative and discriminative models. While they may sound like technical jargon, these approaches represent two different ways of thinking about how machines learn from data. In this article, we’ll break down these concepts into easy-to-understand explanations with real-world examples that show how these models work and why they matter in the rapidly evolving cloud computing landscape.

Call to Action: As you read through this article, try to think about classification problems you’ve encountered in your work or daily life. Which approach would you use to solve them?

The Fundamental Distinction

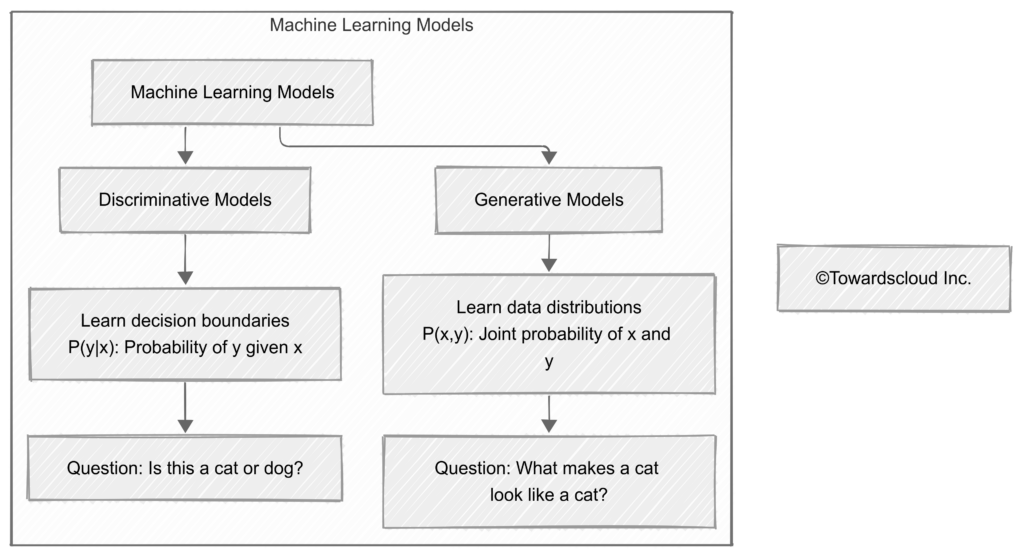

At their core, generative and discriminative models differ in what they’re trying to learn:

- Discriminative models learn the boundaries between classes—they focus on making decisions by finding what differentiates one category from another.

- Generative models learn the underlying distribution of each class: they model what typical examples of each category look like, which also lets them generate new examples that resemble the training data.

Real-World Analogy: The Coffee Shop Example

Let’s use a simple, everyday example to understand these approaches better:

Imagine you’re trying to determine whether a customer is going to order a latte or an espresso at a coffee shop.

The Discriminative Approach

A discriminative model would be like a barista who notices patterns like:

- Customers in business attire usually order espressos

- Customers who come in the morning typically choose lattes

- Customers who seem in a hurry tend to prefer espressos

The barista doesn’t try to understand everything about each type of customer—they just identify features that help predict the order.

The Generative Approach

A generative model would be like a coffee shop owner who creates detailed customer profiles:

- The typical latte drinker arrives between 7-9 AM, spends 15-20 minutes in the shop, often wears casual clothes, and may use the shop’s Wi-Fi

- The typical espresso drinker arrives throughout the day, stays for less than 5 minutes, often wears formal clothes, and rarely sits down

The owner understands the entire “story” behind each type of customer, not just the differences between them.

Call to Action: Think about how you make predictions in your daily life. Do you use more discriminative approaches (focusing on key differences) or generative approaches (building complete mental models)? Try applying both ways of thinking to a problem you’re facing right now!

Mathematical Perspective

To understand these models more deeply, let’s look at the mathematical foundation:

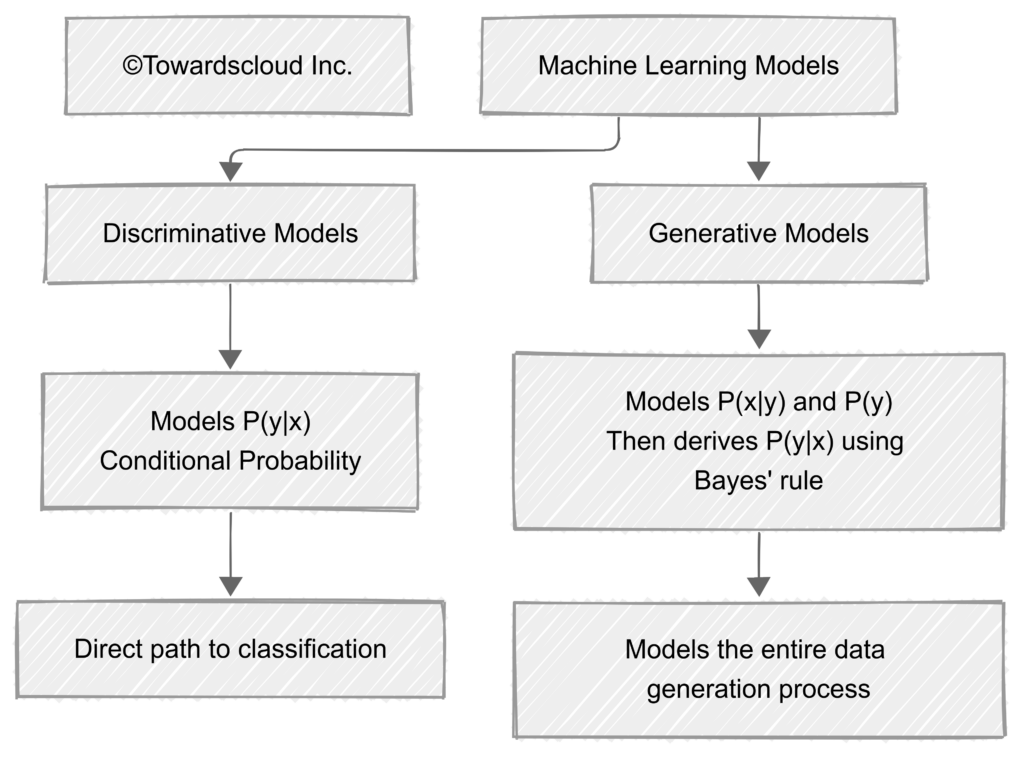

For Discriminative Models:

- They model P(y|x): The probability of a label y given the features x

- Example: What’s the probability this email is spam given its content?

For Generative Models:

- They model P(x|y) and P(y): The probability of observing features x given the class y, and the prior probability of class y

- They can derive P(y|x) using Bayes’ rule: P(y|x) = P(x|y)P(y)/P(x)

- Example: What’s the typical content of spam emails, and what portion of all emails are spam?
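To make Bayes’ rule concrete, here is a minimal sketch in Python. The prior and likelihood values are hypothetical numbers chosen only for illustration; the single feature x is assumed to be "the email contains the word free".

```python
# Hypothetical numbers chosen only for illustration.
prior = {"spam": 0.3, "ham": 0.7}        # P(y)
likelihood = {"spam": 0.8, "ham": 0.1}   # P(x | y): chance the email contains "free"

def posterior(prior, likelihood):
    """Return P(y | x) for every class y using Bayes' rule."""
    # P(x) = sum over y of P(x | y) * P(y)
    evidence = sum(likelihood[y] * prior[y] for y in prior)
    return {y: likelihood[y] * prior[y] / evidence for y in prior}

post = posterior(prior, likelihood)
print(round(post["spam"], 3))  # 0.774
```

A generative model estimates the two dictionaries from data and derives the posterior this way, whereas a discriminative model would estimate the posterior directly and never represent P(x|y).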

Common Examples of Each Model Type

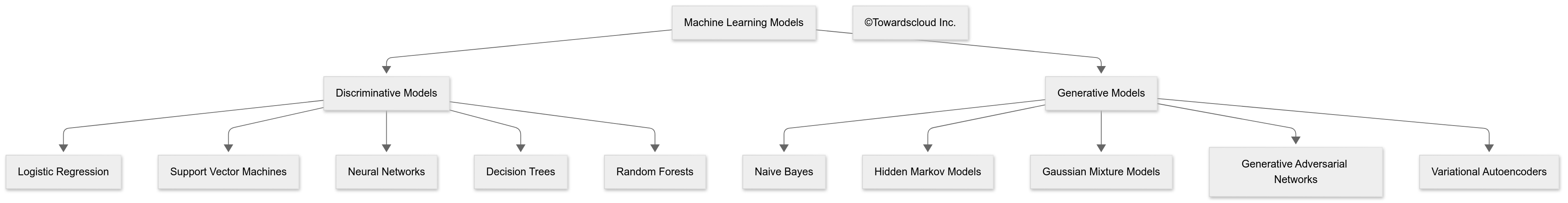

Let’s explore some common algorithms in each category:

Discriminative Models:

- Logistic Regression

- Support Vector Machines (SVMs)

- Neural Networks (most architectures)

- Decision Trees and Random Forests

- Conditional Random Fields

Generative Models:

- Naive Bayes

- Hidden Markov Models

- Gaussian Mixture Models

- Latent Dirichlet Allocation

- Generative Adversarial Networks (GANs)

- Variational Autoencoders (VAEs)

Call to Action: Have you used any of these models in your projects? Share your experience on our community forum and discover how others are applying these techniques in creative ways!

Detailed Comparison: Strengths and Weaknesses

Let’s dive deeper into how these models compare across different dimensions:

| Aspect | Discriminative Models | Generative Models |
| --- | --- | --- |
| Primary Goal | Learn decision boundaries | Learn data distributions |
| Mathematical Foundation | Model P(y\|x) directly | Model P(x\|y) and P(y) |
| Data Efficiency | Often require more data | Can work with less data |
| Handling Missing Features | Struggle with missing data | Can handle missing features better |
| Computational Complexity | Generally faster to train | Often more computationally intensive |
| Interpretability | Can be black boxes (especially neural networks) | Often more interpretable |
| Performance with Limited Data | May overfit with limited data | Often perform better with limited data |
| Ability to Generate New Data | Cannot generate new samples | Can generate new, similar samples |
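Two rows of this comparison, handling missing features and generating new data, can be illustrated with a small sketch. It assumes a Gaussian Naive Bayes style model for the coffee shop example; the feature names and the (mean, standard deviation) values are invented for illustration and loosely follow the customer profiles described earlier.

```python
import math
import random

# Hypothetical per-class profiles (mean, std dev) for two features.
# The numbers are invented for illustration, not fitted to real data.
PROFILES = {
    "latte":    {"arrival_hour": (8.0, 1.0),  "minutes_stayed": (18.0, 4.0)},
    "espresso": {"arrival_hour": (13.0, 3.0), "minutes_stayed": (4.0, 2.0)},
}
PRIORS = {"latte": 0.5, "espresso": 0.5}

def gaussian_pdf(x, mean, std):
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2 * math.pi))

def class_posterior(observed):
    """P(y | observed features). Features absent from `observed` are skipped,
    which marginalizes them out under the naive independence assumption."""
    joint = {}
    for label, profile in PROFILES.items():
        p = PRIORS[label]
        for name, value in observed.items():
            mean, std = profile[name]
            p *= gaussian_pdf(value, mean, std)
        joint[label] = p
    total = sum(joint.values())
    return {label: p / total for label, p in joint.items()}

def sample_customer(label, rng):
    """Generate a synthetic customer by sampling each feature from P(x | y)."""
    return {name: rng.gauss(mean, std) for name, (mean, std) in PROFILES[label].items()}

full = class_posterior({"arrival_hour": 8.5, "minutes_stayed": 16.0})
partial = class_posterior({"minutes_stayed": 16.0})  # arrival time unknown
print(full, partial)
print(sample_customer("latte", random.Random(0)))
```

Because the model stores a distribution for every feature, a missing value can simply be left out of the product, and the same distributions can be sampled to produce new examples. A purely discriminative classifier offers neither operation without extra machinery such as imputation.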

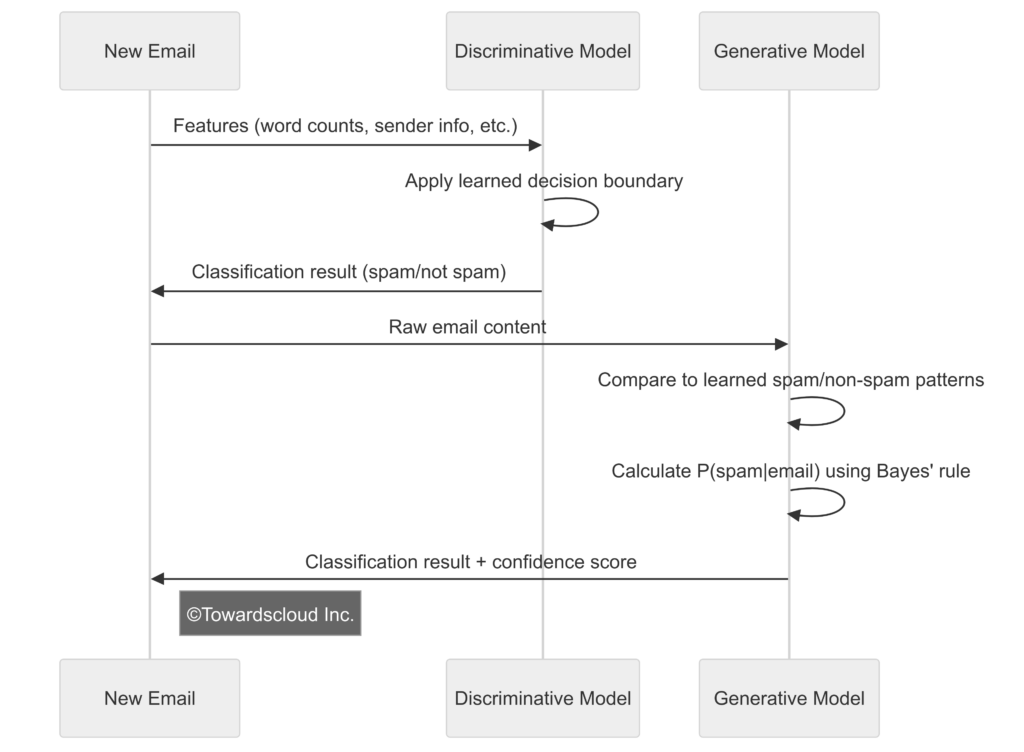

Real-World Application: Email Classification

Let’s see how these approaches would tackle a common problem: email spam classification.

Discriminative Approach (e.g., SVM):

- Extract features from emails (word frequency, sender information, etc.)

- Train the model to find a boundary between spam and non-spam based on these features

- For new emails, check which side of the boundary they fall on
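The three steps above can be sketched in plain Python. This example swaps in logistic regression for the SVM because it is short enough to write from scratch; the four-email corpus, the learning rate, and the epoch count are all made up for illustration.

```python
import math

# Tiny made-up corpus; 1 = spam, 0 = not spam.
EMAILS = [
    ("win free money now", 1),
    ("free prize claim now", 1),
    ("meeting agenda for monday", 0),
    ("lunch on monday", 0),
]
VOCAB = sorted({w for text, _ in EMAILS for w in text.split()})

def featurize(text):
    """Bag-of-words counts over the training vocabulary."""
    words = text.split()
    return [words.count(w) for w in VOCAB]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(weights, bias, x):
    """P(spam | x) modeled directly as sigmoid(w . x + b)."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def train(examples, epochs=200, lr=0.5):
    """Stochastic gradient descent on the log loss."""
    weights, bias = [0.0] * len(VOCAB), 0.0
    for _ in range(epochs):
        for text, y in examples:
            x = featurize(text)
            error = predict_proba(weights, bias, x) - y  # d(log loss) / d(w . x + b)
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias

weights, bias = train(EMAILS)
p_spam = predict_proba(weights, bias, featurize("claim free money"))
p_ham = predict_proba(weights, bias, featurize("agenda for lunch"))
print(p_spam > 0.5, p_ham > 0.5)
```

The model only learns weights that separate the two classes. It never describes what a spam email looks like on its own, which is why it cannot be used to generate one.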

Generative Approach (e.g., Naive Bayes):

- Learn the typical characteristics of spam emails (what words frequently appear, typical formats)

- Learn the typical characteristics of legitimate emails

- For a new email, compare how well it matches each category and classify accordingly
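A minimal sketch of the generative steps, assuming a multinomial Naive Bayes model with add-one smoothing. The training emails are invented for illustration, and the helper names are hypothetical rather than taken from any library.

```python
import math
from collections import Counter

# Tiny made-up corpus; labels and messages are illustrative only.
TRAIN = [
    ("win free money now", "spam"),
    ("free prize claim now", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch on monday", "ham"),
]

def train_naive_bayes(examples):
    """Estimate class counts for P(y) and per-class word counts for P(word | y)."""
    class_counts = Counter(label for _, label in examples)
    word_counts = {label: Counter() for label in class_counts}
    for text, label in examples:
        word_counts[label].update(text.split())
    vocab = {w for counts in word_counts.values() for w in counts}
    return class_counts, word_counts, vocab

def log_joint(text, label, model):
    """Return log P(y) + sum of log P(word | y), with add-one smoothing."""
    class_counts, word_counts, vocab = model
    total_docs = sum(class_counts.values())
    total_words = sum(word_counts[label].values())
    score = math.log(class_counts[label] / total_docs)
    for word in text.split():
        if word in vocab:  # ignore words never seen in training
            score += math.log((word_counts[label][word] + 1) / (total_words + len(vocab)))
    return score

def classify(text, model):
    """Pick the class whose profile explains the email best."""
    return max(model[0], key=lambda label: log_joint(text, label, model))

nb_model = train_naive_bayes(TRAIN)
print(classify("claim free money", nb_model))   # spam
print(classify("agenda for lunch", nb_model))   # ham
```

Each class gets its own word profile, and classification compares how well the new email matches each profile, weighted by how common the class is.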

Applications in Cloud Services

Both model types are extensively used in cloud services across AWS, GCP, and Azure:

AWS Services:

- Amazon SageMaker: Supports both generative and discriminative models

- Amazon Comprehend: Uses discriminative models for text analysis

- Amazon Polly: Uses generative models for text-to-speech

GCP Services:

- Vertex AI: Provides tools for both types of models

- Google AutoML: Leverages discriminative models for classification tasks

- Google Cloud Natural Language: Uses various model types for text analysis

Azure Services:

- Azure Machine Learning: Supports both model paradigms

- Azure Cognitive Services: Uses discriminative models for vision and language tasks

- Azure OpenAI Service: Incorporates large generative models

Call to Action: Which cloud provider offers the best tools for your specific modeling needs? Consider experimenting with services from different providers to find the best fit for your use case!

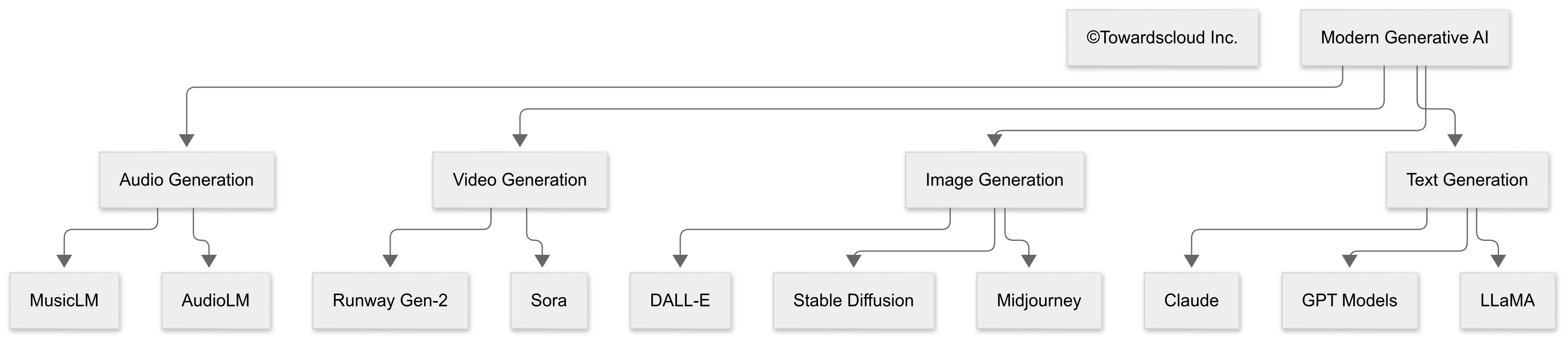

Deep Dive: Generative AI and Modern Applications

The recent explosion of interest in AI has largely been driven by advances in generative models. Let’s look at one influential example:

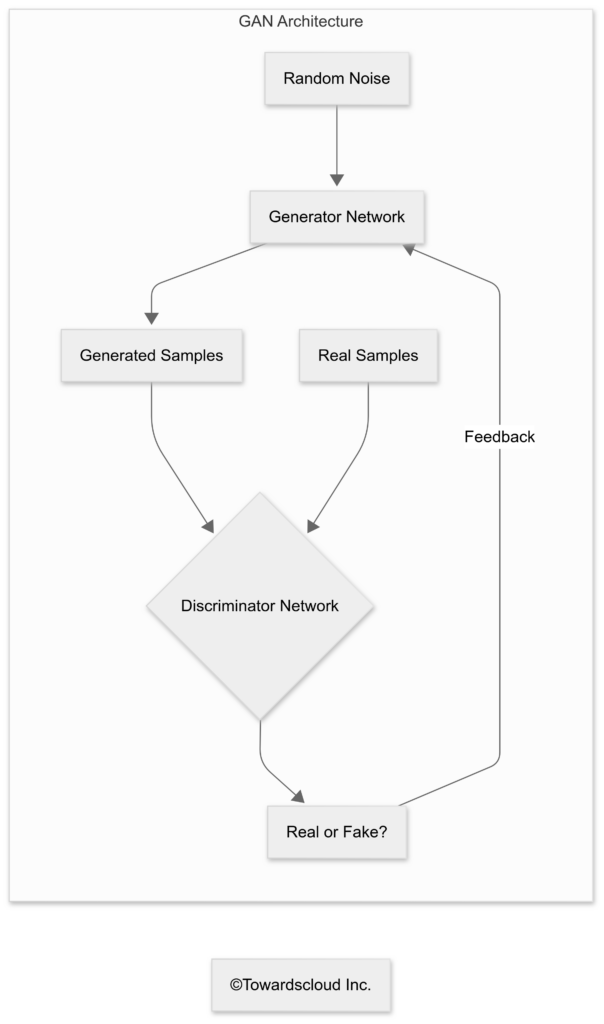

Generative Adversarial Networks (GANs)

GANs represent a fascinating advancement in generative models, consisting of two neural networks—a generator and a discriminator—engaged in a competitive process:

- Generator: Creates fake data samples

- Discriminator: Tries to distinguish fake samples from real ones

- Through training, the generator gets better at creating realistic samples, and the discriminator gets better at spotting fakes

- Ideally, training continues until the generator’s samples are hard to distinguish from real data, although in practice GAN training can be unstable and may not reach that point
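The competitive process can be sketched with a deliberately tiny one-dimensional GAN in plain Python. Everything here is an assumption made for illustration: the generator is a linear map of the noise, the discriminator is a single sigmoid unit, gradients are derived by hand, and the generator uses the common non-saturating loss. Real GANs use deep networks and an autodiff framework.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def discriminator(x, w, c):
    """D(x): estimated probability that x is a real sample."""
    return sigmoid(w * x + c)

def generator(z, a, b):
    """G(z): maps noise z to a fake sample."""
    return a * z + b

def gan_step(params, x_real, z, lr=0.1):
    """One alternating update on a single real sample and a single noise draw."""
    a, b, w, c = params
    # Discriminator update: minimize -[log D(x_real) + log(1 - D(G(z)))].
    x_fake = generator(z, a, b)
    d_real = discriminator(x_real, w, c)
    d_fake = discriminator(x_fake, w, c)
    grad_w = -(1 - d_real) * x_real + d_fake * x_fake
    grad_c = -(1 - d_real) + d_fake
    w, c = w - lr * grad_w, c - lr * grad_c
    # Generator update: minimize -log D(G(z)) against the updated discriminator.
    d_fake = discriminator(x_fake, w, c)
    grad_fake = -(1 - d_fake) * w  # d(loss) / d(x_fake)
    a, b = a - lr * grad_fake * z, b - lr * grad_fake
    return a, b, w, c

start = (1.0, -2.0, 1.0, 0.0)  # (a, b, w, c): fakes start near -2, real data is at 4
a, b, w, c = gan_step(start, x_real=4.0, z=0.5)
print(b > start[1], w > start[2])
```

After this single step the discriminator’s weight grows, so it separates the real sample from the fake more sharply, and the generator’s offset b moves toward the real sample. Repeating the step over many samples is the training loop described above.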

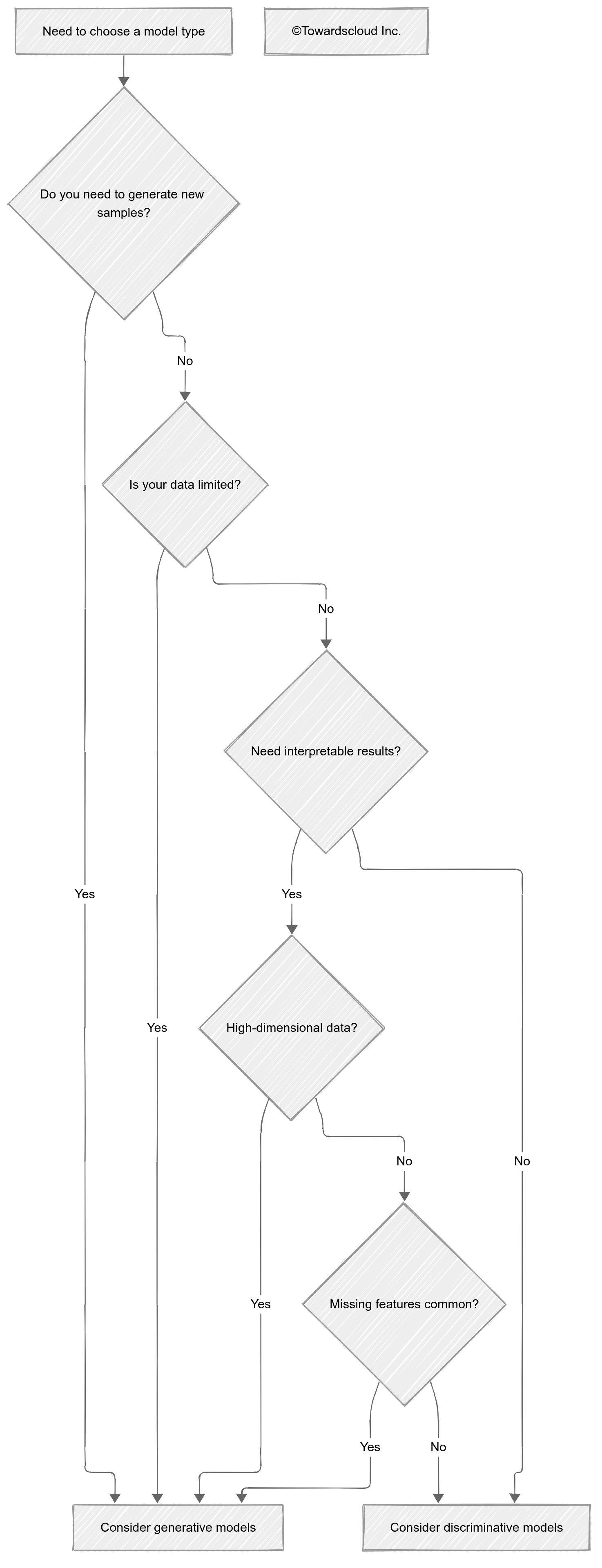

Choosing Between Generative and Discriminative Models

When deciding which approach to use, consider the following factors:

Use Generative Models When:

- You need to generate new, synthetic examples

- You have limited training data

- You need to handle missing features

- You want a model that explains why something is classified a certain way

- You’re working with structured data where the relationships between features matter

Use Discriminative Models When:

- Your sole focus is classification or regression accuracy

- You have large amounts of labeled training data

- All features will be available during inference

- Computational efficiency is important

- You’re working with high-dimensional, unstructured data like images

Call to Action: For your next machine learning project, try implementing both a generative and discriminative approach to the same problem. Compare not just the accuracy, but also training time, interpretability, and ability to handle edge cases!

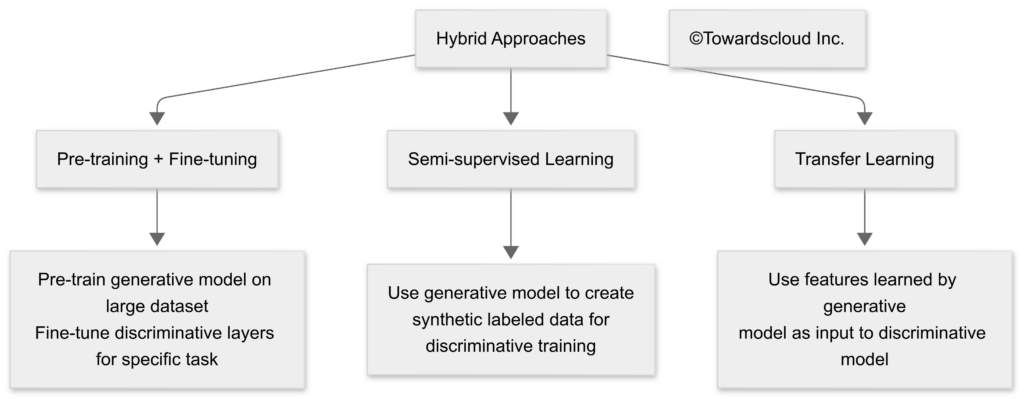

Hybrid Approaches: Getting the Best of Both Worlds

Modern machine learning increasingly blends generative and discriminative approaches. Common patterns include:

- Semi-supervised learning: Using a generative model of the unlabeled data (or synthetic samples drawn from it) to supplement the limited labeled data available to a discriminative model

- Transfer learning: Pre-training generative models on large datasets, then fine-tuning discriminative layers for specific tasks

- Foundation models: Large generative models that can be adapted to specific discriminative tasks through fine-tuning

Implementation in Cloud Environments

Here’s how you might implement these models in different cloud environments:

AWS Implementation:

    # Example: Training a discriminative model (Logistic Regression) on AWS SageMaker

    import sagemaker
    from sagemaker.sklearn.estimator import SKLearn

    # train.py is your own training script; the bucket name is a placeholder.
    estimator = SKLearn(
        entry_point='train.py',
        role=sagemaker.get_execution_role(),
        instance_count=1,
        instance_type='ml.c5.xlarge',
        framework_version='0.23-1'
    )

    estimator.fit({'train': 's3://my-bucket/train-data'})

GCP Implementation:

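    # Example: Training a generative model (Variational Autoencoder) on Vertex AI

    from google.cloud import aiplatform

    # Placeholder project, region, and bucket; custom training jobs need a
    # staging bucket to upload the packaged training script.
    aiplatform.init(
        project="my-project",
        location="us-central1",
        staging_bucket="gs://my-bucket"
    )

    # train_vae.py is your own training script; the container URI is a placeholder.
    job = aiplatform.CustomTrainingJob(
        display_name="vae-training",
        script_path="train_vae.py",
        container_uri="gcr.io/my-project/vae-training:latest",
        requirements=["tensorflow==2.8.0", "numpy==1.22.3"]
    )

    job.run(
        replica_count=1,
        machine_type="n1-standard-8",
        accelerator_type="NVIDIA_TESLA_T4",
        accelerator_count=1
    )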

Azure Implementation:

    # Example: Training a GAN on Azure Machine Learning (azureml-core, SDK v1)

    from azureml.core import Environment, Experiment, ScriptRunConfig, Workspace
    from azureml.core.compute import ComputeTarget

    ws = Workspace.from_config()
    compute_target = ComputeTarget(workspace=ws, name='gpu-cluster')

    # ScriptRunConfig takes an Environment object through `environment`;
    # 'gan-env' is assumed to be registered in the workspace already.
    env = Environment.get(workspace=ws, name='gan-env')

    config = ScriptRunConfig(
        source_directory='./gan-training',
        script='train.py',
        compute_target=compute_target,
        environment=env
    )

    experiment = Experiment(workspace=ws, name='gan-training')
    run = experiment.submit(config)

Conclusion: The Complementary Nature of Both Approaches

Generative and discriminative models represent two fundamental perspectives in machine learning, each with its own strengths and applications. While discriminative models excel at classification tasks with clear boundaries, generative models offer deeper insights into data structure and can create new, synthetic examples.

As cloud technologies continue to evolve, we’re seeing increasing integration of both approaches, with hybrid systems leveraging the strengths of each. The most sophisticated AI systems now use generative models for understanding and creating content, while discriminative components handle specific classification and decision tasks.

The future of machine learning in cloud environments will likely continue this trend of combining approaches, with specialized services making both types of models more accessible and easier to deploy for businesses of all sizes.

Final Call to Action: What challenges are you facing that might benefit from either generative or discriminative approaches? Join our community forum at towardscloud.com/community to discuss your use cases and get insights from other cloud practitioners!

Further Reading

- Deep Learning by Goodfellow, Bengio, and Courville

- AWS Machine Learning Blog

- Google Cloud AI Blog

- Microsoft Azure AI Blog

This article is part of our comprehensive guide to machine learning fundamentals in cloud environments. Check back soon for the next piece!