Welcome to today’s edition of “Word Up! Bot”, where we explore the fascinating world of Generative AI for text generation across the major cloud platforms. Whether you’re a seasoned AI professional or just starting your journey, this guide walks you through the essentials of implementing text generation AI on AWS, GCP, and Azure.

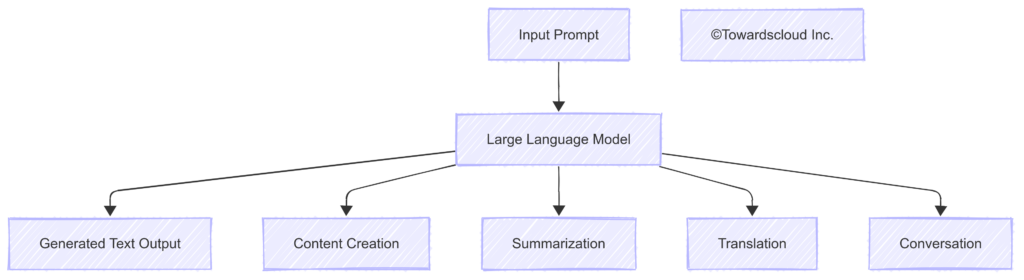

What is Generative AI for Text?

Generative AI for text refers to artificial intelligence systems that can create human-like text based on the input they receive. Think of it as having a smart assistant that can write emails, summarize documents, create content, or even have conversations with your customers.

Understanding Large Language Models (LLMs)

Before diving into cloud implementations, let’s understand the foundation of modern text generation: Large Language Models or LLMs.

What is an LLM?

A Large Language Model (LLM) is a type of artificial intelligence that has been trained on massive amounts of text data. Imagine if someone read virtually everything on the internet, thousands of books, and countless articles – and could use all that knowledge to write new text. That’s essentially what an LLM does.

In simple terms, an LLM is:

- A mathematical system that has “seen” billions or even trillions of words of human-written text

- Capable of recognizing patterns in language and generating new text that follows similar patterns

- Able to “understand” context and produce relevant responses

A Real-World Analogy for LLMs

Think of an LLM like a super-advanced predictive text system on your phone, but on a massive scale:

- Your phone’s predictive text: Suggests the next word based on the few words you’ve typed and your past texting habits

- An LLM: Predicts not just the next word, but can continue generating entire paragraphs, essays, or conversations based on all the text it was trained on

An Example of LLM in Action

Let’s see a simple example of how an LLM works:

Prompt: “Write a short email to reschedule a meeting with a client.”

LLM-Generated Response:

Subject: Request to Reschedule Our Upcoming Meeting

Dear [Client Name],

I hope this email finds you well. I'm writing regarding our meeting scheduled for [original date/time]. Unfortunately, I need to request a rescheduling due to an unexpected conflict that has arisen.

Would any of the following alternative times work for you?

- Thursday, March 12th at 2:00 PM

- Friday, March 13th at 10:00 AM

- Monday, March 16th at 3:30 PM

I apologize for any inconvenience this may cause and appreciate your flexibility. Please let me know which option works best for you, or feel free to suggest another time that better suits your schedule.

Thank you for your understanding.

Best regards,

[Your Name]

The LLM didn’t just complete a sentence – it understood the context of “rescheduling a meeting” and generated a complete, professional email with all the appropriate components.

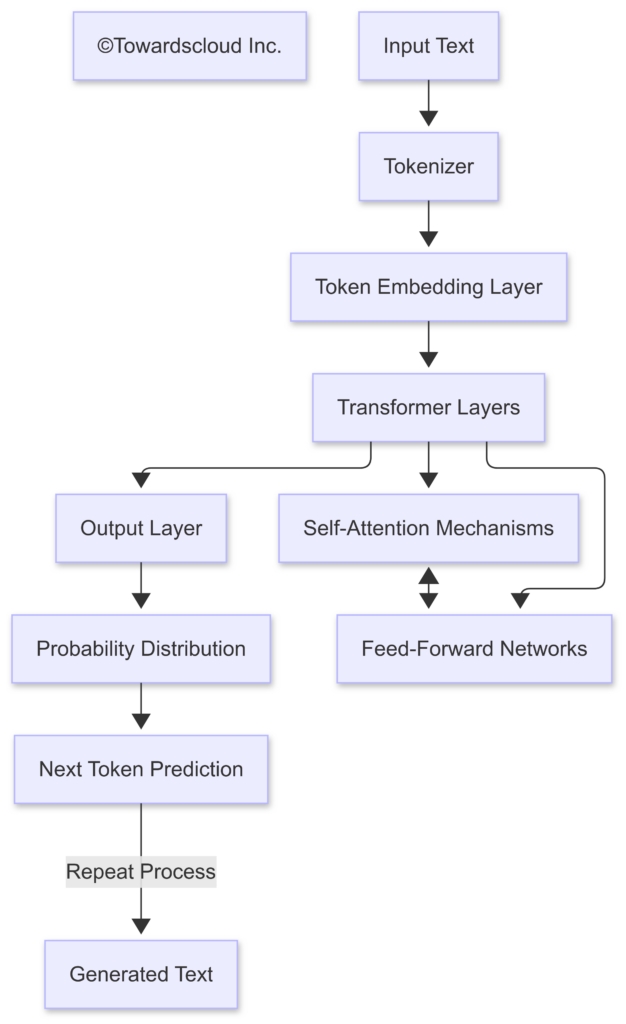

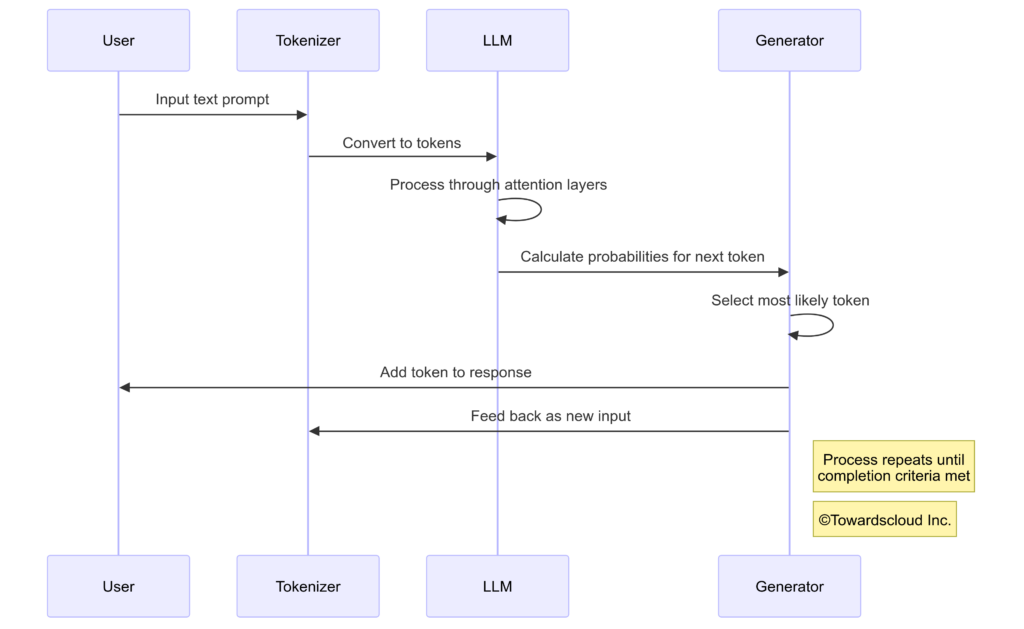

The Technical Side: How LLMs Work

Behind the scenes, LLMs operate through a process that can be broken down into simpler steps:

1. Tokenization: Breaking text into smaller pieces called tokens (which can be words, parts of words, or even characters)

2. Embedding: Converting these tokens into numbers (vectors) that the model can process

3. Processing: Running these numbers through multiple layers of the model, with special mechanisms called “attention” that help the model focus on relevant parts of the input

4. Generation: Calculating a probability for every possible next token and choosing one, either the most likely token or a random pick weighted by those probabilities (settings such as temperature control this)

5. Repetition: Appending the chosen token to the input and repeating steps 3-4 until a complete response is generated or a length limit is reached
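To make the predict, append, and repeat cycle concrete, here is a toy sketch in Python. It is a heavy simplification, not how a production LLM works: a lowercase whitespace split stands in for a real subword tokenizer, and a table of word-pair (bigram) counts stands in for the embedding and attention layers. The function names and the tiny corpus are hypothetical.

```python
from collections import Counter, defaultdict

END = "<end>"  # special token marking the end of a sequence


def tokenize(text):
    # Toy tokenizer: lowercase and split on whitespace.
    # Real LLMs use subword tokenizers (for example, byte-pair encoding).
    return text.lower().split()


def train_bigram_model(corpus):
    # Count how often each token follows each other token.
    # This count table stands in for the neural network of a real LLM.
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = tokenize(sentence) + [END]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def generate(model, prompt, max_new_tokens=10):
    tokens = tokenize(prompt)
    if not tokens:
        return ""
    for _ in range(max_new_tokens):
        followers = model.get(tokens[-1])
        if not followers:
            break  # the model never saw this token, so it cannot continue
        # Greedy decoding: pick the single most frequent next token.
        next_token = followers.most_common(1)[0][0]
        if next_token == END:
            break
        tokens.append(next_token)  # feed the prediction back in and repeat
    return " ".join(tokens)


corpus = [
    "the meeting is moved to friday",
    "the meeting is moved to monday",
    "the meeting is moved to friday",
]
model = train_bigram_model(corpus)
print(generate(model, "The meeting"))
```

Greedy decoding always returns the same continuation here; drawing the next token in proportion to its count instead is what gives real models their variety from run to run.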

Common LLM Examples

Some of the most well-known LLMs include:

- GPT-4 (OpenAI): Powers ChatGPT and many AI applications

- Claude (Anthropic): Known for its helpful, harmless, and honest approach

- Gemini (Google): Google’s advanced model with strong reasoning capabilities

- Llama (Meta): An open-weight model family that developers can download, run locally, or fine-tune

These models vary in size (measured in parameters, which are like the adjustable settings in the model), capabilities, and specialized strengths.
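Parameter count translates directly into hardware requirements, which matters if you plan to run an open model such as Llama yourself. A common rule of thumb is that the weights need roughly (number of parameters × bytes per parameter) of memory. The sketch below applies it to a hypothetical 7-billion-parameter model; it counts the weights only, so real memory use is higher once activations, the attention cache, and framework overhead are included.

```python
def weights_memory_gb(num_parameters, bytes_per_parameter):
    # Memory for the model weights alone, in gigabytes (1 GB = 10**9 bytes).
    return num_parameters * bytes_per_parameter / 1e9


# Common numeric precisions and how many bytes each stores per parameter.
PRECISION_BYTES = {"fp32": 4, "fp16": 2, "int8": 1, "int4": 0.5}

for precision, nbytes in PRECISION_BYTES.items():
    print(f"7B parameters at {precision}: {weights_memory_gb(7e9, nbytes):.1f} GB")
```

It prints 28.0, 14.0, 7.0, and 3.5 GB for fp32, fp16, int8, and int4 respectively, which is why lower-precision (quantized) versions are popular for running models on a single machine.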

💡 CALL TO ACTION: Think about how an LLM might interpret your business’s specialized vocabulary. What industry terms would you need to explain clearly when prompting an LLM?

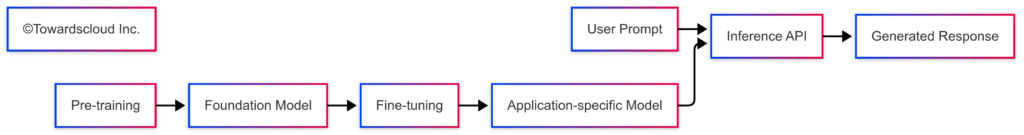

How Does Text Generation Work?

Now that we understand LLMs, let’s explore how they’re used for text generation in practical applications:

- Training: LLMs are trained on massive datasets of text from books, websites, and other sources

- Prompting: You provide a prompt or instruction to the model

- Generation: The model produces text that continues from or responds to your prompt

- Fine-tuning: Models can be customized for specific domains or tasks
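Fine-tuning starts with a dataset of example prompts paired with the responses you want. The sketch below serializes such pairs as JSON Lines (one training example per line) using the chat-style `messages` layout that OpenAI and Azure OpenAI fine-tuning jobs accept. Amazon Bedrock and Vertex AI tuning jobs may expect different schemas, so treat the field names as an assumption to verify against your provider’s documentation; the function name and sample data are hypothetical.

```python
import json


def to_chat_finetune_jsonl(examples, system_prompt):
    """Turn (prompt, ideal_response) pairs into JSON Lines, one example per line."""
    lines = []
    for prompt, ideal_response in examples:
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": prompt},
                {"role": "assistant", "content": ideal_response},
            ]
        }
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n"


# Hypothetical training pairs written in the company's house style
examples = [
    ("Describe our bamboo toothbrush.",
     "A plant-based toothbrush with soft bristles and a fully compostable handle."),
    ("Describe our reusable produce bags.",
     "Lightweight mesh bags that replace single-use plastic at the grocery store."),
]
jsonl = to_chat_finetune_jsonl(examples, "You write product copy for an eco-friendly brand.")
print(jsonl)
```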

Real-World Example: The Content Creation Assistant

Imagine Sarah, a marketing manager at a growing e-commerce company. She needs to create product descriptions, blog posts, and social media content for hundreds of products each month. Manually writing all this content would take weeks.

By implementing a generative AI solution, Sarah can:

- Generate first drafts of all content in minutes instead of weeks

- Maintain consistent brand voice across all materials

- Scale content production without hiring additional writers

- Focus her creative energy on strategy rather than routine writing

💡 CALL TO ACTION: Think about repetitive writing tasks in your organization. Could generative AI help reduce that workload? Share your thoughts in the comments!

Cloud Implementation Comparison

Now, let’s explore how you can implement text generation AI in the three major cloud platforms: AWS, GCP, and Azure.

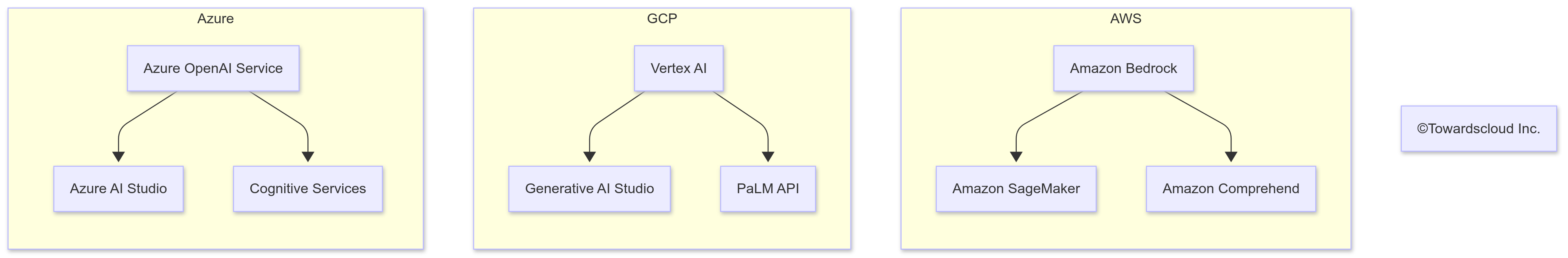

Implementing Text Generation in AWS

AWS offers several services for generative AI text implementation:

- Amazon Bedrock: A fully managed service that provides access to foundation models from Amazon and third parties through a unified API

- Amazon SageMaker: For custom model training and deployment

- Amazon Comprehend: For natural language processing tasks such as sentiment analysis and entity recognition (text analysis rather than text generation)

Let’s look at a simple implementation example using Amazon Bedrock:

```python
import json

import boto3

# Initialize the Bedrock Runtime client (uses your default AWS credentials)
bedrock_runtime = boto3.client(
    service_name='bedrock-runtime',
    region_name='us-west-2',
)

# Define the prompt
prompt = """
Write a product description for an eco-friendly water bottle that keeps
beverages cold for 24 hours and hot for 12 hours.
"""

# Define model parameters.
# Claude v2 uses Anthropic's legacy Text Completions format on Bedrock
# ("\n\nHuman: ...\n\nAssistant:"); newer Claude models require the Messages API.
model_id = "anthropic.claude-v2"
body = json.dumps({
    "prompt": f"\n\nHuman: {prompt.strip()}\n\nAssistant:",
    "max_tokens_to_sample": 500,
    "temperature": 0.7,
    "top_p": 0.9,
})

# Make the API call
response = bedrock_runtime.invoke_model(
    modelId=model_id,
    body=body,
    contentType="application/json",
    accept="application/json",
)

# Process and display the response
response_body = json.loads(response['body'].read())
generated_text = response_body.get('completion')
print(generated_text)
```

Implementing Text Generation in GCP

Google Cloud Platform offers:

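- Vertex AI: Google’s unified ML platform with access to Gemini models (and the older PaLM models, which Google has deprecated)

- Vertex AI Studio (formerly Generative AI Studio): A visual interface for exploring and customizing generative AI models

- Gemini API: For direct access to Google’s latest models

Here’s a simple implementation example using the Vertex AI SDK for Python, calling a Gemini model first and the legacy PaLM API as an alternative: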

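```python
import vertexai
from vertexai.generative_models import GenerationConfig, GenerativeModel
from vertexai.language_models import TextGenerationModel

# Initialize Vertex AI with your own project ID and region
vertexai.init(project="your-project-id", location="us-central1")

# Use a Gemini model. Google retires model versions over time, so replace
# "gemini-pro" with a Gemini model ID currently listed in the Vertex AI docs.
model = GenerativeModel("gemini-pro")

# Define the prompt
prompt = """
Write a product description for an eco-friendly water bottle that keeps
beverages cold for 24 hours and hot for 12 hours.
"""

# Generate content with sampling settings comparable to the PaLM call below
response = model.generate_content(
    prompt,
    generation_config=GenerationConfig(temperature=0.7, max_output_tokens=500, top_p=0.8),
)

# Print the response
print(response.text)

# Alternatively, use the legacy PaLM API. PaLM text models such as text-bison
# have been deprecated in favor of Gemini, so this part may no longer run.
palm_model = TextGenerationModel.from_pretrained("text-bison@001")
palm_response = palm_model.predict(
    prompt,
    temperature=0.7,
    max_output_tokens=500,
    top_k=40,
    top_p=0.8,
)
print(palm_response.text)
```

Implementing Text Generation in Azure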

Microsoft Azure offers:

- Azure OpenAI Service: Provides access to OpenAI’s models like GPT-4 with Azure’s security and compliance features

- Azure AI Foundry (formerly Azure AI Studio): For building, testing, and deploying AI applications

- Azure AI services (formerly Azure Cognitive Services): For specific AI capabilities like language understanding

Here’s a sample implementation using Azure OpenAI Service:

```python
import os

from openai import AzureOpenAI  # requires openai>=1.0

# Set your Azure OpenAI endpoint information
client = AzureOpenAI(
    api_key=os.getenv("AZURE_OPENAI_KEY"),
    api_version="2024-02-01",
    azure_endpoint=os.getenv("AZURE_OPENAI_ENDPOINT"),
)

# Define the prompt
prompt = """
Write a product description for an eco-friendly water bottle that keeps
beverages cold for 24 hours and hot for 12 hours.
"""

# Generate content using Azure OpenAI. GPT-4 is a chat model, so it is called
# through the Chat Completions API rather than the legacy Completions API.
response = client.chat.completions.create(
    model="gpt-4",  # your deployment name, which may differ from the model name
    messages=[{"role": "user", "content": prompt}],
    max_tokens=500,
    temperature=0.7,
    top_p=0.9,
    frequency_penalty=0.0,
    presence_penalty=0.0,
)

# Print the response
print(response.choices[0].message.content.strip())
```

Cost Comparison

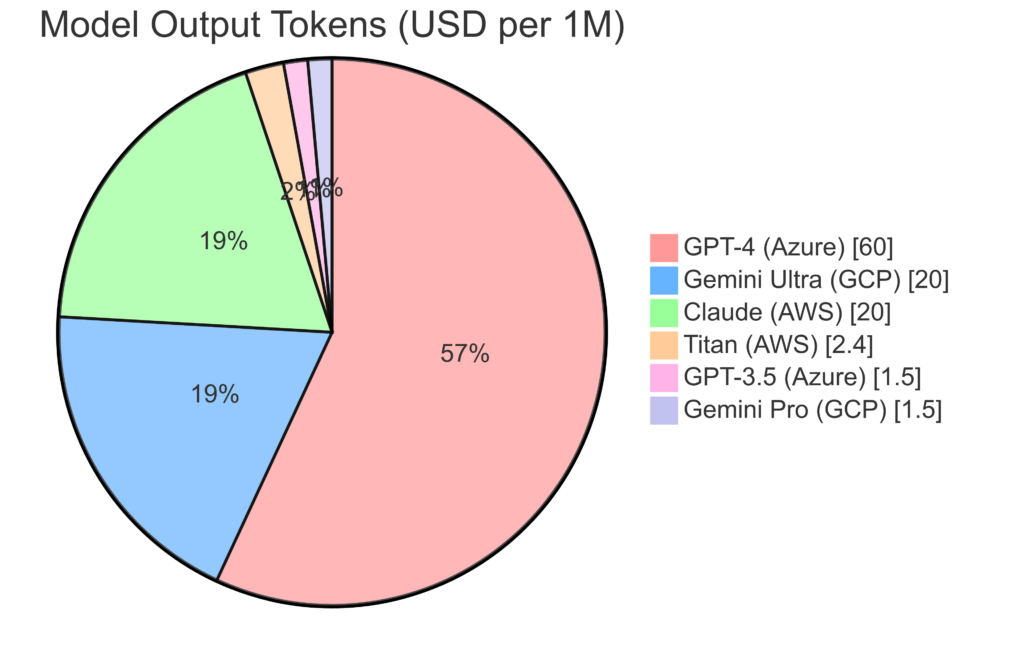

Understanding the cost implications of implementing generative AI across different cloud providers is crucial for budget planning. Here’s an approximate comparison:

| Cloud Provider | Service | Model | Input (USD per 1M tokens) | Output (USD per 1M tokens) | Notes |
|---|---|---|---|---|---|
| AWS | Amazon Bedrock | Claude (Anthropic) | $3-11 | $8-32 | Pricing varies by model size |
| AWS | Amazon Bedrock | Titan | $0.80 | $2.40 | Amazon’s proprietary model |
| GCP | Vertex AI | Gemini Pro | $0.50 | $1.50 | Good balance of performance and cost |
| GCP | Vertex AI | Gemini Ultra | $5 | $20 | Most capable model, higher cost |
| Azure | Azure OpenAI | GPT-4 | $30 | $60 | Premium model with highest capabilities |
| Azure | Azure OpenAI | GPT-3.5 Turbo | $0.50 | $1.50 | Cost-effective for many applications |

Note: Prices are approximate as of March 2025 and may vary based on region, volume discounts, and other factors. Always check the official pricing pages for the most current information: AWS Pricing, GCP Pricing, Azure Pricing.

💡 CALL TO ACTION: Calculate your estimated monthly token usage based on your use cases. Which cloud provider would be most cost-effective for your specific needs?
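If you want a head start on that calculation, the sketch below turns a monthly request volume into token counts and multiplies them by the per-1M-token prices from the table above. The workload numbers are hypothetical, the Claude row is left out because the table gives a range, and all prices should be checked against the providers’ pricing pages before you rely on the result.

```python
# Approximate USD prices per 1M tokens as (input, output), copied from the table above.
PRICES_PER_1M = {
    "Titan (Amazon Bedrock)": (0.80, 2.40),
    "Gemini Pro (Vertex AI)": (0.50, 1.50),
    "Gemini Ultra (Vertex AI)": (5.00, 20.00),
    "GPT-4 (Azure OpenAI)": (30.00, 60.00),
    "GPT-3.5 Turbo (Azure OpenAI)": (0.50, 1.50),
}


def monthly_tokens(requests_per_month, input_tokens_per_request, output_tokens_per_request):
    """Total input and output tokens for one month of traffic."""
    return (requests_per_month * input_tokens_per_request,
            requests_per_month * output_tokens_per_request)


def monthly_cost(input_tokens, output_tokens, prices):
    """Cost in USD from token counts and an (input, output) price pair per 1M tokens."""
    input_price, output_price = prices
    return input_tokens / 1_000_000 * input_price + output_tokens / 1_000_000 * output_price


# Hypothetical workload: 20,000 requests a month, ~300 prompt and ~500 generated tokens each
tokens_in, tokens_out = monthly_tokens(20_000, 300, 500)
ranked = sorted(PRICES_PER_1M.items(), key=lambda item: monthly_cost(tokens_in, tokens_out, item[1]))
for model, prices in ranked:
    print(f"{model}: ${monthly_cost(tokens_in, tokens_out, prices):,.2f} per month")
```

Under these assumptions (6M input and 10M output tokens), GPT-4 comes to about $780 a month versus about $18 for Gemini Pro or GPT-3.5 Turbo, a spread of more than 40 times, so it pays to test whether a cheaper model meets your quality bar.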

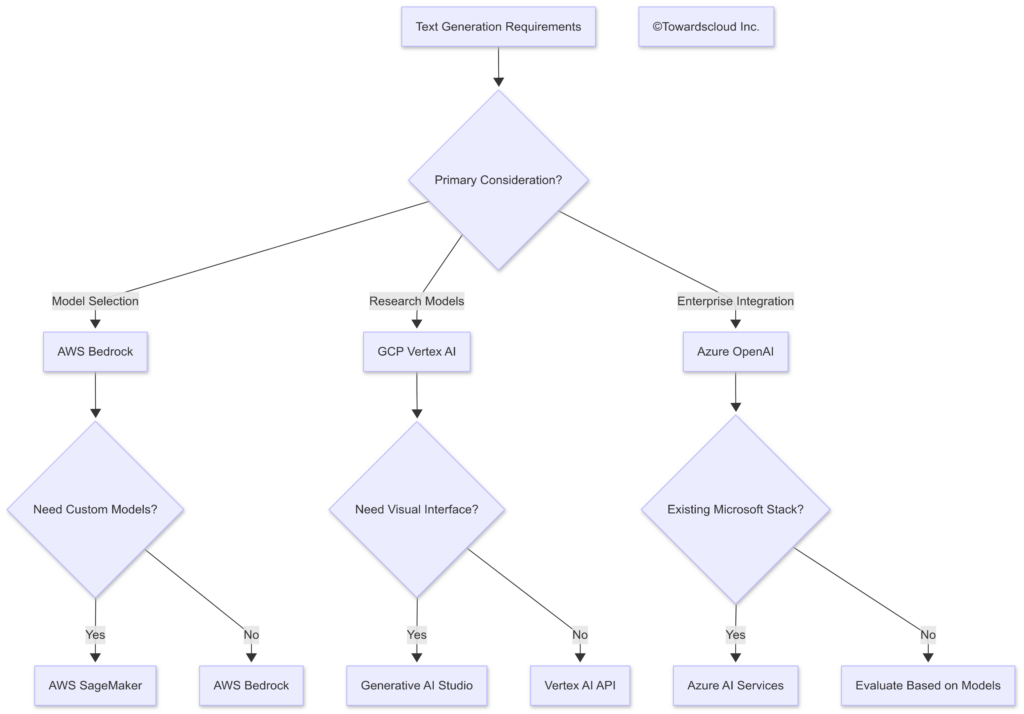

Key Differences Between Cloud Providers

While all three major cloud providers offer text generation capabilities, there are important differences to consider:

AWS Advantages

- Model Variety: Access to multiple model providers (Anthropic, Cohere, AI21, Meta, etc.) through a single API

- Deep Integration: Seamless integration with other AWS services

- Customization: Strong options for model customization via SageMaker

GCP Advantages

- Google’s Research Expertise: Access to state-of-the-art models developed by Google DeepMind and Google Research

- Vertex AI: Comprehensive MLOps platform with strong model monitoring and management

- Cost-Effectiveness: Generally competitive pricing, especially for Google’s models

Azure Advantages

- OpenAI Partnership: Managed, first-party access to OpenAI’s flagship models such as GPT-4 through Azure OpenAI Service

- Enterprise Focus: Strong security, compliance, and governance features

- Microsoft Ecosystem: Tight integration with Microsoft products and services
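One practical difference is visible in the three code examples earlier in this post: the same generation settings go by different names on each platform. If you prototype on more than one cloud, a small lookup table like the sketch below keeps shared settings in one place. The provider keys are hypothetical labels; the parameter names come from this post’s examples (Bedrock’s Claude v2 request body, the Vertex AI SDK, and Azure OpenAI), and other model families or APIs, such as Bedrock’s Converse API, use their own spellings.

```python
# Provider-specific names for three common generation settings, as used in this post's examples.
# The provider keys are hypothetical labels, not official identifiers.
PARAM_NAMES = {
    "bedrock_claude_v2": {"max_tokens": "max_tokens_to_sample", "temperature": "temperature", "top_p": "top_p"},
    "vertex_ai": {"max_tokens": "max_output_tokens", "temperature": "temperature", "top_p": "top_p"},
    "azure_openai": {"max_tokens": "max_tokens", "temperature": "temperature", "top_p": "top_p"},
}


def to_provider_params(provider, max_tokens, temperature, top_p):
    """Translate one set of generation settings into a provider's parameter names."""
    names = PARAM_NAMES[provider]
    settings = {"max_tokens": max_tokens, "temperature": temperature, "top_p": top_p}
    return {names[key]: value for key, value in settings.items()}


for provider in PARAM_NAMES:
    print(provider, to_provider_params(provider, max_tokens=500, temperature=0.7, top_p=0.9))
```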

Implementation Considerations

When implementing text generation AI in any cloud platform, consider these key factors:

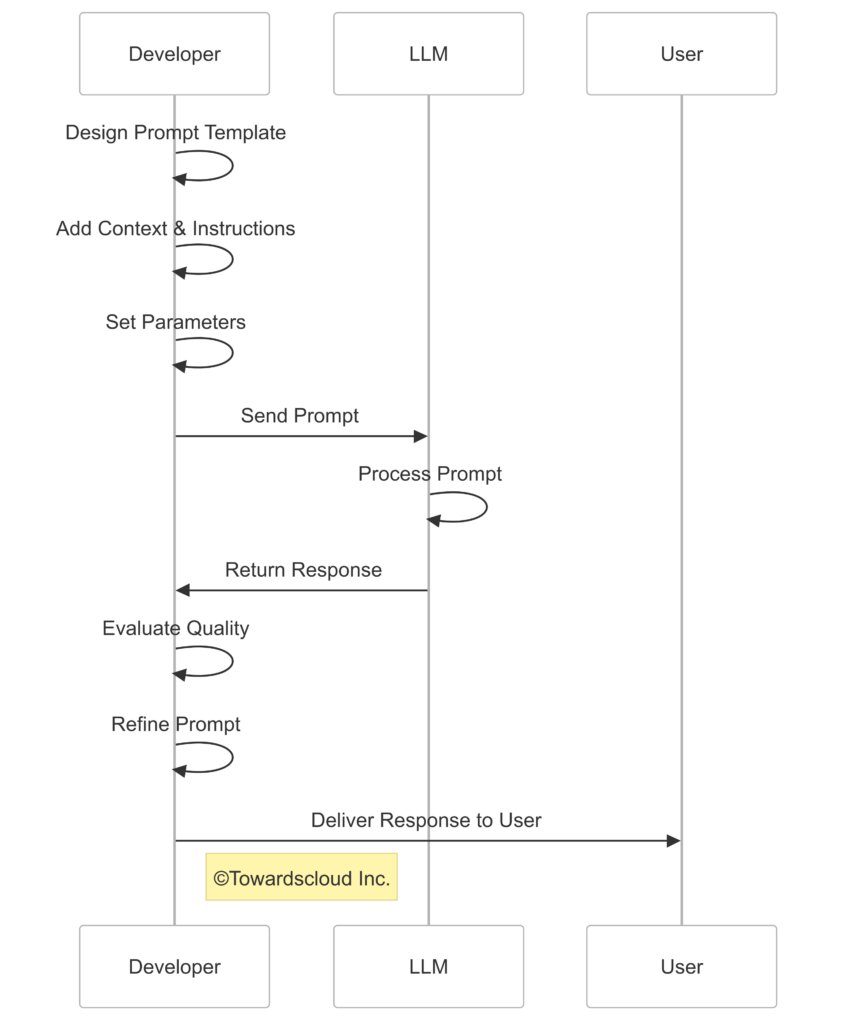

1. Prompt Engineering

The way you formulate prompts significantly impacts the quality of generated text. Good prompt engineering involves:

- Being specific and clear in your instructions

- Providing examples of desired outputs

- Setting context appropriately

- Controlling parameters like temperature and max tokens
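Two of these techniques are easy to see in code. The hypothetical `build_few_shot_prompt` helper below assembles context, a worked example, and the new request into one prompt, and `softmax_with_temperature` shows, in simplified form, what the temperature setting does to a model’s next-token probabilities. Cloud APIs apply temperature internally; you only pass the number, as with `temperature=0.7` in the platform examples.

```python
import math


def build_few_shot_prompt(instruction, examples, new_input):
    """Combine context, worked examples, and the new request into a single prompt."""
    lines = [instruction.strip(), ""]
    for example_input, example_output in examples:
        lines += [f"Input: {example_input}", f"Output: {example_output}", ""]
    lines += [f"Input: {new_input}", "Output:"]
    return "\n".join(lines)


def softmax_with_temperature(logits, temperature):
    """Turn raw next-token scores into probabilities; lower temperature sharpens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtracting the max avoids overflow in math.exp
    weights = [math.exp(s - peak) for s in scaled]
    total = sum(weights)
    return [w / total for w in weights]


prompt = build_few_shot_prompt(
    "You write one-sentence product descriptions in a warm, upbeat brand voice.",
    [("Bamboo toothbrush",
      "Give your smile a planet-friendly upgrade with soft bristles and a compostable handle.")],
    "Eco-friendly water bottle that keeps drinks cold for 24 hours and hot for 12 hours",
)
print(prompt)

# Hypothetical scores for three candidate next tokens
for temperature in (0.2, 0.7, 1.5):
    probs = softmax_with_temperature([2.0, 1.0, 0.1], temperature)
    print(temperature, [round(p, 3) for p in probs])
```

At low temperature almost all probability lands on the top-scoring token, giving predictable output; higher values spread it out, giving more varied (and riskier) text.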

2. Model Selection

Each model has different capabilities, costs, and performance characteristics:

- Base Models: Good for general tasks, lower cost

- Specialized Models: Better for specific domains or tasks

- Larger Models: Higher quality output but more expensive

- Smaller Models: Faster, cheaper, but potentially less capable

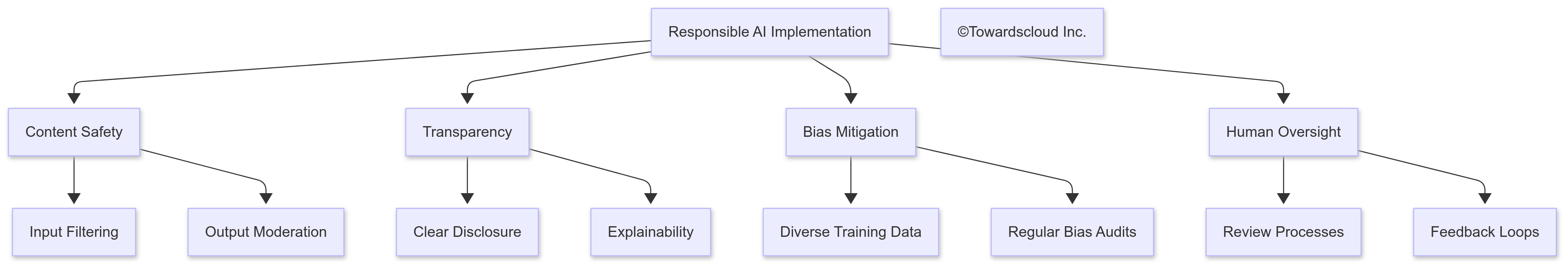

3. Responsible AI Implementation

Implementing generative AI responsibly is crucial:

- Content Filtering: Implement filters to prevent harmful content

- Human Review: Maintain human oversight for sensitive applications

- Bias Mitigation: Be aware of and address potential biases in generated content

- Transparency: Be clear with users when they’re interacting with AI-generated content
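The sketch below shows how content filtering, human review, and transparency can fit together as a gate in front of publication. It is deliberately simple and entirely hypothetical: keyword matching is a weak filter, and in practice you would lean on managed options such as Amazon Bedrock Guardrails, Azure AI Content Safety, or Vertex AI’s safety filters, keeping a gate like this for your own business rules.

```python
from dataclasses import dataclass

AI_DISCLOSURE = "This content was generated with the help of AI."


@dataclass
class ReviewDecision:
    action: str  # "block", "human_review", or "publish"
    reason: str


def review_generated_text(text, blocked_terms, sensitive_use_case):
    """Decide what happens to a piece of generated text before anyone sees it."""
    lowered = text.lower()
    for term in blocked_terms:
        if term.lower() in lowered:
            return ReviewDecision("block", f"contains blocked term: {term!r}")
    if sensitive_use_case:
        return ReviewDecision("human_review", "sensitive use case requires human sign-off")
    return ReviewDecision("publish", "passed automated checks")


def label_for_publication(text):
    """Append a disclosure so readers know the content is AI-generated."""
    return f"{text}\n\n{AI_DISCLOSURE}"


decision = review_generated_text("Keeps drinks cold for 24 hours.", ["guaranteed cure"], False)
print(decision)
if decision.action == "publish":
    print(label_for_publication("Keeps drinks cold for 24 hours."))
```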

💡 CALL TO ACTION: Conduct an initial assessment of your organization’s AI readiness. What governance structures would you need to implement to ensure responsible AI usage?

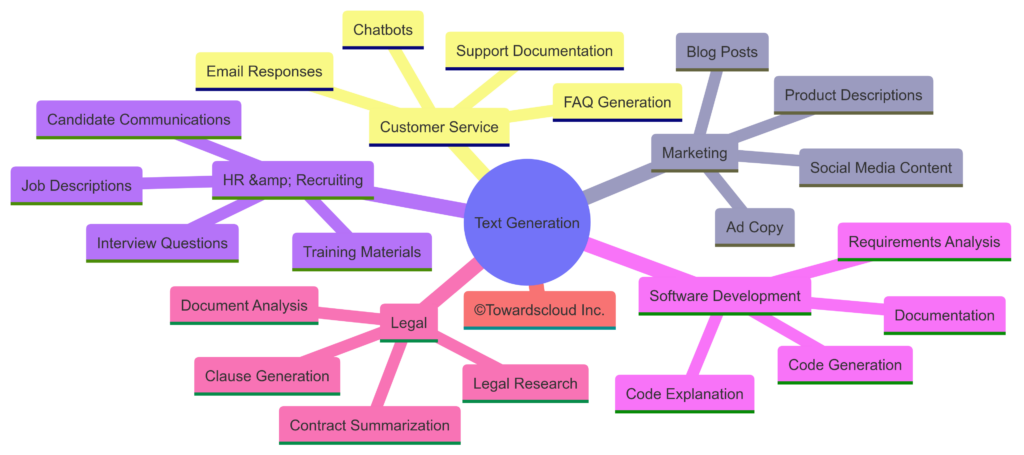

Real-World Use Cases

Let’s explore some practical applications of text generation AI across different industries:

Customer Service

Implement AI-powered chatbots that can:

- Answer frequent customer questions 24/7

- Escalate complex issues to human agents

- Generate personalized responses to customer inquiries

- Draft email responses for customer service representatives

Content Marketing

Use generative AI to:

- Create first drafts of blog posts and articles

- Generate product descriptions at scale

- Produce social media content

- Adapt existing content for different audiences or platforms
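Sarah’s scale problem from earlier largely comes down to reusing one prompt template across a product catalog, which also helps keep the brand voice consistent. The sketch below, with a hypothetical template, brand-voice string, and catalog, builds one prompt per product; each prompt would then go to whichever model API you chose, such as the AWS, GCP, or Azure examples above.

```python
# Hypothetical brand guidelines shared by every prompt, keeping the voice consistent
BRAND_VOICE = "friendly, concise, and focused on sustainability"

PROMPT_TEMPLATE = (
    "Write a product description of about {length} words in a {voice} tone.\n"
    "Product: {name}\n"
    "Key features: {features}"
)


def build_description_prompts(products, voice=BRAND_VOICE, length=80):
    """Create one prompt per product from a shared template."""
    return [
        PROMPT_TEMPLATE.format(
            length=length,
            voice=voice,
            name=product["name"],
            features="; ".join(product["features"]),
        )
        for product in products
    ]


catalog = [
    {"name": "Water bottle",
     "features": ["eco-friendly", "keeps drinks cold for 24 hours", "keeps drinks hot for 12 hours"]},
    {"name": "Bamboo toothbrush", "features": ["compostable handle", "soft bristles"]},
]
for prompt in build_description_prompts(catalog):
    print(prompt, end="\n\n")
```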

Software Development

Assist developers by:

- Generating code snippets and documentation

- Explaining complex code

- Converting requirements into pseudocode

- Suggesting bug fixes and optimizations

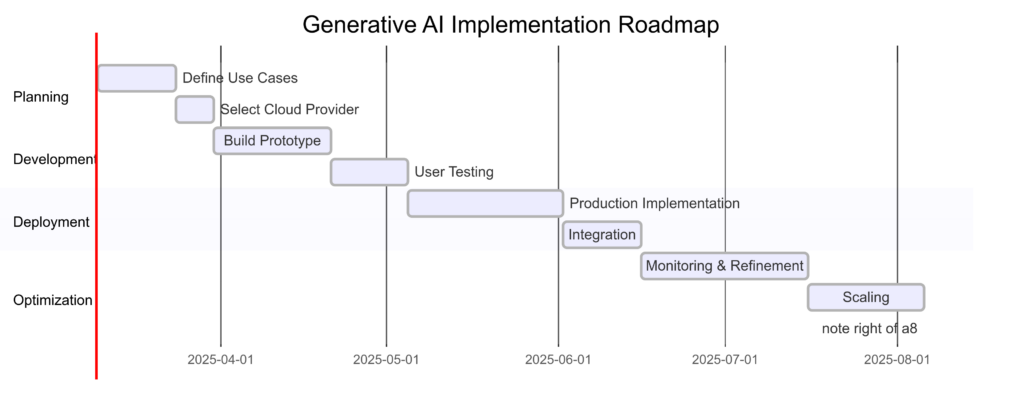

Getting Started: Implementation Roadmap

Here’s a step-by-step guide to implementing generative AI text solutions in your organization:

1. Define Your Use Case
   - Identify specific problems to solve
   - Establish clear success metrics
   - Determine required capabilities

2. Select Your Cloud Provider
   - Consider existing infrastructure
   - Evaluate model availability and features
   - Compare pricing for your expected usage

3. Prototype and Test
   - Build a small-scale proof of concept
   - Test with real data and scenarios
   - Gather feedback from stakeholders

4. Implement a Production Solution
   - Develop integration with existing systems
   - Establish monitoring and evaluation processes
   - Create fallback mechanisms

5. Monitor, Learn, and Improve
   - Track performance metrics
   - Gather user feedback
   - Continuously refine prompts and parameters
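Two roadmap items, fallback mechanisms and performance tracking, can share one small wrapper. The hypothetical `generate_with_fallback` function below tries a primary model, falls back to a secondary one on any error, and records each attempt’s outcome and latency. It assumes each provider is a plain function that takes a prompt and returns text; a production version would add retries with backoff and catch your SDK’s specific exceptions.

```python
import time


def generate_with_fallback(prompt, providers, log):
    """Try each (name, generate_fn) pair in order and return (name, text) from the first success.

    Every attempt is appended to `log` with its latency, so the records can feed monitoring.
    """
    for name, generate_fn in providers:
        start = time.perf_counter()
        try:
            text = generate_fn(prompt)
        except Exception as exc:  # in production, catch your SDK's specific error types
            log.append({"provider": name, "ok": False, "error": repr(exc),
                        "seconds": time.perf_counter() - start})
            continue
        log.append({"provider": name, "ok": True, "seconds": time.perf_counter() - start})
        return name, text
    raise RuntimeError("all providers failed")


# Hypothetical stand-ins for real model calls; replace them with SDK calls like those above
def primary_model(prompt):
    raise TimeoutError("primary model timed out")


def backup_model(prompt):
    return f"Draft response for: {prompt}"


attempts = []
name, text = generate_with_fallback(
    "Summarize this week's support tickets.",
    [("primary", primary_model), ("backup", backup_model)],
    attempts,
)
print(name, text)
print(attempts)
```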

💡 CALL TO ACTION: Which step in the implementation roadmap do you anticipate being the most challenging for your organization? Share your thoughts in the comments section below!

Conclusion

Generative AI for text is transforming how businesses create and interact with content across all industries. By understanding the capabilities, costs, and implementation considerations of AWS, GCP, and Azure solutions, you can make informed decisions about integrating this powerful technology into your workflows.

The right approach depends on your specific needs, existing infrastructure, and technical expertise. Whether you choose Amazon Bedrock’s model variety, GCP Vertex AI’s research-backed models, or Azure OpenAI’s enterprise features, the potential to revolutionize your text-based processes is substantial.

💡 FINAL CALL TO ACTION: Ready to start your generative AI journey? Schedule a 30-minute brainstorming session with your team to identify your first potential use case, then use the code examples in this blog to create a simple prototype. Let us know how it goes!

Stay tuned for our next blog post, where we’ll dive into more interesting topics and explore how to customize these powerful AI tools for your specific industry needs.