Deep Learning Basics: Layers and Learning Rates

Have you ever wondered how your phone recognizes your face to unlock, or how Netflix seems to know exactly what movie you’ll binge next? The secret sauce behind these modern marvels is deep learning, a cutting-edge branch of artificial intelligence (AI) that’s transforming the world around us. At its heart, deep learning relies on two key ingredients: layers that process data like a high-tech assembly line and learning rates that fine-tune how fast a model learns. Whether you’re new to tech or an AWS/GCP-certified pro, this blog will break it all down with real-world examples, diagrams, and interactive fun. At TowardsCloud, we’re all about making complex ideas accessible—so grab a coffee, and let’s explore the magic of deep learning together!

What is Deep Learning?

Deep learning is a branch of machine learning, loosely inspired by how our brains learn from experience. It uses neural networks (those digital brains we covered in our last blog) but takes them to the next level by stacking many layers of artificial neurons. These layers work together to spot patterns, make predictions, and solve problems that shallower networks might struggle with, like translating languages or identifying objects in photos.

Real-World Example: Think of your favorite music app suggesting a playlist that’s just right. Deep learning analyzes your listening habits, song tempos, and even lyrics to nail that perfect vibe.


What’s the coolest thing deep learning has done for you lately? Share your story in the comments!

Layers in Deep Learning

What Are Layers?

In deep learning, layers are like the stages of a factory assembly line. Raw data—like the pixels of a selfie—enters at one end, gets processed step-by-step through various layers, and comes out as a polished result—like “Yep, that’s you!” Each layer has a specific job, refining the data as it moves along.

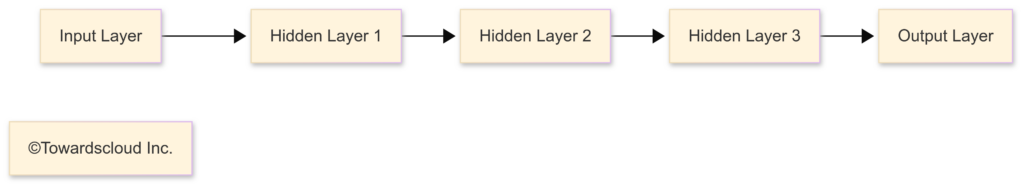

- Input Layer: The starting gate where raw data (e.g., image pixels or sound waves) enters the network.

- Hidden Layers: The heavy lifters! These layers dig into the data, spotting features like edges, shapes, or even emotions in a voice. The “deep” in deep learning comes from having lots of these hidden layers—sometimes dozens or even hundreds!

- Output Layer: The finish line, delivering the final answer—like “This is a dog” or “Play this song.”

Real-World Analogy: Picture a bakery making your favorite cake. The input layer is the raw ingredients (flour, eggs, sugar), hidden layers mix and bake them into delicious layers of sponge and frosting, and the output layer serves up the finished cake—yum!


Example in Action: When you upload a photo to social media, deep learning layers might first detect edges (hidden layer 1), then shapes like eyes or a nose (hidden layer 2), and finally decide it’s your friend’s face (output layer) to tag them automatically.
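To make the assembly line concrete, here is a minimal sketch of data flowing through an input layer, two hidden layers, and an output layer. It uses only plain Python. The weights, the three "pixel" values, and the function names (`dense`, `relu`, `forward`) are made up for illustration; a real model would learn its weights during training and would be far larger.

```python
import math

def relu(values):
    """Hidden-layer activation: keep positives, zero out negatives."""
    return [max(0.0, v) for v in values]

def sigmoid(value):
    """Squash a number into the range (0, 1) so it reads like a probability."""
    return 1.0 / (1.0 + math.exp(-value))

def dense(inputs, weights, biases):
    """One fully connected layer: a weighted sum of the inputs per neuron."""
    return [
        sum(w * x for w, x in zip(neuron_weights, inputs)) + b
        for neuron_weights, b in zip(weights, biases)
    ]

def forward(pixels):
    """Pass data through input -> hidden 1 -> hidden 2 -> output."""
    # Hypothetical, hand-picked weights; a real model learns these in training.
    h1 = relu(dense(pixels, [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1]))
    h2 = relu(dense(h1, [[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]))
    score = dense(h2, [[0.7, 0.3]], [-0.2])[0]
    return h1, h2, sigmoid(score)

h1, h2, probability = forward([0.9, 0.1, 0.4])  # three made-up "pixel" values
print("hidden layer 1:", h1)
print("hidden layer 2:", h2)
print("output probability:", round(probability, 3))
```

Each layer only sees the output of the layer before it, which is why early layers end up handling simple features and later layers handle combinations of them.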


Can you think of another process that works like layers in deep learning? Drop your analogy in the comments!

Learning Rates in Deep Learning

What’s a Learning Rate?

The learning rate is like the throttle on a car—it controls how fast or slow a deep learning model adjusts its internal settings (called weights) to get better at its job. Too fast, and it might crash into a bad solution; too slow, and it’ll take forever to arrive. Finding the right learning rate is key to training a model that’s accurate and efficient.

- High Learning Rate: Big adjustments, fast learning—but it might overshoot the perfect spot, like leaping over a finish line.

- Low Learning Rate: Tiny tweaks, slow and steady—but it could stall out before reaching the goal.

Real-World Analogy: Imagine learning to ride a bike. If you pedal too hard (high learning rate), you might wobble and fall. If you go too slow (low learning rate), you might never balance. The right pace helps you cruise smoothly.
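The same effect shows up in a tiny experiment. The sketch below runs plain gradient descent on a toy loss, `(w - 3) ** 2`, with three different learning rates. The loss, the starting point, and the specific rates are assumptions chosen to make the behavior easy to see; they are not recommended values for real models.

```python
def descend(learning_rate, steps=20, start=0.0, target=3.0):
    """Minimize the toy loss (w - target)**2 with plain gradient descent."""
    w = start
    for _ in range(steps):
        gradient = 2.0 * (w - target)   # slope of the loss at the current w
        w -= learning_rate * gradient   # the learning rate scales each step
    return w

for rate in (0.001, 0.1, 1.1):
    w = descend(rate)
    print(f"learning rate {rate}: w = {w:.3f}, distance from target = {abs(w - 3.0):.3f}")
```

With the smallest rate the weight barely moves in 20 steps, with the middle rate it lands close to the target, and with the largest rate every step overshoots by more than the last, so the weight drifts further away instead of settling.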

Here’s a table comparing learning rate strategies:

| Strategy | Description | Pros | Cons |
|---|---|---|---|
| Fixed Learning Rate | Same rate throughout training | Easy to set up | May not adapt well |
| Adaptive Learning Rate | Adjusts based on progress | Flexible, often faster | Trickier to tune |
| Scheduled Learning Rate | Decreases over time | Avoids overshooting | Needs careful planning |

Example in Action: Training a model to spot spam emails might start with a higher learning rate to quickly learn obvious clues (like “FREE MONEY!”), then slow down with a scheduled rate to fine-tune for subtle hints (like sneaky phishing links).
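Here is a rough sketch of what the fixed and scheduled rows of the table can look like in code. The function names, the base rate of 0.1, and the decay settings are illustrative assumptions, not defaults from any particular library; frameworks ship their own scheduler classes with their own parameters.

```python
def fixed_rate(epoch, base=0.1):
    """Fixed strategy: the same rate at every epoch."""
    return base

def step_decay(epoch, base=0.1, drop=0.5, every=10):
    """Scheduled strategy: halve the rate every `every` epochs."""
    return base * drop ** (epoch // every)

def exponential_decay(epoch, base=0.1, decay=0.95):
    """Scheduled strategy: shrink the rate a little after every epoch."""
    return base * decay ** epoch

for epoch in (0, 10, 20, 30):
    print(
        f"epoch {epoch}: fixed={fixed_rate(epoch)}, "
        f"step={step_decay(epoch)}, exponential={exponential_decay(epoch):.4f}"
    )
```

Both schedules start at the same rate as the fixed strategy and then shrink it, which matches the spam example: big steps for the obvious clues first, smaller steps for the subtle ones later.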


Ever tried tweaking something to find the perfect pace—like cooking or gaming? Tell us about it below!

How Layers and Learning Rates Team Up

Deep learning models learn by passing data through layers (forward propagation) and tweaking weights based on mistakes (backpropagation). The learning rate decides how big those tweaks are. Let’s see it in action with a fun example: teaching a model to recognize a handwritten “7.”

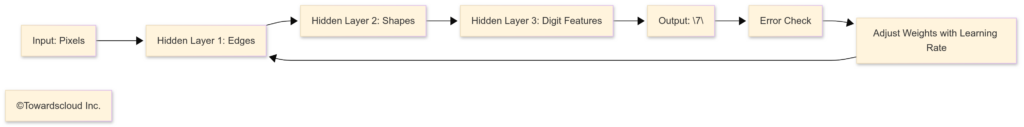

Forward Propagation:

- Input Layer: Takes the pixel values of the “7” image.

- Hidden Layers: First layer spots the vertical line, next layer catches the top slant, and deeper layers confirm it’s a digit.

- Output Layer: Says, “This is a 7!” (hopefully).

Backpropagation:

- If it guesses “1” instead, the model calculates the error.

- The learning rate determines how much to adjust the weights—like turning up the “slant” detector and toning down the “straight line” one.
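The forward and backward steps above can be sketched with a single artificial neuron. This is a deliberately tiny stand-in for a real digit recognizer: the two input features (slant strength and straight-line strength), the two training examples, and the learning rate of 0.5 are all hypothetical values picked for illustration.

```python
import math

def predict(weights, features):
    """Forward pass: weighted sum of the features, squashed to a probability."""
    total = sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-total))

def train_step(weights, features, label, learning_rate):
    """One backward pass for a single sigmoid neuron with cross-entropy loss."""
    error = predict(weights, features) - label   # positive if the guess is too high
    gradients = [error * x for x in features]    # each weight's share of the error
    return [w - learning_rate * g for w, g in zip(weights, gradients)]

# Hypothetical features: [slant strength, straight-line strength]; label 1 means "7".
examples = [([1.0, 0.6], 1), ([0.1, 1.0], 0)]
weights = [0.0, 0.0]
for _ in range(200):
    for features, label in examples:
        weights = train_step(weights, features, label, learning_rate=0.5)

print("weights [slant, straight]:", [round(w, 2) for w in weights])
print("P(7) for the 7-like input:", round(predict(weights, [1.0, 0.6]), 2))
print("P(7) for the 1-like input:", round(predict(weights, [0.1, 1.0]), 2))
```

After training, the slant weight ends up positive and the straight-line weight ends up negative, which is the "turn up one detector, tone down the other" adjustment described above. A real network does the same thing across many weights in many layers.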


Real-World Example: When your fitness tracker learns to tell running from walking, layers spot patterns in motion data, and the learning rate adjusts how quickly it homes in on the difference: fast enough to be useful, slow enough to be accurate.

Let’s test your understanding:

- What do hidden layers do in a deep learning model?

- What happens if the learning rate is too high?

Deep Learning in Action

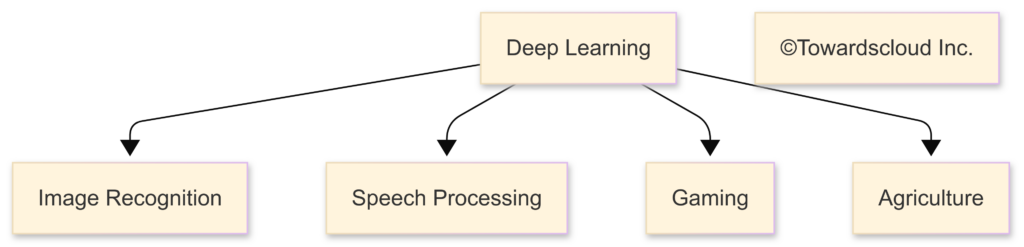

Deep learning shines across industries, thanks to its layers and learning rates:

- Image Recognition: Layers spot faces in your photos, and a well-chosen learning rate during training helps the model settle on weights that recognize them reliably.

- Speech Processing: Virtual assistants like Alexa use layers to parse your voice, and a carefully tuned learning rate helps the underlying models train on many accents without overshooting.

- Gaming: Deep learning powers AI opponents in video games, adjusting difficulty dynamically to keep you challenged.

- Agriculture: Farmers use it to analyze drone footage, with layers spotting signs of crop health and a well-tuned learning rate helping the model train toward accurate harvest-time predictions.



Which deep learning use blows your mind the most? Share your favorite in the comments!

Challenges and What’s Next

Challenges

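- Overfitting: A model with many layers and too little training data can memorize its training examples instead of learning patterns that carry over to new data, and a poorly tuned learning rate can make training even less reliable.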

- Tuning Trouble: Picking the right learning rate is an art—too high or too low, and your model’s off track.
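One common way to take some of the guesswork out of tuning is a simple sweep: try a handful of learning rates spread across several orders of magnitude and keep the one that ends with the lowest loss. The sketch below does this on a toy loss, `(w - 3) ** 2`. The candidate rates and the toy problem are assumptions for illustration; on a real model you would compare loss on held-out validation data rather than training loss.

```python
def final_loss(learning_rate, steps=50):
    """Train on the toy loss (w - 3)**2 and report where the loss ends up."""
    w = 0.0
    for _ in range(steps):
        w -= learning_rate * 2.0 * (w - 3.0)
    return (w - 3.0) ** 2

# Candidate rates spread across several orders of magnitude.
candidates = [0.0001, 0.001, 0.01, 0.1, 1.0, 1.5]
results = {rate: final_loss(rate) for rate in candidates}
best_rate = min(results, key=results.get)

for rate, loss in results.items():
    print(f"learning rate {rate}: final loss = {loss:.6g}")
print("best rate in this sweep:", best_rate)
```

The rates at the low end barely reduce the loss, the ones at the high end bounce around or blow up, and the sweet spot sits in between.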

The Future

Smarter algorithms (like adaptive learning rates) and cloud platforms (AWS, GCP, Azure) are making deep learning faster and more accessible. Imagine real-time models that learn on the fly—pretty cool, right?

Try It Yourself on the Cloud

Want to play with layers and learning rates? Platforms like AWS SageMaker, Google Cloud AI, and Azure Machine Learning let you build deep learning models without a supercomputer. Watch for our next blog on “Deep Learning on AWS, GCP, and Azure”!

Conclusion

Deep learning is like a master chef—its layers whip raw data into something amazing, and the learning rate decides how quickly it perfects the recipe. From unlocking phones to growing crops, this tech is everywhere, and we’ve unpacked it all with examples, diagrams, and a bit of fun. At TowardsCloud, we’re thrilled to guide you through this journey—so what’s your take? Rate your grasp of layers and learning rates (1-10) in the comments, and let’s keep the conversation going!

FAQ

- How is deep learning different from regular neural networks?

Deep learning uses many hidden layers to tackle complex tasks, while basic neural networks keep it simpler with fewer layers.

- Why do we need so many layers?

More layers let the model learn intricate patterns, like going from “there’s a shape” to “it’s a smiling face.”

- How do I pick a learning rate?

Start moderate, then tweak it. Tools like adaptive rates (e.g., Adam optimizer) can help automate this. Check out TensorFlow’s guide!

- Can deep learning work without cloud platforms?

Sure, but clouds like AWS make it scalable and affordable, which is perfect for big datasets.
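Since the FAQ mentions the Adam optimizer, here is a minimal sketch of the idea behind it, written in plain Python for a single weight. It keeps a running average of recent gradients and of their squares, then uses both to scale each step. The function name `adam_minimize`, the toy gradient, and the learning rate of 0.1 are assumptions for illustration; the beta and epsilon values are the commonly used defaults. In practice you would use the optimizer built into your framework rather than writing your own.

```python
import math

def adam_minimize(gradient_fn, w=0.0, steps=300, learning_rate=0.1,
                  beta1=0.9, beta2=0.999, epsilon=1e-8):
    """Minimize a one-parameter function with the Adam update rule."""
    m = 0.0  # running average of gradients (momentum)
    v = 0.0  # running average of squared gradients (step scaling)
    for t in range(1, steps + 1):
        g = gradient_fn(w)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)   # bias correction for the early steps
        v_hat = v / (1 - beta2 ** t)
        w -= learning_rate * m_hat / (math.sqrt(v_hat) + epsilon)
    return w

# Toy problem: the gradient of (w - 3)**2, whose minimum is at w = 3.
w = adam_minimize(lambda w: 2.0 * (w - 3.0))
print("weight after training:", round(w, 3))
```

Because each step is divided by the recent gradient size, the update stays a sensible size whether gradients are large or small, which is why adaptive methods are often more forgiving of an imperfect starting learning rate.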

That’s a wrap on “Deep Learning Basics: Layers and Learning Rates”! Stick around for daily tech insights at TowardsCloud.com—see you next time!