In the rapidly evolving landscape of artificial intelligence, generative AI has emerged as a transformative force, capable of creating content ranging from text and images to code and beyond. As organizations race to incorporate these powerful capabilities into their applications and workflows, cloud providers have stepped up with specialized offerings. Today, we’ll explore how AWS—the market leader in cloud services—has built a comprehensive ecosystem to support generative AI development and deployment.

The Generative AI Revolution

Before diving into AWS’s specific offerings, let’s understand why generative AI has gained such momentum. Unlike traditional AI systems that primarily classify or predict based on existing patterns, generative AI creates new content that wasn’t explicitly programmed. This represents a fundamental shift in what computers can do—moving from analysis to creation.

For businesses, this shift opens up entirely new possibilities: customer service chatbots that understand context and nuance, content creation that scales beyond human capacity, code generation that accelerates development, and personalized experiences that adapt to individual users in real-time.

AWS’s Generative AI Strategy

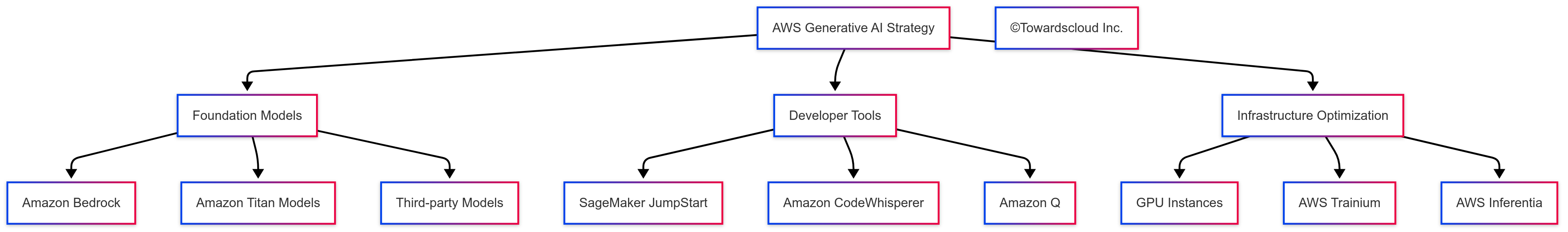

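AWS has taken a characteristically comprehensive approach to generative AI, building a full stack of services that covers everything from foundation model access to production-grade deployment. The approach rests on three pillars, which also organize the rest of this article: foundation models (Amazon Bedrock and the Titan family), developer tools, and purpose-built infrastructure.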

Now, let’s explore each aspect of AWS’s generative AI offerings in greater detail.

Foundation Models: Amazon Bedrock

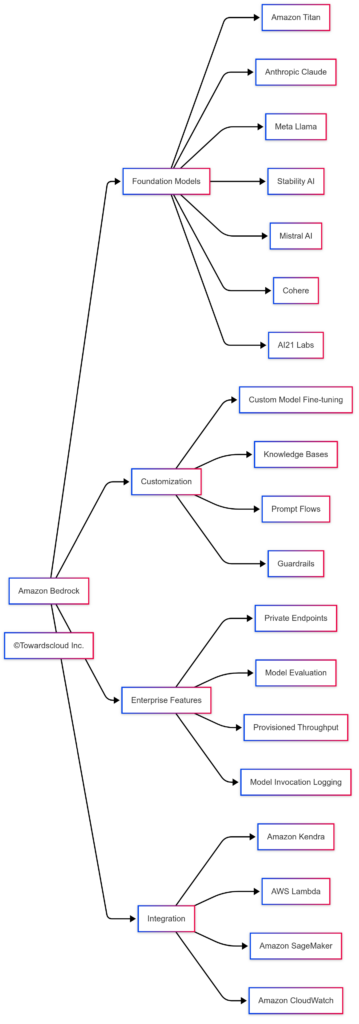

At the heart of AWS’s generative AI strategy is Amazon Bedrock, a fully managed service that provides access to high-performance foundation models (FMs) through a unified API. Generally available since September 2023, Bedrock is AWS’s answer to the growing demand for easy access to state-of-the-art models.
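To make the "unified API" concrete, here is a minimal sketch of a single-turn request through Bedrock's Converse operation using boto3. The helper names `ask_model` and `make_runtime_client` are illustrative, not part of any AWS SDK, and the region default is an assumption; the client is passed in so the function can be exercised without AWS access.

```python
from typing import Any


def ask_model(client: Any, model_id: str, prompt: str, max_tokens: int = 256) -> str:
    """Send one user message through the Bedrock Converse API and return the reply text."""
    response = client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": max_tokens, "temperature": 0.2},
    )
    # The reply is a message whose content is a list of blocks; join the text blocks.
    blocks = response["output"]["message"]["content"]
    return "".join(block.get("text", "") for block in blocks)


def make_runtime_client(region: str = "us-east-1") -> Any:
    """Create a Bedrock runtime client (needs AWS credentials and model access)."""
    import boto3  # imported lazily so ask_model can be tested without AWS access

    return boto3.client("bedrock-runtime", region_name=region)
```

With credentials configured, a call such as `ask_model(make_runtime_client(), "anthropic.claude-3-haiku-20240307-v1:0", "Summarize Amazon Bedrock in one sentence.")` would work for any model enabled in your account; switching providers should mostly be a matter of changing the model ID.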

What Makes Bedrock Special?

Bedrock isn’t just another model hosting service—it’s designed to solve real enterprise challenges with generative AI:

- Model Variety: Bedrock offers access to models from leading AI companies including Amazon’s own Titan models, Anthropic (Claude), AI21 Labs (Jurassic), Cohere, Meta (Llama 2 and Llama 3), Mistral AI, and Stability AI (Stable Diffusion).

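- Enterprise-Grade Security: Your prompts and outputs aren’t used to train the underlying models or shared with model providers, data is encrypted in transit and at rest, and you can reach Bedrock privately from your VPC through AWS PrivateLink endpoints. These are critical concerns for businesses with sensitive information.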

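- Customization Capabilities: Bedrock lets you adapt foundation models to your own data in two distinct ways: fine-tuning, which trains a private copy of a supported model on your examples, and Retrieval Augmented Generation (RAG), which leaves the model unchanged and retrieves relevant documents from a knowledge base at query time. Both are managed features, so neither requires deep ML expertise.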

- Seamless Integration: As part of the AWS ecosystem, Bedrock integrates with services like Amazon Kendra for enterprise search and Lambda for serverless compute.

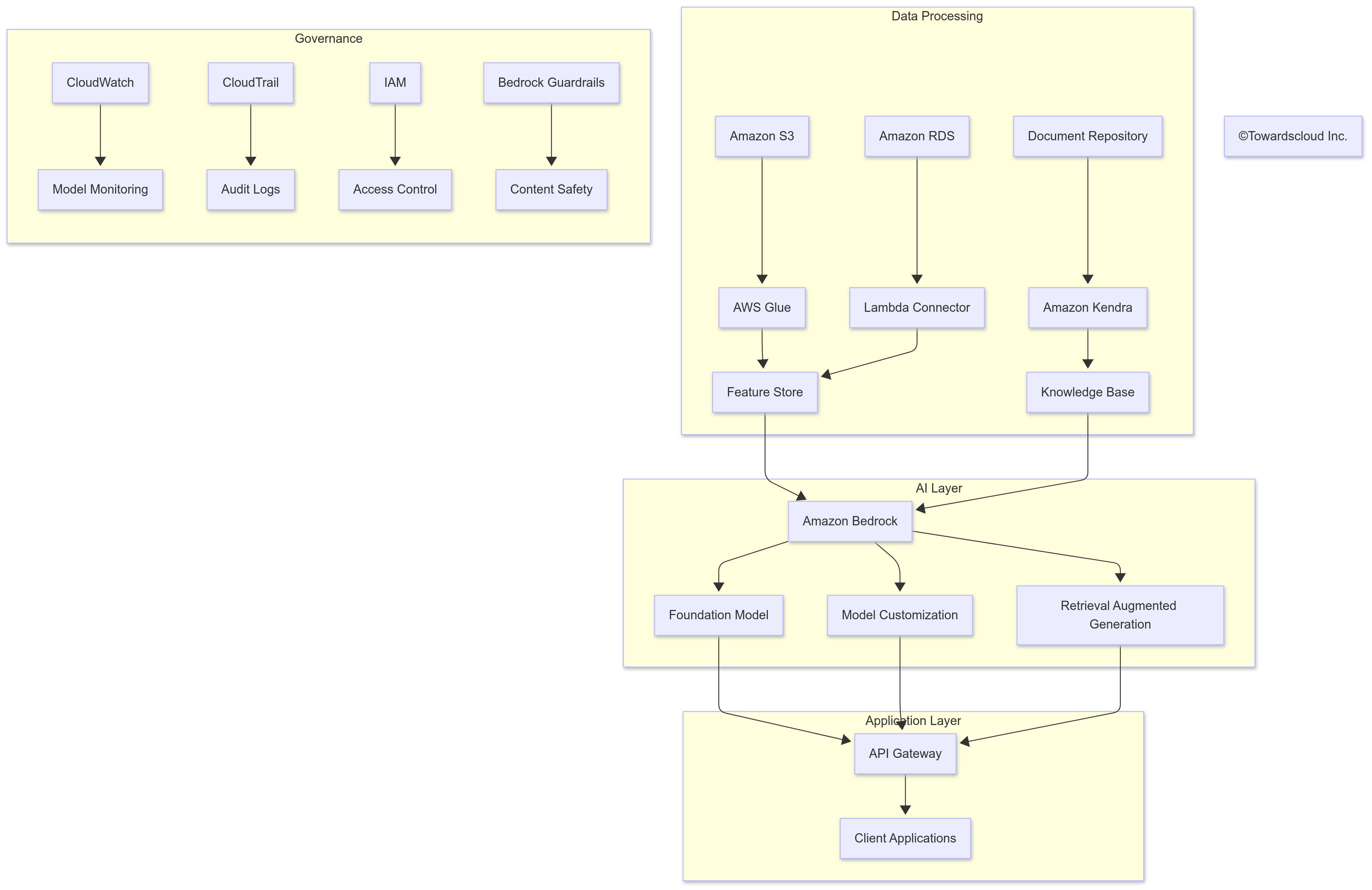

Let’s visualize the Bedrock ecosystem:

Real-World Bedrock Application: Personalized Insurance Agent

Let me share a practical example of how Bedrock can transform business processes. Consider an insurance company that wants to create a personalized policy recommendation system. Using Bedrock, they can:

- Start with the Anthropic Claude model for its strong reasoning and context understanding

- Fine-tune it with thousands of policy documents and customer interaction histories

- Create a knowledge base connecting their product catalog and compliance documentation

- Implement guardrails to ensure recommendations follow regulatory requirements

- Build a conversational interface that translates complex insurance terms into plain language for customers

The result is an AI assistant that can understand nuanced customer needs, explain complex coverage options, and generate personalized policy recommendations—all while maintaining compliance with insurance regulations.
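The guardrail and plain-language steps above could be wired together roughly as follows. This is a sketch, not the insurer's actual system: `recommend_policy` and `SYSTEM_PROMPT` are hypothetical names, and the guardrail identifier and version are assumed to come from a guardrail you have already created in Bedrock.

```python
from typing import Any

# Hypothetical system prompt for the insurance assistant described above.
SYSTEM_PROMPT = (
    "You are an insurance assistant. Explain coverage options in plain language "
    "and recommend only products from the provided catalog."
)


def recommend_policy(
    client: Any,
    model_id: str,
    guardrail_id: str,
    guardrail_version: str,
    customer_message: str,
) -> dict:
    """Ask the model for a recommendation with a Bedrock guardrail attached."""
    response = client.converse(
        modelId=model_id,
        system=[{"text": SYSTEM_PROMPT}],
        messages=[{"role": "user", "content": [{"text": customer_message}]}],
        guardrailConfig={
            "guardrailIdentifier": guardrail_id,
            "guardrailVersion": guardrail_version,
        },
    )
    text = "".join(
        block.get("text", "") for block in response["output"]["message"]["content"]
    )
    # When the guardrail blocks or rewrites content, Converse reports it in stopReason.
    blocked = response.get("stopReason") == "guardrail_intervened"
    return {"text": text, "blocked": blocked}
```

Returning the `blocked` flag lets the application log interventions for compliance review instead of silently showing the guardrail's canned message.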

Amazon Titan Models

While AWS offers access to third-party models through Bedrock, they’ve also developed their own family of foundation models called Amazon Titan.

Titan Model Family

The Titan model lineup includes:

- Titan Text: For generating text, summarization, and content creation

- Titan Embeddings: For converting text to vector representations used in semantic search

- Titan Multimodal Embeddings: For representing text and images in a shared vector space, which powers search and recommendations that combine both

- Titan Image Generator: For creating images from text descriptions

What sets Titan models apart is their design for production business use cases rather than experimental or research applications. They’re built with responsible AI principles from the ground up, with features like watermarking for images to address concerns about AI-generated content.
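As an illustration of how Titan Embeddings supports semantic search, the sketch below requests a vector for a piece of text and compares two vectors with cosine similarity. The function names are illustrative; the default model ID is the Titan Text Embeddings V2 identifier and should be checked against the models enabled in your account.

```python
import json
import math
from typing import Any


def embed_text(client: Any, text: str, model_id: str = "amazon.titan-embed-text-v2:0") -> list:
    """Return the embedding vector Titan produces for a piece of text."""
    response = client.invoke_model(
        modelId=model_id,
        body=json.dumps({"inputText": text}),
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream containing JSON with an "embedding" field.
    payload = json.loads(response["body"].read())
    return payload["embedding"]


def cosine_similarity(a: list, b: list) -> float:
    """Similarity in [-1, 1]; higher means the two texts are semantically closer."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0
```

Semantic search then amounts to embedding the query, embedding (or looking up) the stored documents, and ranking documents by `cosine_similarity` against the query vector.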

Developer Tools for Generative AI

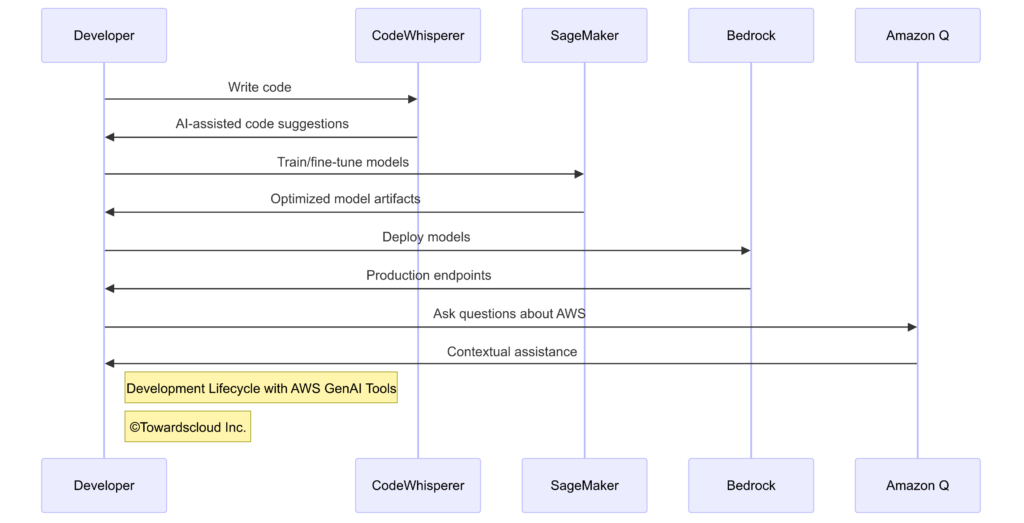

AWS recognizes that accessing foundation models is just the beginning. To build production applications, developers need specialized tools throughout the development lifecycle.

Amazon CodeWhisperer

CodeWhisperer is AWS’s answer to AI-assisted coding, similar to GitHub Copilot but integrated deeply with the AWS ecosystem. (In 2024 AWS folded it into Amazon Q Developer, so newer documentation uses that name.) It offers:

- Real-time code suggestions as you type in popular IDEs like VS Code, IntelliJ, and AWS Cloud9

- Full function generation from natural language comments

- AWS service integration with specialized knowledge of AWS APIs and best practices

- Security scanning to identify vulnerabilities in generated code

- Reference tracking to attribute code to open source origins

For cloud development teams, CodeWhisperer’s understanding of AWS services provides a significant advantage. For example, when writing Lambda functions, it can suggest the correct IAM permissions, environment variables, and event handling patterns based on the function’s purpose.

Amazon SageMaker for GenAI

SageMaker, AWS’s comprehensive machine learning platform, has been expanded with features specifically designed for generative AI:

- SageMaker JumpStart provides pre-trained foundation models with one-click deployment

- SageMaker Studio offers notebook-based environments for model experimentation

- SageMaker Training Compiler optimizes training for large language models

- SageMaker Inference Recommender helps select the right instance type for cost-effective model serving
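Once a JumpStart model is deployed to an endpoint, applications usually call it through the SageMaker runtime API. The sketch below assumes a text-generation container that accepts an `inputs`/`parameters` JSON payload, which is common for Hugging Face based containers but not universal; check the schema of your specific model. The function name is illustrative.

```python
import json
from typing import Any


def generate_from_endpoint(client: Any, endpoint_name: str, prompt: str, max_new_tokens: int = 128) -> Any:
    """Invoke a deployed SageMaker endpoint and return its parsed JSON response."""
    # Assumed payload schema; JumpStart models differ, so verify against the model card.
    payload = {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}}
    response = client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Accept="application/json",
        Body=json.dumps(payload),
    )
    # The body is a stream; the shape of the decoded JSON depends on the container.
    return json.loads(response["Body"].read())
```

The `client` here would be `boto3.client("sagemaker-runtime")`. The response is returned unparsed beyond JSON decoding because its structure varies from model to model.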

Amazon Q: AWS’s AI Assistant

In late 2023, AWS introduced Amazon Q, an AI-powered assistant designed specifically for developers and IT professionals working with AWS. Amazon Q can:

- Answer questions about AWS services and features

- Generate code samples for specific AWS tasks

- Troubleshoot issues with existing AWS deployments

- Suggest architectural improvements based on best practices

- Help navigate the AWS documentation ecosystem

What makes Q particularly powerful is its deep knowledge of AWS’s constantly evolving service catalog, which general-purpose models like GPT-4, Claude, or Llama know only as of their training cutoff.

Infrastructure Optimization for GenAI

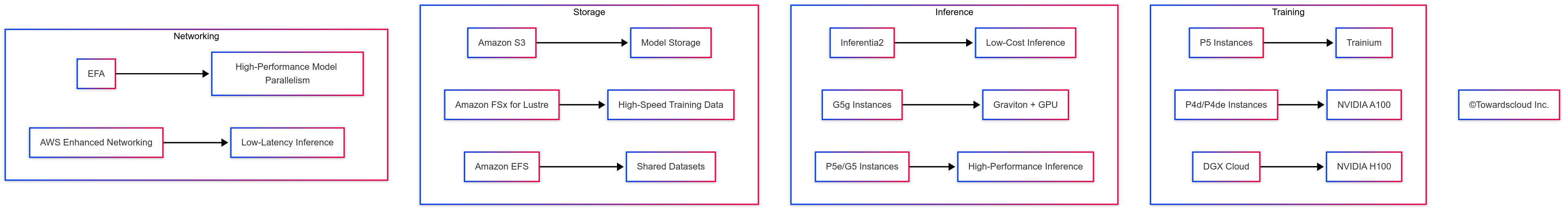

Generative AI models, particularly large language models (LLMs), are computationally intensive. Training these models requires massive GPU clusters, while inference demands low-latency, high-throughput hardware. AWS has invested heavily in purpose-built infrastructure for these workloads.

Purpose-Built Silicon

AWS has developed custom silicon specifically for AI workloads:

- AWS Trainium: Purpose-built accelerators for training deep learning models. AWS cites training cost savings of up to 50% over comparable EC2 instances; treat this as a vendor figure that depends on the model and workload.

- AWS Inferentia2: Second-generation inference accelerators. AWS cites up to 4x higher throughput and up to 10x lower latency than first-generation Inferentia, which makes them practical for serving large generative models.

Specialized EC2 Instances

AWS offers a range of EC2 instance types optimized for different generative AI workloads:

- P5 instances: Powered by NVIDIA H100 GPUs, these are AWS’s highest-performance instances for generative AI training.

- P4d/P4de instances: Featuring NVIDIA A100 GPUs, these provide a balance of performance and cost for production training workloads.

- G5 instances: Equipped with NVIDIA A10G GPUs, these are versatile instances suitable for both training smaller models and inference.

- G5g instances: These combine AWS Graviton processors with NVIDIA T4G GPUs for cost-effective inference.

- Inf2 instances: Powered by AWS Inferentia2 accelerators, these are optimized specifically for generative AI inference.

NVIDIA DGX Cloud on AWS

For organizations requiring the absolute highest performance for model training, AWS offers NVIDIA DGX Cloud. This service provides dedicated access to NVIDIA DGX H100 systems, complete with NVIDIA’s optimized AI software stack. While expensive, this option provides the raw compute power needed for training the largest foundation models from scratch.

Building GenAI Applications on AWS: A Practical Approach

Now that we’ve covered AWS’s offerings, let’s walk through a practical approach to building generative AI applications using these services.

Step 1: Data Preparation

The first step in any generative AI project is preparing your data. For most enterprise applications, this means:

- Collecting relevant data from sources like product catalogs, knowledge bases, and customer interactions

- Cleaning and preprocessing this data to remove noise and standardize formats

- Creating high-quality embeddings that represent the semantic meaning of your data

- Building knowledge bases in Bedrock or vector databases like Amazon OpenSearch

AWS services useful at this stage include:

- Amazon S3 for data storage

- AWS Glue for ETL processes

- Amazon OpenSearch for vector storage

- Amazon Kendra for enterprise search capabilities
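Before text can be embedded and indexed, long documents are usually split into overlapping chunks so that each vector covers a focused passage. The sketch below shows one simple word-based approach; the chunk size and overlap defaults are arbitrary starting points, not AWS recommendations, and managed options such as Bedrock knowledge bases apply their own chunking.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 40) -> list:
    """Split text into chunks of up to chunk_size words, sharing `overlap` words."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    # str.split() also normalizes runs of whitespace and newlines.
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the document
    return chunks
```

The overlap keeps sentences that straddle a boundary retrievable from either neighboring chunk, at the cost of storing some words twice.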

Step 2: Model Selection and Customization

Next, you’ll need to select the right foundation model for your use case:

- Evaluate models available in Bedrock based on performance, cost, and capabilities

- Fine-tune selected models with your domain-specific data if needed

- Create prompt templates that effectively guide the model to produce desired outputs

- Implement guardrails to ensure outputs meet your standards for accuracy and safety
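A prompt template can be as simple as a parameterized string kept under version control. The sketch below uses Python's standard `string.Template`; the template wording, the `SUPPORT_TEMPLATE` name, and the product example are all illustrative.

```python
from string import Template

# Illustrative template: it constrains the model to the supplied context
# and caps the answer length, two common ways to steer output.
SUPPORT_TEMPLATE = Template(
    "You are a support assistant for $product.\n"
    "Answer using only the context below. "
    "If the answer is not in the context, say you do not know.\n\n"
    "Context:\n$context\n\n"
    "Question: $question\n"
    "Answer in at most $max_sentences sentences."
)


def render_prompt(product: str, context: str, question: str, max_sentences: int = 3) -> str:
    """Fill the template; substitute() raises KeyError if a field is missing."""
    return SUPPORT_TEMPLATE.substitute(
        product=product,
        context=context.strip(),
        question=question.strip(),
        max_sentences=max_sentences,
    )
```

Keeping templates separate from application code makes it easier to compare variants across candidate models during evaluation.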

Step 3: Application Development

With your data prepared and models selected, you can build your application:

- Design APIs that integrate with your selected foundation models

- Implement RAG patterns to enhance model responses with your enterprise knowledge

- Create user interfaces that make AI capabilities accessible to end users

- Set up monitoring and evaluation to track performance and identify issues

AWS services useful at this stage include:

- AWS Lambda for serverless compute

- Amazon API Gateway for API management

- AWS Amplify for frontend development

- Amazon CloudWatch for monitoring
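One way to combine the RAG and API pieces above is a Lambda-style handler that forwards a question to a Bedrock knowledge base. This sketch uses the `retrieve_and_generate` operation of the `bedrock-agent-runtime` client; the function names, the request body shape (`{"question": ...}`), and the assumption that sources live in S3 are illustrative choices.

```python
import json
from typing import Any


def answer_with_knowledge_base(client: Any, knowledge_base_id: str, model_arn: str, question: str) -> dict:
    """Retrieve relevant passages and generate a grounded answer in one call."""
    response = client.retrieve_and_generate(
        input={"text": question},
        retrieveAndGenerateConfiguration={
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,
                "modelArn": model_arn,
            },
        },
    )
    sources = []
    for citation in response.get("citations", []):
        for ref in citation.get("retrievedReferences", []):
            # Assumes S3-backed data sources; other source types use other keys.
            uri = ref.get("location", {}).get("s3Location", {}).get("uri")
            if uri and uri not in sources:
                sources.append(uri)
    return {"answer": response["output"]["text"], "sources": sources}


def handle_api_request(event: dict, client: Any, knowledge_base_id: str, model_arn: str) -> dict:
    """Handle an API Gateway proxy-style event whose JSON body has a 'question' field."""
    try:
        question = json.loads(event.get("body") or "{}")["question"]
    except (KeyError, TypeError, json.JSONDecodeError):
        return {"statusCode": 400, "body": json.dumps({"error": "expected a JSON body with a 'question' field"})}
    result = answer_with_knowledge_base(client, knowledge_base_id, model_arn, question)
    return {"statusCode": 200, "headers": {"Content-Type": "application/json"}, "body": json.dumps(result)}
```

Returning the source URIs alongside the answer gives the user interface something to cite, which also helps when evaluating answer quality later.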

Step 4: Production Deployment

Finally, you’ll deploy your application to production:

- Set up scaling rules to handle variable loads

- Implement caching strategies to improve response times and reduce costs

- Establish CI/CD pipelines for ongoing updates

- Create robust error handling and fallback mechanisms
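The caching and fallback items above can be sketched together in a small wrapper. This is an in-memory illustration only: the `CachedGenerator` class is hypothetical, each backend is any callable that takes a prompt and returns text (for example, a function that calls a specific model), and a production system would more likely use a shared cache such as Amazon ElastiCache with expiry.

```python
import hashlib
from typing import Callable, Dict, List


class CachedGenerator:
    """Try model backends in order and cache the first successful answer."""

    def __init__(self, backends: List[Callable[[str], str]]):
        self.backends = backends
        self._cache: Dict[str, str] = {}

    @staticmethod
    def _key(prompt: str) -> str:
        # Normalize case and whitespace so trivially different prompts share an entry.
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def generate(self, prompt: str) -> str:
        key = self._key(prompt)
        if key in self._cache:
            return self._cache[key]
        last_error = None
        for backend in self.backends:
            try:
                result = backend(prompt)
            except Exception as exc:  # fall through to the next backend
                last_error = exc
                continue
            self._cache[key] = result
            return result
        raise RuntimeError("all model backends failed") from last_error
```

Ordering the backends from preferred to cheapest-acceptable gives a graceful degradation path when the primary model is throttled or unavailable.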

Real-World Success Stories

Let’s look at how some organizations are using AWS’s generative AI services to transform their businesses:

Intuit: Personalized Financial Advice

Intuit, the company behind TurboTax and QuickBooks, uses Amazon Bedrock to power a personalized financial assistant that helps small business owners interpret their financial data and make better decisions. By combining Bedrock’s foundation models with Intuit’s vast financial dataset, they created an AI that can explain financial concepts, identify potential tax deductions, and suggest cash flow improvements—all tailored to each business’s specific situation.

3M: Accelerating Research and Development

3M uses a combination of SageMaker and Bedrock to accelerate their R&D processes. Their AI system analyzes thousands of research papers, patent filings, and internal documents to identify potential connections between seemingly unrelated scientific fields. This has helped their scientists discover novel material combinations and applications, significantly reducing the time from concept to product.

Wunderman Thompson: Creative Content Generation

Global marketing agency Wunderman Thompson uses Amazon Titan Multimodal and Image Generator models to create initial concepts for advertising campaigns. Their system can generate variations of ad designs based on campaign briefs, product specifications, and target audience profiles. While human creatives still refine and finalize the work, the AI system has reduced the time required for initial concept development by over 60%.

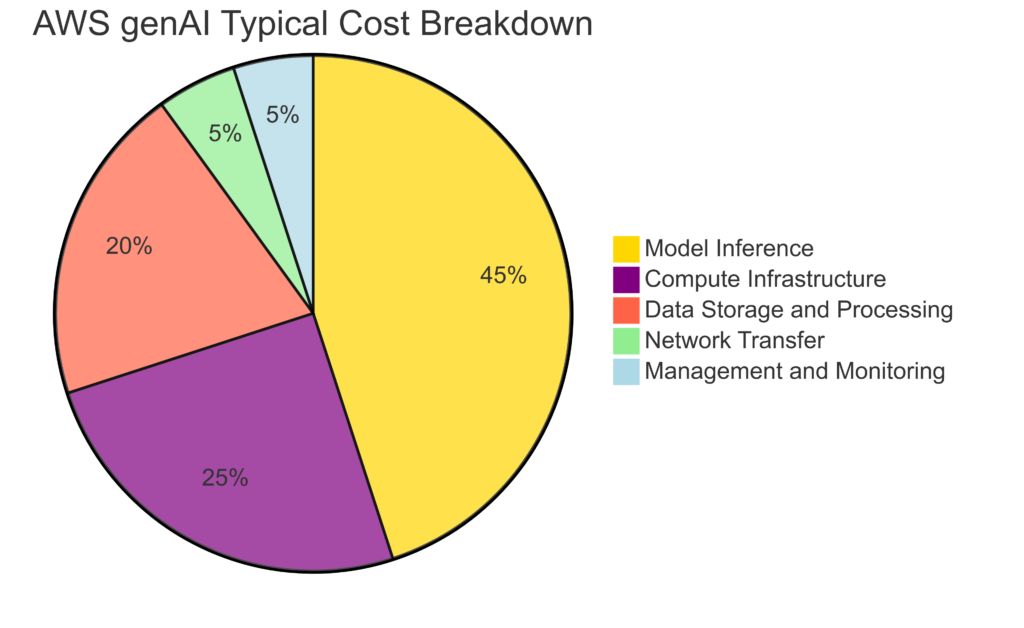

Cost Considerations

A practical discussion of AWS’s generative AI offerings wouldn’t be complete without addressing costs. Generative AI can be expensive, particularly when operating at scale. Here’s a breakdown of key cost factors:

Cost Optimization Strategies

To manage generative AI costs on AWS, consider these strategies:

- Right-size your models: Smaller, more specialized models often match or beat larger general-purpose models on narrow tasks, and they cost less per token.

- Implement caching: Many generative AI applications receive similar queries. Caching common responses can significantly reduce inference costs.

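- Use Provisioned Throughput: For predictable workloads, Bedrock’s Provisioned Throughput option can reduce costs, by up to 40% in some cases, compared to on-demand pricing. The actual saving depends on the model, the commitment term, and how fully you use the reserved capacity.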

- Choose the right instance types: For custom models deployed on SageMaker, selecting optimized instance types like Inferentia2-based instances can dramatically reduce inference costs.

- Monitor and optimize prompt design: Shorter, more efficient prompts reduce token usage and therefore costs.
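To see how prompt length and caching interact, it helps to put rough numbers on token-based pricing. The sketch below is simple arithmetic; the function name is illustrative, and any prices you pass in are placeholders that should be replaced with the current rates for your chosen model.

```python
def estimate_monthly_cost(
    requests_per_day: float,
    input_tokens: float,
    output_tokens: float,
    price_in_per_1k: float,
    price_out_per_1k: float,
    cache_hit_rate: float = 0.0,
    days: int = 30,
) -> float:
    """Estimate on-demand inference spend for a token-priced model."""
    if not 0.0 <= cache_hit_rate <= 1.0:
        raise ValueError("cache_hit_rate must be between 0 and 1")
    # Requests served from cache never reach the model, so they are not billed.
    billable_requests = requests_per_day * days * (1.0 - cache_hit_rate)
    cost_per_request = (
        input_tokens / 1000.0 * price_in_per_1k
        + output_tokens / 1000.0 * price_out_per_1k
    )
    return billable_requests * cost_per_request
```

For example, with made-up prices of 0.001 per 1,000 input tokens and 0.002 per 1,000 output tokens, 1,000 requests a day at 1,000 input and 500 output tokens each comes to about 60 per month; a 50% cache hit rate halves that, and trimming the prompt reduces the input term directly.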

Future Directions: AWS GenAI Roadmap

AWS continues to evolve its generative AI offerings at a rapid pace. Based on recent announcements and industry trends, here are some developments we can expect:

- More specialized Titan models: AWS is likely to expand its Titan family with domain-specific models for industries like healthcare, finance, and manufacturing.

- Enhanced multimodal capabilities: As generative AI expands beyond text, expect AWS to strengthen support for audio, video, and 3D content generation.

- Simplified RAG implementation: The pattern of Retrieval Augmented Generation is becoming increasingly important, and AWS is likely to provide more streamlined tools for implementing it.

- Edge deployment options: For applications requiring low latency or offline operation, AWS will likely introduce optimized models that can run on edge devices through services like AWS IoT Greengrass.

- Expanded responsible AI features: As regulatory scrutiny of AI increases, expect AWS to bolster its offerings around model explainability, bias detection, and content authenticity.

Conclusion: Getting Started with AWS GenAI

Generative AI on AWS represents a powerful opportunity for organizations to transform their applications and workflows. By combining foundation models with enterprise data and domain expertise, businesses can create AI systems that generate genuine competitive advantage.

For those just beginning their generative AI journey, I recommend this approach:

- Start small: Choose a well-defined use case with clear success metrics

- Experiment with Bedrock: Use the console to explore different models and understand their capabilities

- Build with RAG: Implement Retrieval Augmented Generation to combine models with your domain knowledge

- Iterate rapidly: Collect user feedback and continuously refine your prompts and knowledge bases

- Scale gradually: As you prove value, expand to more complex use cases and larger deployments

Remember that generative AI is still evolving rapidly. What seems cutting-edge today may be standard practice tomorrow. The key is to start building practical experience now so your organization can adapt as the technology matures.

AWS’s comprehensive stack—from foundation models to specialized infrastructure—provides all the tools needed to build production-grade generative AI applications. By understanding these offerings and how they fit together, you’re well-positioned to leverage this transformative technology for your business.

This article is part of our ongoing series exploring cloud technologies and AI innovation. For more insights on implementing cloud solutions, visit TowardsCloud.com.