What is Reinforcement Learning in Generative AI?

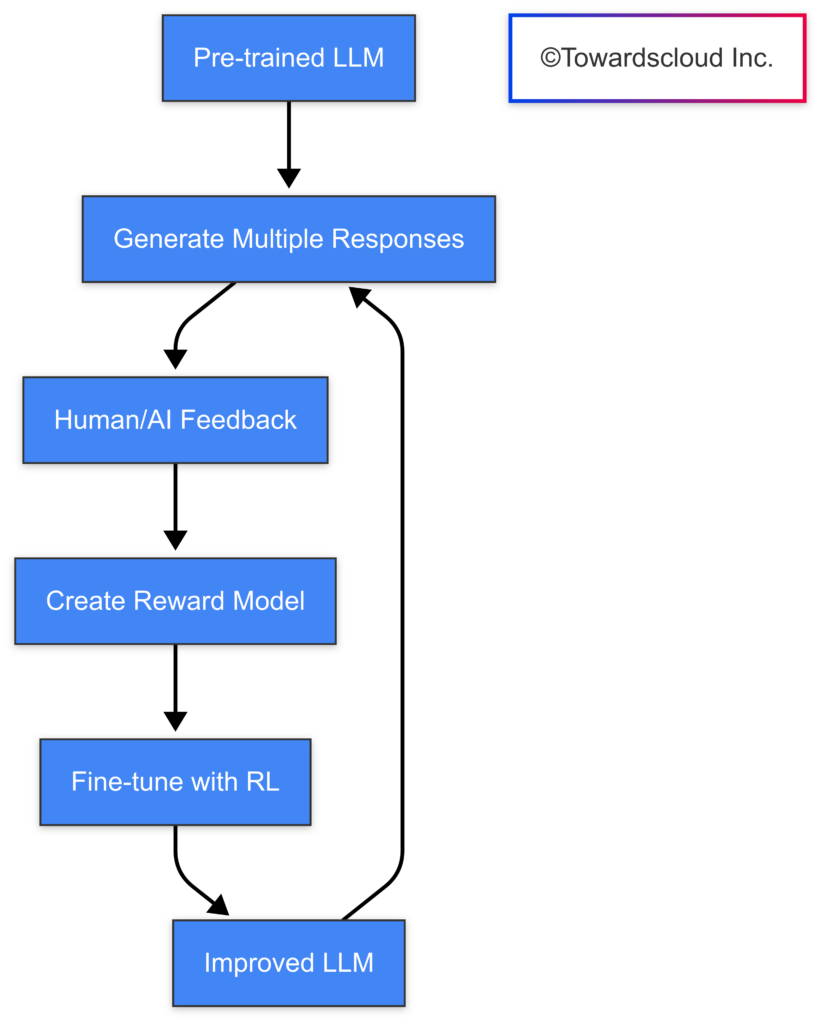

Reinforcement Learning (RL) is a type of machine learning in which an agent learns to make decisions by taking actions in an environment so as to maximize cumulative reward. In generative AI, RL is most often applied as Reinforcement Learning from Human Feedback (RLHF) or Reinforcement Learning from AI Feedback (RLAIF). In both cases the reward signal comes from human or AI judgments of the model's outputs.
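"Cumulative reward" is usually formalized as a discounted return, G = r_0 + γ·r_1 + γ²·r_2 + ..., where the discount factor γ (gamma, between 0 and 1) weights near-term rewards more heavily. Below is a minimal Python sketch. The gamma values are illustrative choices, not settings taken from any particular RLHF system.

```python
def discounted_return(rewards: list[float], gamma: float = 0.99) -> float:
    """Return sum_k gamma**k * rewards[k], the discounted cumulative reward."""
    total = 0.0
    # Walk backwards so each earlier step picks up one more factor of gamma.
    for r in reversed(rewards):
        total = r + gamma * total
    return total

# A three-step episode rewarded only at the end, similar to scoring a
# finished generated response once rather than token by token.
print(discounted_return([0.0, 0.0, 1.0], gamma=0.9))
```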

Why Use Reinforcement Learning for Generative AI?

Traditional supervised learning has limitations when training generative models:

- Alignment Problem: Models trained purely on next-token prediction may generate harmful, biased, or incorrect content

- Preference Learning: Human preferences are hard to capture through supervised learning alone

- Context Sensitivity: Standard supervised learning doesn’t account for the varied contexts in which different responses are appropriate

RLHF addresses these issues by incorporating direct feedback on generated outputs to align the model with human preferences.

Core Components of RLHF

1. Base Model

Start with a pre-trained language model (like GPT, PaLM, Claude, or Llama).

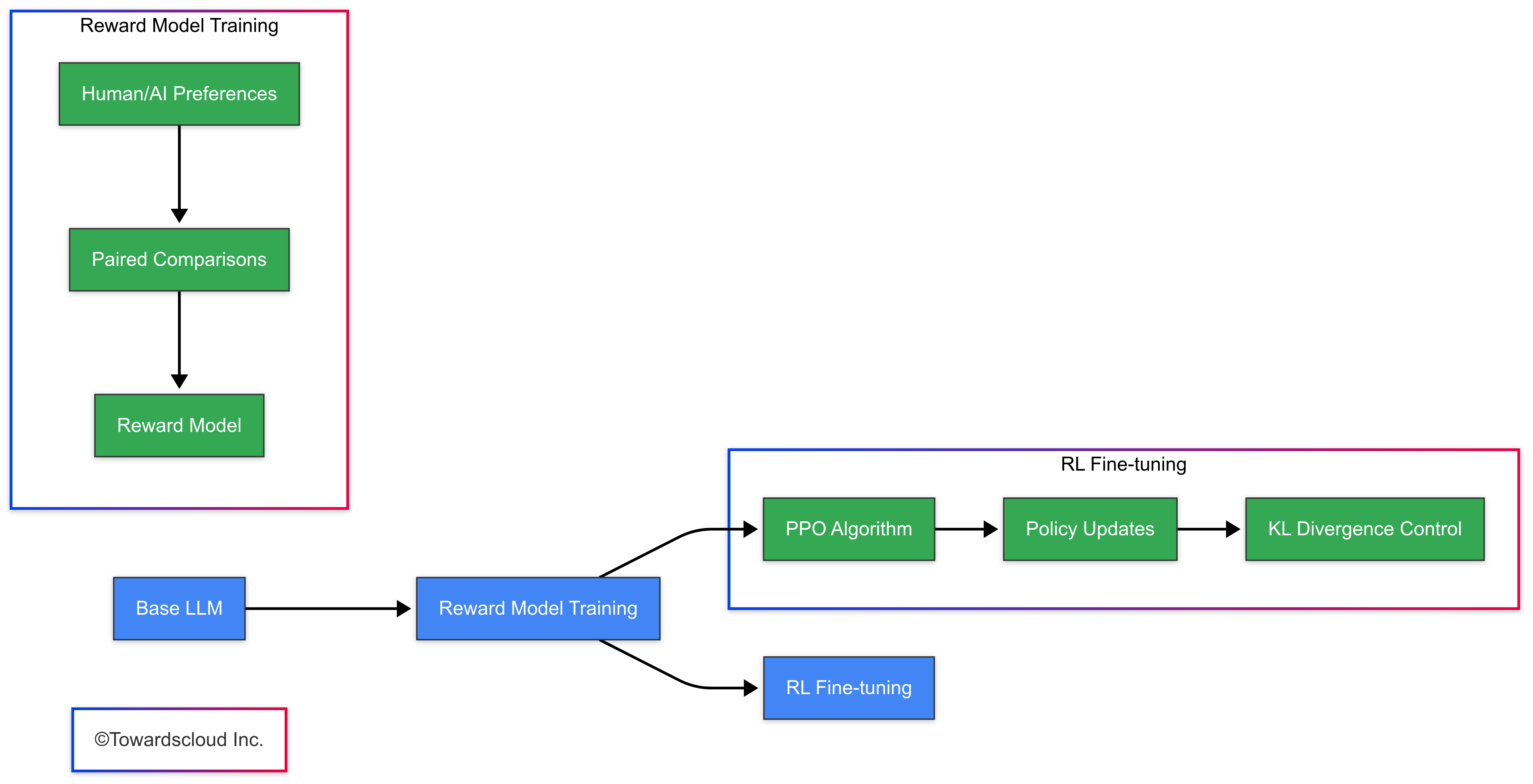

2. Reward Model

Train a model to predict human preferences between different outputs.
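Reward models are commonly trained on pairwise comparisons with a Bradley-Terry style objective. Suppose annotators preferred response A over response B. The model is then trained to minimize -log σ(r_A - r_B), where r_A and r_B are its scalar scores and σ is the logistic sigmoid. The sketch below computes this loss for plain float scores. In practice the scores come from a neural network, which is assumed here rather than shown, and the function names are illustrative.

```python
import math

def preference_probability(score_chosen: float, score_rejected: float) -> float:
    """Bradley-Terry probability that the chosen response is preferred."""
    d = score_chosen - score_rejected
    if d >= 0:
        return 1.0 / (1.0 + math.exp(-d))
    e = math.exp(d)  # rewritten form avoids overflow for large negative d
    return e / (1.0 + e)

def pairwise_loss(score_chosen: float, score_rejected: float) -> float:
    """Negative log-likelihood -log(sigmoid(score_chosen - score_rejected))."""
    margin = score_chosen - score_rejected
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    # -log(sigmoid(m)) == -m + log(1 + exp(m)); avoids exp overflow when m << 0.
    return -margin + math.log1p(math.exp(margin))

def mean_pairwise_loss(pairs: list[tuple[float, float]]) -> float:
    """Average loss over (chosen_score, rejected_score) pairs in a batch."""
    return sum(pairwise_loss(c, r) for c, r in pairs) / len(pairs)
```

The loss equals log 2 when the two scores tie. It shrinks as the preferred response's score pulls ahead, so gradient descent pushes the reward model to rank outputs the way annotators did.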

3. RL Optimization

Fine-tune the base model with an RL algorithm (typically Proximal Policy Optimization – PPO), using the reward model's scores as the reward signal.
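PPO as used in RLHF typically combines two ingredients:

- a KL-divergence penalty that keeps the fine-tuned policy close to the original (reference) model, so it cannot drift into text that merely games the reward model;
- a clipped surrogate objective that limits how far a single update can move the policy.

The per-token sketch below uses plain floats. The coefficients (beta=0.1, epsilon=0.2) are illustrative assumptions. A real trainer computes these quantities over tensors with automatic differentiation.

```python
import math

def kl_shaped_reward(reward_model_score: float,
                     logprob_policy: float,
                     logprob_reference: float,
                     beta: float = 0.1) -> float:
    """Reward-model score minus a KL penalty estimated from log-probabilities."""
    # log pi(y|x) - log pi_ref(y|x) is a single-sample estimate of the KL divergence.
    kl_estimate = logprob_policy - logprob_reference
    return reward_model_score - beta * kl_estimate

def ppo_clipped_objective(logprob_new: float,
                          logprob_old: float,
                          advantage: float,
                          epsilon: float = 0.2) -> float:
    """PPO clipped surrogate for one action; the trainer maximizes this."""
    ratio = math.exp(logprob_new - logprob_old)
    clipped_ratio = min(max(ratio, 1.0 - epsilon), 1.0 + epsilon)
    # The minimum removes any gain from pushing the ratio outside [1-eps, 1+eps].
    return min(ratio * advantage, clipped_ratio * advantage)
```

A full trainer would also estimate advantages, average this objective over tokens and batches, and take gradient steps on the policy parameters. That machinery is omitted here.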

Implementation for “Word Up! Bot”

Let’s build a simple text enhancement bot using RLHF. Our bot will improve user-provided text by making it more engaging, clear, and grammatically correct, using reinforcement learning driven by user feedback to get better over time.

Independent Implementation

First, let’s look at a Python implementation without cloud-specific features:

Word Up! Bot – Python Implementation
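Here is a minimal sketch of how such a bot could learn from feedback, under heavy simplifying assumptions. Rather than fine-tuning a language model with PPO, it treats a handful of hypothetical rewrite styles as the arms of an epsilon-greedy multi-armed bandit. It then learns which style earns the highest average user rating. The style names, the 0-to-1 reward scale and the simulated users are all illustrative and not part of any real Word Up! Bot code.

```python
import random

class StyleBandit:
    """Epsilon-greedy bandit over hypothetical rewrite styles."""

    def __init__(self, styles, epsilon=0.1, seed=None):
        self.styles = list(styles)
        self.epsilon = epsilon
        self.counts = {s: 0 for s in self.styles}
        self.values = {s: 0.0 for s in self.styles}  # running mean reward per style
        self.rng = random.Random(seed)

    def choose(self):
        # Explore with probability epsilon, otherwise exploit the best estimate.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.styles)
        return max(self.styles, key=lambda s: self.values[s])

    def update(self, style, reward):
        # Incremental mean: v <- v + (r - v) / n
        self.counts[style] += 1
        self.values[style] += (reward - self.values[style]) / self.counts[style]

# Simulated users who, by assumption, rate the "concise" style highest.
true_preference = {"concise": 0.8, "formal": 0.5, "playful": 0.3}
bandit = StyleBandit(true_preference, epsilon=0.1, seed=0)
sim = random.Random(1)
for _ in range(2000):
    style = bandit.choose()
    reward = 1.0 if sim.random() < true_preference[style] else 0.0  # thumbs up/down
    bandit.update(style, reward)
print(max(bandit.values, key=bandit.values.get))
```

The bandit captures the core RL idea of acting, receiving a reward and updating. It ignores the input text entirely, however, which is exactly the gap that a reward model and PPO fine-tuning close.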

Cloud Implementations Comparison

AWS Implementation

AWS Implementation – WordUpBot

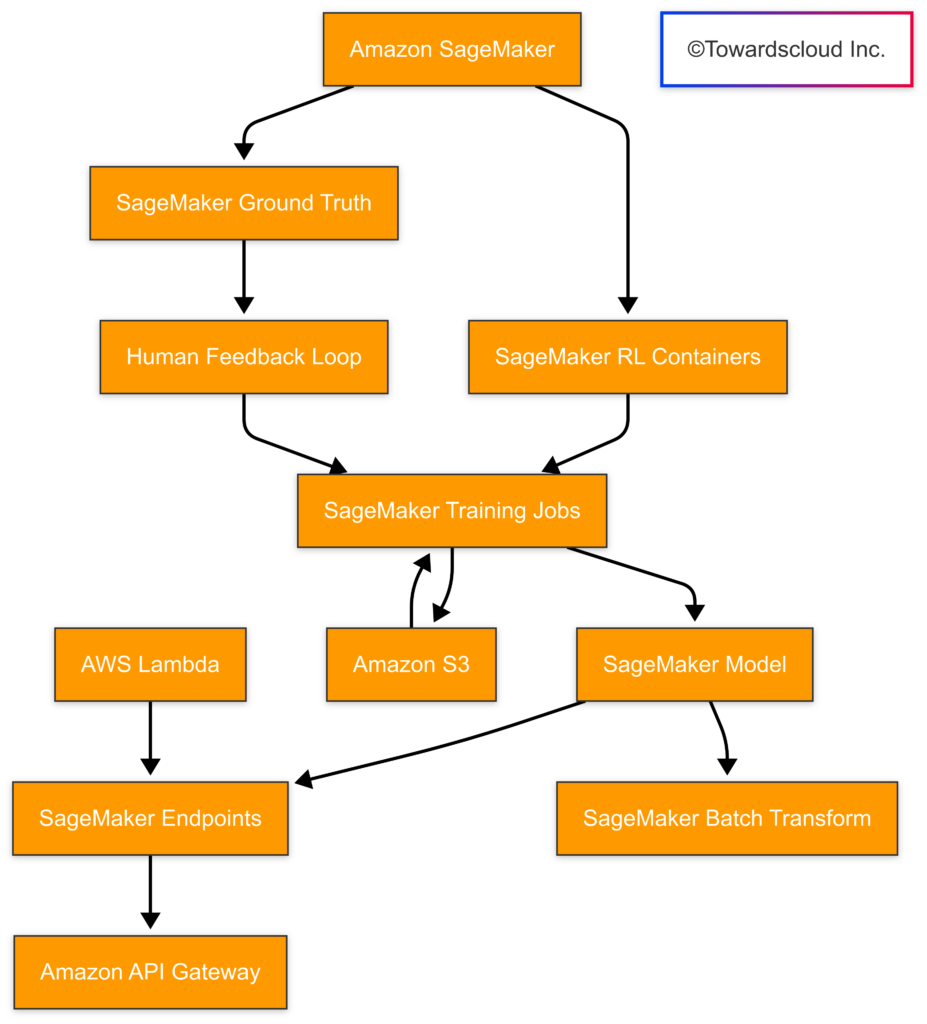

AWS offers several services that facilitate the implementation of RLHF for generative AI applications:

Key AWS Components:

- Amazon SageMaker: Handles model training, deployment, and inference

- Amazon S3: Stores feedback data and model artifacts

- Amazon Comprehend: Provides additional NLP capabilities for text analysis

- AWS Lambda: Can be used for serverless processing of feedback
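To illustrate the S3 and Lambda roles above, here is a sketch of a Lambda handler that validates one feedback event and writes it to S3 as JSON. Several details are hypothetical:

- the bucket name and key layout;
- the event fields (`text_id`, `rating`, `comment`);
- the assumption that the function is invoked directly with a JSON object. Behind API Gateway the payload would instead arrive as a string in `event["body"]`.

The only AWS call is boto3's `put_object`. The client can be injected so the validation logic is testable without AWS credentials.

```python
import json
import uuid
from datetime import datetime, timezone

FEEDBACK_BUCKET = "wordupbot-feedback"  # hypothetical bucket name

def build_feedback_record(event: dict) -> dict:
    """Validate an incoming feedback event and normalize it into a record."""
    rating = int(event["rating"])
    if not 1 <= rating <= 4:  # four-level rating scale
        raise ValueError("rating must be between 1 and 4")
    return {
        "feedback_id": str(uuid.uuid4()),
        "text_id": str(event["text_id"]),
        "rating": rating,
        "comment": event.get("comment", ""),
        "received_at": datetime.now(timezone.utc).isoformat(),
    }

def lambda_handler(event, context, s3_client=None):
    """Lambda entry point: store one feedback record in S3."""
    record = build_feedback_record(event)
    if s3_client is None:
        import boto3  # imported lazily so local tests don't need boto3
        s3_client = boto3.client("s3")
    # Partition keys by UTC date (first 10 chars of the ISO timestamp).
    key = f"feedback/{record['received_at'][:10]}/{record['feedback_id']}.json"
    s3_client.put_object(
        Bucket=FEEDBACK_BUCKET,
        Key=key,
        Body=json.dumps(record).encode("utf-8"),
        ContentType="application/json",
    )
    return {"statusCode": 200, "body": json.dumps({"feedback_id": record["feedback_id"]})}
```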

GCP Implementation

Google Cloud Platform provides a robust ecosystem for implementing RLHF:

Key GCP Components:

- Vertex AI: Manages end-to-end ML workflows

- Cloud Storage: Stores training data and model artifacts

- BigQuery: Can be used for large-scale analysis of feedback data

- Cloud Functions: Enables serverless processing of feedback

- Dataflow: Handles data preprocessing pipeline
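On GCP, one option is to batch feedback records as newline-delimited JSON and write each batch to Cloud Storage. This is a format that BigQuery can load directly. The sketch assumes the `google-cloud-storage` client library, Application Default Credentials and a hypothetical bucket and object name. `to_jsonl` is plain Python and can be tested locally.

```python
import json

def to_jsonl(records: list[dict]) -> str:
    """Serialize records as newline-delimited JSON, one object per line."""
    return "".join(json.dumps(r, sort_keys=True) + "\n" for r in records)

def upload_feedback_batch(records: list[dict], bucket_name: str, blob_name: str) -> None:
    """Write a batch of feedback records to Cloud Storage as JSONL."""
    from google.cloud import storage  # requires the google-cloud-storage package

    client = storage.Client()  # picks up Application Default Credentials
    blob = client.bucket(bucket_name).blob(blob_name)
    blob.upload_from_string(to_jsonl(records), content_type="application/x-ndjson")

# Hypothetical usage:
# upload_feedback_batch(batch, "wordupbot-feedback", "feedback/batch-0001.jsonl")
```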

Azure Implementation

Microsoft Azure provides comprehensive services for implementing RLHF:

Key Azure Components:

- Azure Machine Learning: Handles training and deployment

- Azure Blob Storage: Stores feedback data and model artifacts

- Azure Text Analytics: Provides NLP capabilities

- Azure Functions: Enables serverless processing

- Azure Data Factory: Manages data preprocessing pipelines
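The Azure counterpart for storing a feedback record uses the `azure-storage-blob` package. In this sketch the connection string is read from a hypothetical `WORDUPBOT_STORAGE` environment variable, and the container name is also an assumption. Blobs are named by date so that a downstream pipeline, such as one orchestrated by Azure Data Factory, can process one day of feedback at a time.

```python
import json
import os
from datetime import datetime

CONTAINER = "feedback"  # hypothetical container name

def feedback_blob_name(feedback_id: str, received_at: datetime) -> str:
    """Build a date-partitioned blob name such as 2024/01/05/<id>.json."""
    return f"{received_at:%Y/%m/%d}/{feedback_id}.json"

def save_feedback(record: dict, received_at: datetime) -> str:
    """Upload one feedback record to Azure Blob Storage and return its blob name."""
    from azure.storage.blob import BlobServiceClient  # requires azure-storage-blob

    service = BlobServiceClient.from_connection_string(os.environ["WORDUPBOT_STORAGE"])
    name = feedback_blob_name(record["feedback_id"], received_at)
    blob = service.get_blob_client(container=CONTAINER, blob=name)
    blob.upload_blob(json.dumps(record), overwrite=True)
    return name
```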

Cloud Implementation Comparison

| Feature | AWS | GCP | Azure |
|---|---|---|---|
| Base Model Services | SageMaker JumpStart, Bedrock | Vertex AI PaLM API | Azure OpenAI Service |
| Training Infrastructure | SageMaker Training Jobs | Vertex AI Training | Azure ML Compute |
| Deployment Options | SageMaker Endpoints | Vertex AI Endpoints | Azure ML Endpoints |
| Storage Solutions | S3 | Cloud Storage | Blob Storage |
| Serverless Computing | Lambda | Cloud Functions | Azure Functions |
| Analytics | Comprehend, Kinesis | Natural Language API | Text Analytics |
| Cost Efficiency | Medium | High | Medium |
| Integration Ease | Good | Excellent | Very Good |
| Documentation Quality | Excellent | Good | Very Good |

Challenges and Best Practices

Common Challenges in RLHF Implementation

- Reward Hacking: Models may find ways to maximize rewards without actually improving output quality

- Feedback Quality: Poor or inconsistent human feedback can lead to suboptimal training

- Computational Resources: RLHF typically requires significant computational resources

- Bias Amplification: RL fine-tuning can amplify biases already present in the model or in the feedback it is trained on

Best Practices

- Diverse Feedback Sources: Collect feedback from a diverse set of evaluators

- Robust Reward Models: Design reward models that capture multiple dimensions of quality

- Staged Training: Start with simpler preference models before full RLHF implementation

- Regular Evaluation: Continuously evaluate model outputs against human preferences

- Hybrid Approaches: Consider combining RLHF with other techniques like RLAIF (Reinforcement Learning from AI Feedback)
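For the regular evaluation practice, one simple metric is the win rate of the fine-tuned model against a baseline in side-by-side human comparisons. This sketch assumes each judgment is recorded as "win", "loss" or "tie". It counts a tie as half a win, which is one common convention rather than a fixed standard.

```python
from collections import Counter

def win_rate(judgments: list[str]) -> float:
    """Fraction of comparisons won by the new model, counting ties as half a win."""
    counts = Counter(judgments)
    unknown = set(counts) - {"win", "loss", "tie"}
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no judgments to evaluate")
    return (counts["win"] + 0.5 * counts["tie"]) / total

print(win_rate(["win", "win", "tie", "loss"]))  # 0.625
```

Tracking this number across training checkpoints also helps catch reward hacking. If reward-model scores keep rising while the human win rate stalls or falls, the policy may be exploiting the reward model rather than improving.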

Word Up! Bot (Example front end not yet connected to backend)

Let’s create a page for the Word Up! Bot that demonstrates how you might interact with an RLHF-powered text enhancement system. Here’s what this interface includes:

Key Features

- Text Input and Enhancement
  - Clean input area to paste text
  - Enhancement button that triggers the process
  - Loading animation to indicate processing
- Result Visualization
  - Clear display of enhanced text
  - Side-by-side comparison showing improvements in:
    - Grammar
    - Vocabulary
    - Clarity
- Feedback Collection System
  - Four-level rating scale (Not Helpful to Very Helpful)
  - Optional comment field for specific feedback
  - Submission confirmation
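One way the four-level rating could feed training is to map each level to a scalar reward spaced evenly between -1 and 1. Only the endpoint labels, "Not Helpful" and "Very Helpful", come from the interface description. The two middle labels and the mapping itself are assumptions.

```python
# Hypothetical labels for the four-level scale; only the endpoints appear in the UI text.
RATING_LEVELS = ["Not Helpful", "Somewhat Helpful", "Helpful", "Very Helpful"]

def rating_to_reward(label: str) -> float:
    """Map a rating label to an evenly spaced reward in [-1.0, 1.0]."""
    index = RATING_LEVELS.index(label)  # raises ValueError for unknown labels
    return -1.0 + 2.0 * index / (len(RATING_LEVELS) - 1)

print([round(rating_to_reward(level), 3) for level in RATING_LEVELS])
# [-1.0, -0.333, 0.333, 1.0]
```

Absolute ratings like these can also be turned into preference pairs for reward-model training. To do so, compare the ratings of two enhancements of the same input text.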

Your Text

- Provide complete sentences or paragraphs for better context

- The bot works best on text between 50 and 500 words

- For specialized content, mention the target audience or purpose

- Your feedback helps the bot improve over time
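The 50-to-500-word guideline could be checked before text is sent for enhancement, with the result shown to the user as a hint. Counting words by splitting on whitespace is a simplifying assumption: hyphenated words and numbers each count as one word, and the message wording is hypothetical.

```python
MIN_WORDS, MAX_WORDS = 50, 500  # range suggested in the usage tips

def check_length(text: str) -> tuple[bool, str]:
    """Return (ok, message) describing whether text falls in the suggested range."""
    count = len(text.split())
    if count < MIN_WORDS:
        return False, f"{count} words; at least {MIN_WORDS} gives the bot more context."
    if count > MAX_WORDS:
        return False, f"{count} words; consider splitting text longer than {MAX_WORDS} words."
    return True, f"{count} words"
```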

Enhanced Text

| Category | Before | After |
|---|---|---|
| Grammar | | |
| Vocabulary | | |
| Clarity | | |
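To fill the Before and After columns, the interface needs to know which parts of the text changed. This minimal sketch uses Python's standard-library `difflib` to list word-level replacements, insertions and deletions. Attributing each change to Grammar, Vocabulary or Clarity would need a separate classifier, which is not shown.

```python
import difflib

def word_changes(before: str, after: str) -> list[tuple[str, str, str]]:
    """Return (operation, before_words, after_words) for each changed span."""
    a, b = before.split(), after.split()
    matcher = difflib.SequenceMatcher(a=a, b=b)
    changes = []
    for op, i1, i2, j1, j2 in matcher.get_opcodes():
        if op != "equal":  # skip unchanged runs of words
            changes.append((op, " ".join(a[i1:i2]), " ".join(b[j1:j2])))
    return changes

print(word_changes("Their going to the store tomorow",
                   "They're going to the store tomorrow"))
```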

Conclusion

Reinforcement Learning from Human Feedback represents a powerful approach for aligning generative AI models with human preferences and values. All three major cloud providers offer robust solutions for implementing RLHF, each with unique strengths:

- AWS provides the most comprehensive machine learning ecosystem with strong enterprise integration

- GCP offers excellent integration with other Google AI services and potentially lower costs

- Azure provides seamless integration with Microsoft’s productivity stack and strong enterprise features

The optimal choice depends on your specific requirements, existing cloud infrastructure, budget constraints, and team expertise. Regardless of the platform chosen, implementing RLHF can significantly improve the alignment, safety, and usefulness of your generative AI applications.