This guide walks through implementing a conversational bot with OpenAI’s GPT models on AWS, GCP, and Azure, with code examples and cost comparisons.

Understanding GPT Models

OpenAI’s Generative Pre-trained Transformer (GPT) models represent some of the most advanced language models available today. These models are trained on vast amounts of text data and can generate human-like text, translate languages, write different kinds of creative content, and answer questions in an informative way.

Key GPT model variations include:

- GPT-3.5 Turbo (the model behind the original ChatGPT)

- GPT-4

- GPT-4 Turbo

- GPT-4o

Each model varies in capabilities, token limits, and cost structures.

Word Up! Bot: A Conversational Assistant

Let’s create a “Word Up! Bot” – a conversational assistant that can:

- Answer questions about cloud technologies

- Generate code examples on demand

- Translate technical concepts across cloud platforms

- Summarize technical documentation

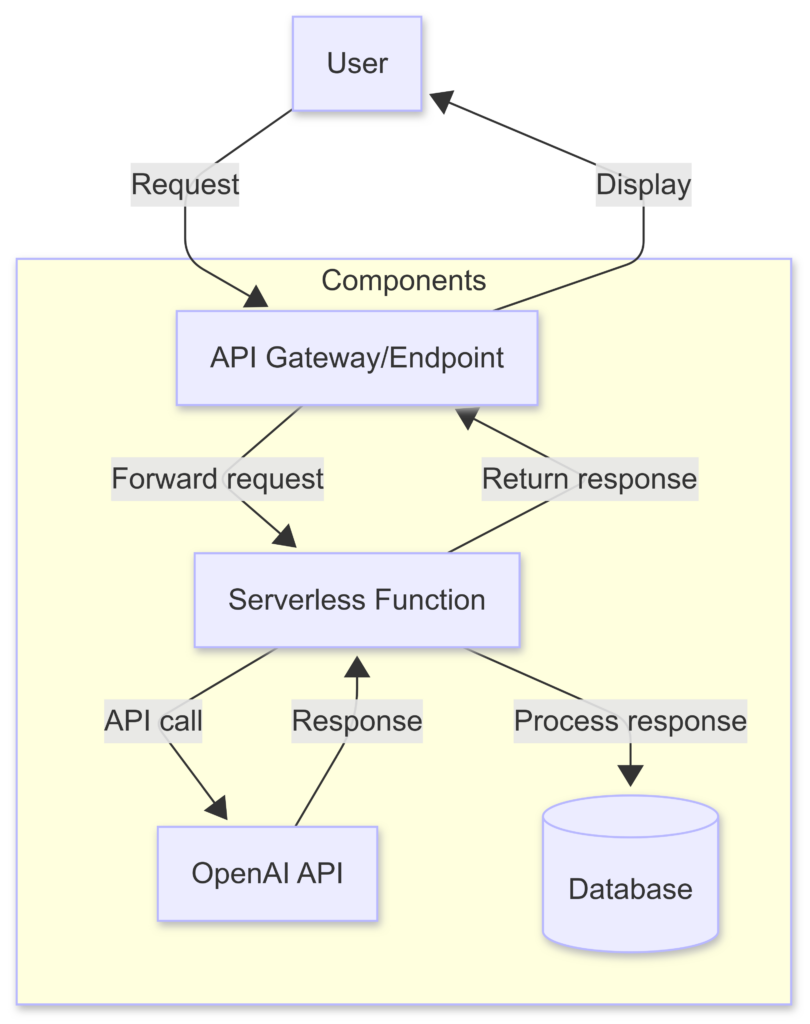

Implementation Across Cloud Platforms

AWS Implementation

AWS Lambda Function for Word Up! Bot
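A minimal sketch of what such a handler could look like, using only the Python standard library. It assumes an API Gateway proxy integration, an `OPENAI_API_KEY` environment variable, an optional `OPENAI_MODEL` variable, and a request body of the form `{"message": "..."}`; these names and the short system prompt are illustrative assumptions, not details taken from the original function.

```python
import json
import os
import urllib.request

OPENAI_URL = "https://api.openai.com/v1/chat/completions"
MODEL = os.environ.get("OPENAI_MODEL", "gpt-4-turbo")  # assumed variable name
SYSTEM_PROMPT = "You are Word Up! Bot, a cloud technology assistant for AWS, GCP, and Azure."


def call_openai(messages):
    """POST the messages to the Chat Completions endpoint and return the reply text."""
    payload = json.dumps({"model": MODEL, "messages": messages}).encode("utf-8")
    request = urllib.request.Request(
        OPENAI_URL,
        data=payload,
        headers={
            "Content-Type": "application/json",
            # Assumed: the key is injected as an environment variable.
            "Authorization": f"Bearer {os.environ['OPENAI_API_KEY']}",
        },
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


def _response(status, payload):
    """Build a response in the shape API Gateway proxy integrations expect."""
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }


def lambda_handler(event, context, complete=call_openai):
    """Handle a proxy event whose JSON body carries a "message" field.

    `complete` is an injection point so the handler can be exercised
    without network access; Lambda itself only passes event and context.
    """
    try:
        body = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        return _response(400, {"error": "Request body must be valid JSON."})
    if not isinstance(body, dict):
        return _response(400, {"error": "Request body must be a JSON object."})

    message = str(body.get("message", "")).strip()
    if not message:
        return _response(400, {"error": "Field 'message' is required."})

    messages = [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": message},
    ]
    try:
        reply = complete(messages)
    except Exception as exc:  # surface upstream failures as a gateway error
        return _response(502, {"error": f"Upstream request failed: {exc}"})
    return _response(200, {"reply": reply})
```

Keeping the OpenAI call behind a small function makes it straightforward to swap in the official SDK or to stub the call in unit tests.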

AWS CloudFormation Template for Word Up! Bot

GCP Implementation

GCP Cloud Function for Word Up! Bot

GCP Terraform for Word Up! Bot

Azure Implementation

Azure Function for Word Up! Bot

Azure Bicep Template for Word Up! Bot

Independent Implementation (Docker)

Dockerfile for Word Up! Bot

Python App for Containerized Word Up! Bot

Docker Compose for Word Up! Bot

Requirements.txt for Word Up! Bot

Frontend Implementation (example page, not connected to a backend)

Word Up! Bot

Powered by OpenAI GPT

AWS

Ask me about Amazon Web Services, Lambda, S3, EC2, and more.

GCP

Ask me about Google Cloud Platform, Cloud Functions, BigQuery, and more.

Azure

Ask me about Microsoft Azure, Functions, Cosmos DB, and more.

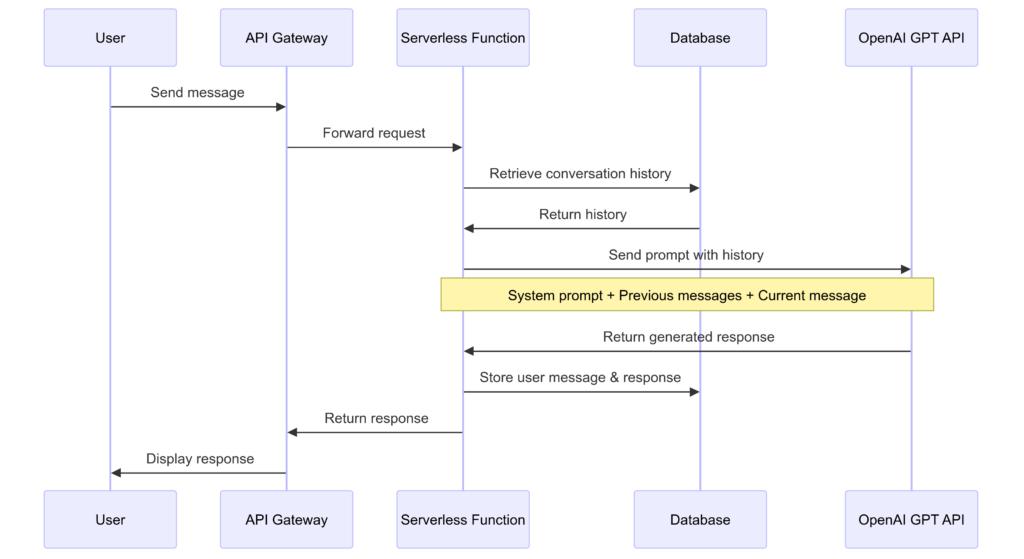

GPT Model Implementation Details

GPT Model Selection Guide

When integrating with OpenAI’s GPT models, there are several options to consider:

- GPT-3.5 Turbo

  - Best for: General purpose tasks, cost-efficiency

  - Context window: 16K tokens

  - Cost: ~$0.0015 per 1K tokens (input), ~$0.002 per 1K tokens (output)

- GPT-4 Turbo

  - Best for: Complex reasoning, advanced capabilities

  - Context window: 128K tokens

  - Cost: ~$0.01 per 1K tokens (input), ~$0.03 per 1K tokens (output)

- GPT-4o

  - Best for: Multimodal tasks (text + vision)

  - Context window: 128K tokens

  - Cost: ~$0.005 per 1K tokens (input), ~$0.015 per 1K tokens (output)

For the Word Up! Bot, GPT-4 Turbo provides the best balance between capabilities and cost for cloud technology discussions.
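The per-token prices listed above can be turned into a per-request estimate with a few lines of arithmetic. The sketch below copies those approximate prices into a lookup table; the model identifiers used as keys and the 500 input / 750 output token counts in the usage section are illustrative assumptions.

```python
# Approximate prices in USD per 1K tokens, copied from the list above.
PRICES_PER_1K = {
    "gpt-3.5-turbo": {"input": 0.0015, "output": 0.002},
    "gpt-4-turbo": {"input": 0.01, "output": 0.03},
    "gpt-4o": {"input": 0.005, "output": 0.015},
}


def estimate_cost(model, input_tokens, output_tokens):
    """Return the estimated USD cost of one request for the given model."""
    price = PRICES_PER_1K[model]
    input_cost = (input_tokens / 1000) * price["input"]
    output_cost = (output_tokens / 1000) * price["output"]
    return input_cost + output_cost


if __name__ == "__main__":
    # Illustrative request size: 500 input tokens, 750 output tokens.
    for model in PRICES_PER_1K:
        per_request = estimate_cost(model, input_tokens=500, output_tokens=750)
        monthly = per_request * 10_000
        print(f"{model}: ${per_request:.5f} per request, ${monthly:.2f} per 10,000 requests")
```

For that request size the estimates work out to $0.00225 (GPT-3.5 Turbo), $0.0275 (GPT-4 Turbo), and $0.01375 (GPT-4o) per request. Because output tokens are priced higher than input tokens, capping response length is usually the most direct lever on cost.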

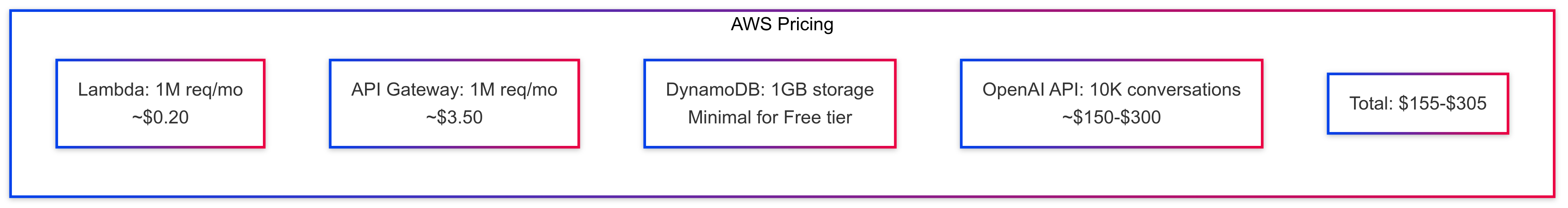

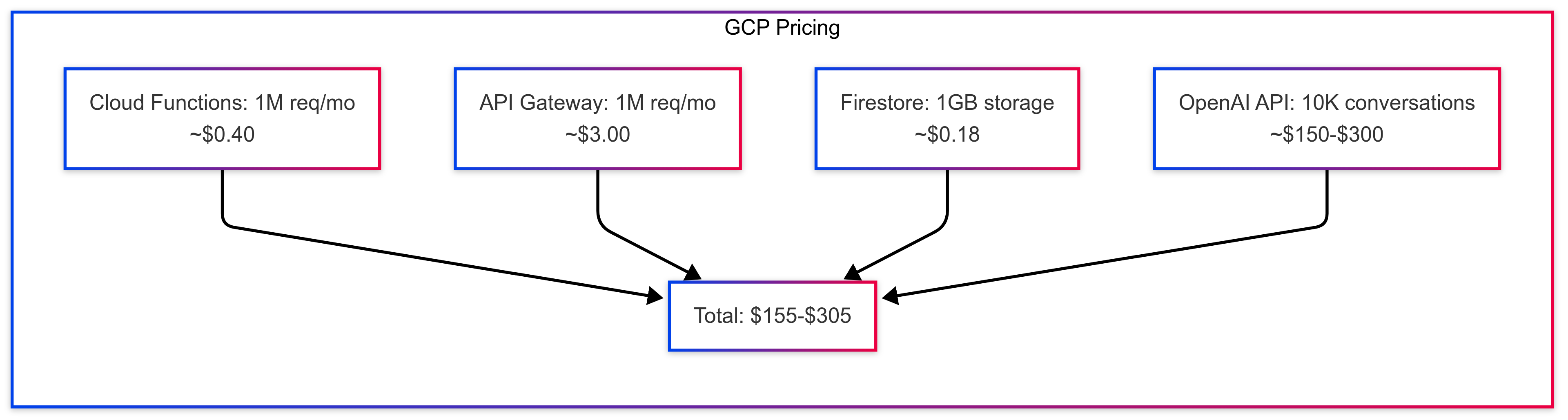

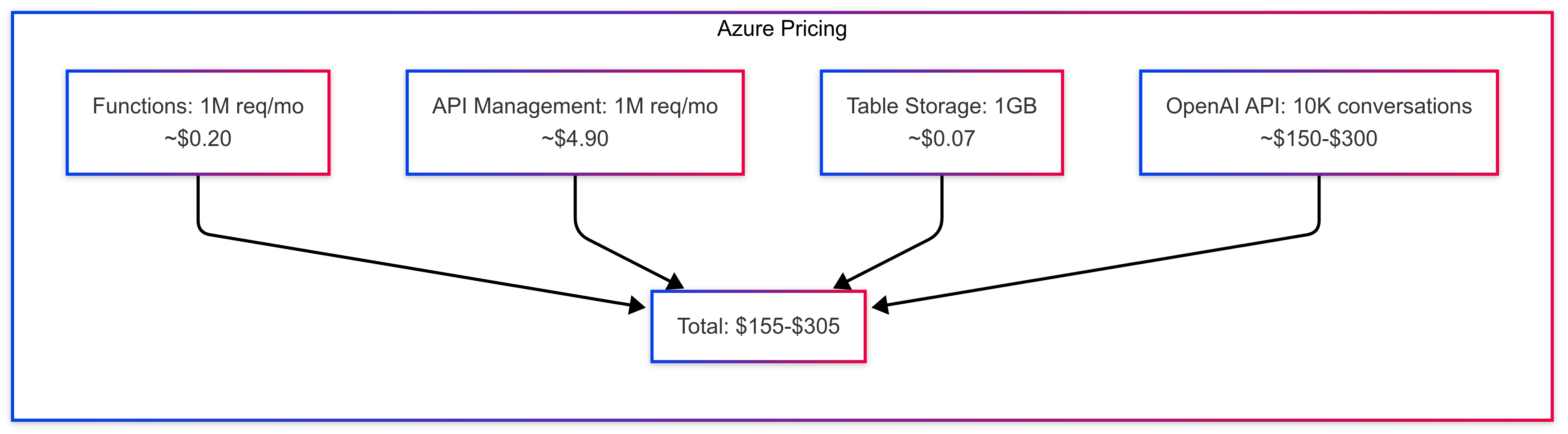

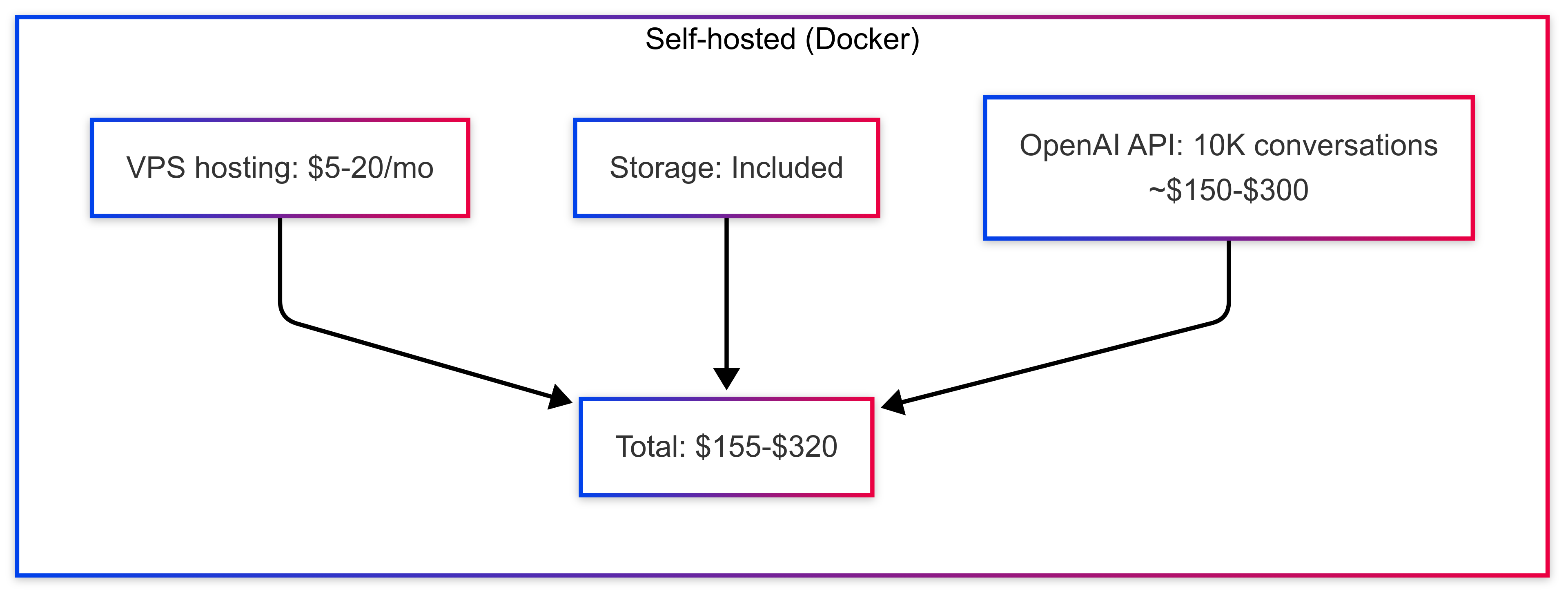

Cost Comparison Across Cloud Platforms

Cost Analysis

The cost breakdown for a Word Up! Bot with approximately 10,000 conversations per month:

- Infrastructure Costs

  - AWS: ~$4-5/month (Lambda, API Gateway, DynamoDB)

  - GCP: ~$3.60-4.50/month (Cloud Functions, API Gateway, Firestore)

  - Azure: ~$5.20-6.00/month (Functions, API Management, Table Storage)

  - Self-hosted: ~$5-20/month (VPS)

- OpenAI API Costs (dominate total cost)

  - Average cost per conversation (~500 tokens input, ~750 tokens output):

    - GPT-3.5 Turbo: $0.002 per conversation ($20/month)

    - GPT-4 Turbo: $0.03 per conversation ($300/month)

    - GPT-4o: $0.015 per conversation ($150/month)

- Key Differences

  - AWS offers the strongest free tier and easier scalability

  - GCP provides slightly lower storage costs

  - Azure has integrated OpenAI options but higher API management costs

  - Self-hosted offers more control but requires maintenance

- Recommendation

  - For smaller implementations: Start with AWS due to free tier benefits

  - For integration with existing cloud services: Match your current provider

  - For cost optimization: Use GPT-3.5 for simple queries, GPT-4 for complex ones
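The cost optimization recommendation (a cheaper model for simple queries, a stronger one for complex ones) could be sketched as a small router. The keyword list, word-count threshold, and model identifiers below are hypothetical placeholders; a production router would likely need a better complexity signal than keyword matching.

```python
CHEAP_MODEL = "gpt-3.5-turbo"
CAPABLE_MODEL = "gpt-4-turbo"

# Hypothetical signals that a question needs deeper reasoning.
COMPLEX_KEYWORDS = ("architecture", "migrate", "compare", "design", "troubleshoot")
MAX_SIMPLE_WORDS = 40  # assumed cutoff; longer questions go to the stronger model


def choose_model(query: str) -> str:
    """Pick a model name based on a rough estimate of query complexity."""
    text = query.lower()
    too_long = len(text.split()) > MAX_SIMPLE_WORDS
    has_keyword = any(keyword in text for keyword in COMPLEX_KEYWORDS)
    return CAPABLE_MODEL if (too_long or has_keyword) else CHEAP_MODEL


if __name__ == "__main__":
    for question in (
        "What is S3?",
        "Compare Lambda and Cloud Functions for an event-driven pipeline.",
    ):
        print(f"{choose_model(question)} <- {question}")
```

Since API charges dominate the total, even a rough router like this can shift a large share of traffic to the cheaper model.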

Advanced Features and Customizations

Prompt Engineering for Cloud Discussions

Enhance your Word Up! Bot with specialized system prompts:

system_prompt = """

You are Word Up! Bot, a specialized cloud technology assistant focused on AWS, GCP, and Azure.

For each cloud provider question:

1. Explain the service/concept clearly

2. Provide practical code examples when relevant

3. Compare to equivalent services on other cloud platforms

4. Note important pricing considerations

5. Address security best practices

Your specialty is helping users understand cloud services across platforms.

"""

Adding Custom Knowledge Base

Implement a vector database to store specialized knowledge:

Vector Database Integration
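A minimal in-memory sketch of the retrieval idea, assuming no particular vector database product. The `embed` function here is a toy bag-of-words stand-in for a real embedding model, and `InMemoryVectorStore` and `build_prompt` are hypothetical names introduced for illustration; a real deployment would replace both the embedding step and the store with managed services.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words term count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(value * value for value in a.values()))
    norm_b = math.sqrt(sum(value * value for value in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryVectorStore:
    """Stores (text, vector) pairs and returns the closest texts for a query."""

    def __init__(self):
        self._items = []

    def add(self, text):
        self._items.append((text, embed(text)))

    def search(self, query, top_k=2):
        query_vector = embed(query)
        scored = [(cosine_similarity(query_vector, vector), text) for text, vector in self._items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        # Drop snippets that share nothing with the query.
        return [text for score, text in scored[:top_k] if score > 0]


def build_prompt(question, store):
    """Prepend retrieved snippets to the question before sending it to the model."""
    context = "\n".join(f"- {snippet}" for snippet in store.search(question))
    return f"Use the context to answer.\n\nContext:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    store = InMemoryVectorStore()
    store.add("AWS Lambda runs code without provisioning servers")
    store.add("BigQuery is a serverless data warehouse on GCP")
    store.add("Azure Cosmos DB is a globally distributed database")
    print(build_prompt("What is BigQuery?", store))
```

The retrieved snippets are placed in the prompt so that the model answers from the knowledge base instead of relying only on its training data.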

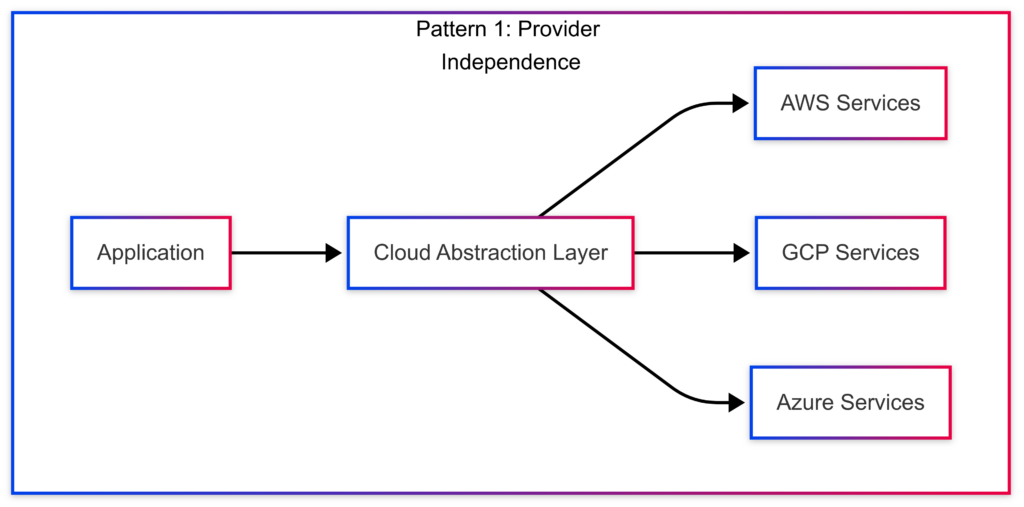

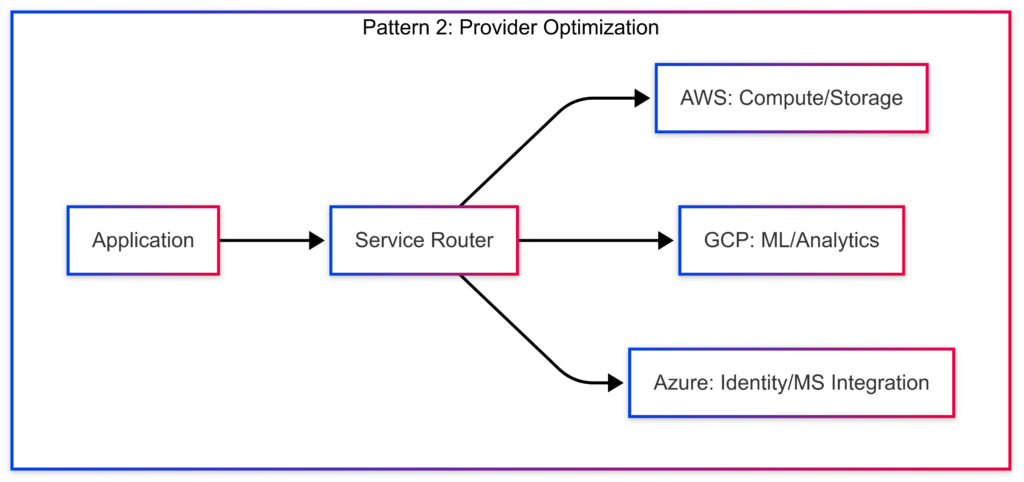

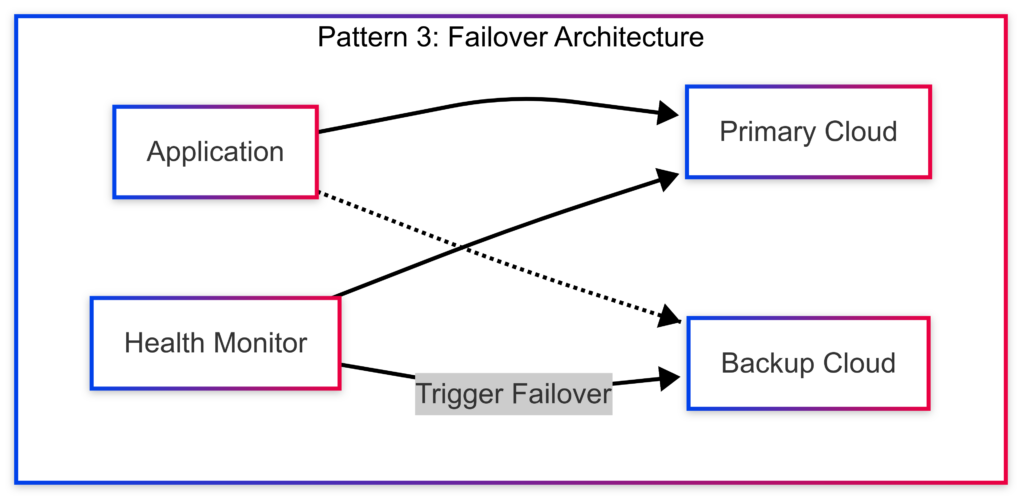

Multi-Cloud Design Patterns

Security Best Practices

OpenAI API Security

- API Key Management

  - AWS: Use Secrets Manager

  - GCP: Use Secret Manager

  - Azure: Use Key Vault

  - All: Rotate keys regularly

- Input Validation

  - Implement rate limiting

  - Validate and sanitize all user inputs

  - Apply token limits to prevent abuse

- Content Filtering

  - Implement pre-processing filters for harmful inputs

  - Use OpenAI’s moderation API

  - Have clear escalation processes for problematic content
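The input validation items above could be sketched as follows. The character limit, bucket capacity, and refill rate are assumed values chosen for illustration, and the in-memory bucket only works within a single process; serverless deployments would typically need a shared store or the platform's own throttling features.

```python
import time

MAX_INPUT_CHARS = 4000    # assumed limit; tune for your use case
MAX_OUTPUT_TOKENS = 750   # can be sent as max_tokens on the completion request


def validate_message(raw):
    """Return a cleaned message, or raise ValueError if it should be rejected."""
    if not isinstance(raw, str):
        raise ValueError("Message must be a string.")
    # Drop control characters other than newline and tab, then trim whitespace.
    cleaned = "".join(ch for ch in raw if ch.isprintable() or ch in "\n\t").strip()
    if not cleaned:
        raise ValueError("Message is empty.")
    if len(cleaned) > MAX_INPUT_CHARS:
        raise ValueError(f"Message exceeds {MAX_INPUT_CHARS} characters.")
    return cleaned


class TokenBucket:
    """Per-user rate limiter: up to `capacity` requests, refilled over time."""

    def __init__(self, capacity=5, refill_per_second=0.5, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock  # injectable so the limiter can be tested deterministically
        self._buckets = {}  # user_id -> (tokens, last_seen)

    def allow(self, user_id):
        """Return True and consume one token if the user is within the limit."""
        now = self.clock()
        tokens, last_seen = self._buckets.get(user_id, (self.capacity, now))
        tokens = min(self.capacity, tokens + (now - last_seen) * self.refill_per_second)
        if tokens < 1:
            self._buckets[user_id] = (tokens, now)
            return False
        self._buckets[user_id] = (tokens - 1, now)
        return True
```

A request handler would call `bucket.allow(user_id)` first, then `validate_message(...)`, and only then forward the cleaned text to the model with `max_tokens` set to `MAX_OUTPUT_TOKENS`.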

Cloud-Specific Security

Security Configuration

Conclusion and Next Steps

Word Up! Bot provides a powerful way to engage with cloud technology information using OpenAI’s GPT models. The implementation across AWS, GCP, and Azure demonstrates the flexibility of integrating AI assistants with cloud infrastructure.

For optimal results:

- Choose the GPT model that balances capability with cost for your specific use case

- Start with a cloud provider that aligns with your existing infrastructure

- Implement robust security measures, especially for API key management

- Consider adding a knowledge base for specialized cloud documentation

- Monitor and optimize costs as usage increases

Word Up! Bot can also be extended with additional features such as:

- Support for multi-turn conversations

- User feedback loops for improvement

- Domain-specific knowledge augmentation

- Integration with cloud provider documentation

- Cost optimization based on query complexity

With these additions, it becomes an even more powerful cloud technology assistant that helps users understand and implement multi-cloud strategies.