Have you ever wondered how your smartphone recognizes your face, how Netflix predicts what movie you’ll love next, or how self-driving cars navigate busy streets? The answer lies in neural networks, the powerhouse behind much of modern artificial intelligence (AI). These systems are loosely inspired by the way our brains work, and they let machines learn from data and make decisions. Whether you’re new to tech or an IT pro exploring AI’s foundations, this blog post will take you on a journey from the very basics of neural networks to their real-world magic, all in simple, engaging language. At TowardsCloud, we’re passionate about making complex topics fun and accessible, so let’s dive in with real-world examples, diagrams, and a few surprises along the way!

What is a Neural Network?

At its core, a neural network is a set of algorithms designed to find patterns and relationships in data, much like how our brain processes information. Think of it as a digital brain: it takes in inputs (like an image or a sentence), processes them, and produces an output (like identifying a cat or translating a phrase).

The Brain Analogy

Imagine your brain as a network of tiny workers, called neurons, passing messages to each other. When you see a dog, some neurons respond to its fur, others to its ears, and together they shout, “It’s a dog!” A neural network works in a similar spirit with artificial neurons, connecting and collaborating to solve problems. This flexible, brain-inspired design has made neural networks the backbone of modern AI, powering everything from voice assistants to medical diagnoses.

Real-World Example: Many email apps use neural networks to filter spam and prioritize important messages, analyzing content and sender patterns to keep your inbox organized.

Q&A: How does a neural network mimic the human brain?

Components of a Neural Network

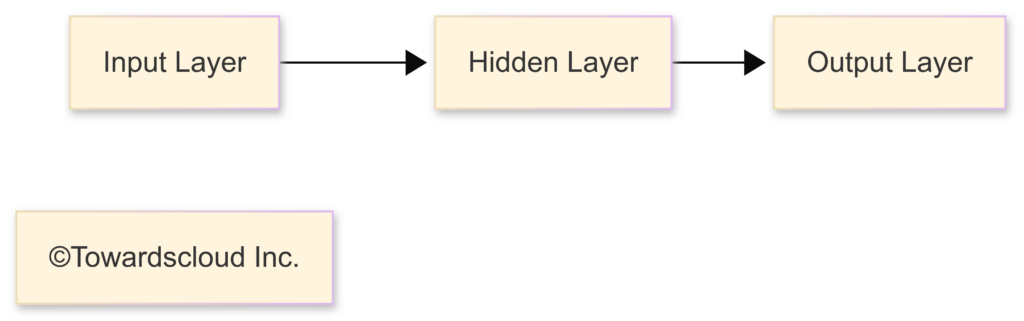

To understand how neural networks work, let’s break them down into their building blocks.

- Neurons (Nodes): A neuron is the basic unit, acting like a tiny decision-maker: it combines its inputs into a single score and passes the result on. In an email spam filter, a neuron might learn to respond strongly to words like “free” or “win”.

- Layers:

  - Input Layer: Receives raw data, like the pixel values of an image.

  - Hidden Layers: Process patterns and features.

  - Output Layer: Delivers the final answer, like “yes, it’s spam.”

- Weights and Biases: Weights control how much each input influences a neuron, while the bias shifts the neuron’s output up or down regardless of the inputs. Imagine baking a cake: ingredients (inputs) go in different amounts (weights), and a pinch of salt (bias) adjusts the final flavor. During training, the network keeps tweaking these weights and biases, a bit like a video game that adapts its difficulty based on how you play.

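- Activation Functions: These decide whether, and how strongly, a neuron “fires” by transforming its weighted sum into an output. Common types include Sigmoid (squashes values into the range 0 to 1), ReLU (passes positive values through unchanged and turns negative values into 0), and Tanh (squashes values into the range -1 to 1).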
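To make these building blocks concrete, here is a minimal Python sketch of a single neuron together with the three activation functions listed above. The spam-filter words, weights, and bias are hypothetical values picked by hand for illustration; in a real network they would be learned during training.

```python
import math


# The three common activation functions described above.
def sigmoid(z: float) -> float:
    """Squashes any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def relu(z: float) -> float:
    """Passes positive values through unchanged; clips negatives to 0."""
    return max(0.0, z)


def tanh(z: float) -> float:
    """Squashes any number into the range (-1, 1)."""
    return math.tanh(z)


def neuron(inputs, weights, bias, activation=sigmoid):
    """One artificial neuron: weighted sum of the inputs, plus the bias, then the activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)


# Hypothetical spam-filter neuron: each input is 1 if the word appears in the email, else 0.
# These weights and the bias are made up for illustration, not learned from data.
contains_free, contains_win, contains_meeting = 1, 1, 0
weights = [2.0, 1.5, -2.5]  # "free" and "win" push toward spam; "meeting" pushes away
bias = -1.0                 # baseline: lean toward "not spam" when there is no evidence

score = neuron([contains_free, contains_win, contains_meeting], weights, bias)
print(f"spam probability: {score:.2f}")  # prints "spam probability: 0.92"
```

Here the weighted sum is 2.0 + 1.5 - 1.0 = 2.5, and sigmoid(2.5) is about 0.92, so this neuron “fires” strongly. Passing `activation=relu` or `activation=tanh` instead shows how the same weighted sum maps to a different output range.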

Here’s a simple diagram to visualize the structure:

Q&A: What is the role of weights in a neural network?

Q&A: What happens if an activation function doesn’t “fire”?

How Neural Networks Work

Forward Propagation

Data flows from input to output through the network. Each neuron multiplies its inputs by their weights, adds its bias, and passes the result through an activation function; the outputs of one layer become the inputs to the next.
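Forward propagation is that single-neuron calculation repeated across every neuron, one layer at a time. The sketch below assumes fully connected layers with a sigmoid activation throughout and uses made-up weights for a tiny network with 3 inputs, 2 hidden neurons, and 1 output. The names `dense_layer` and `forward` are illustrative and do not come from any library.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases):
    """Forward pass through one fully connected layer.

    weights[j] holds the incoming weights of neuron j and biases[j] its bias.
    Every neuron sees all inputs, computes weighted sum + bias, then applies sigmoid.
    """
    return [
        sigmoid(sum(x * w for x, w in zip(inputs, neuron_weights)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Feed data through each layer in turn: one layer's outputs are the next layer's inputs."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


# Hypothetical 3-input -> 2-hidden -> 1-output network with made-up weights;
# a real network would learn these values during training.
hidden = ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1])
output = ([[1.0, -1.0]], [0.2])

result = forward([1.0, 0.5, -1.0], [hidden, output])
print(result)  # a list holding a single value between 0 and 1
```

Keeping each layer as a `(weights, biases)` pair means adding another hidden layer is just one more entry in the list passed to `forward`.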

Visualizing Data Flow: Let’s explore how data moves through a neural network with the example of recognizing a handwritten digit, such as a ‘9’.

- Input Layer: The network starts by receiving the pixel values of the image. Each pixel is a tiny dot of light or dark, and collectively, these dots sketch out the digit’s shape—like the curve of a ‘9’.

- Hidden Layers: The first hidden layer analyzes these pixels to detect basic features, such as edges or lines. For a ‘9’, it might spot the vertical stem or the top loop. The next hidden layer builds on this, combining those features to recognize more complex shapes—like the full loop connected to the tail. Each layer refines the understanding step-by-step.

- Output Layer: Finally, the output layer takes all this processed information and makes a decision. It weighs the evidence from the hidden layers and concludes, “This is most likely a ‘9’.”

This process—transforming raw pixel data into a meaningful prediction—shows how neural networks turn chaos into clarity, layer by layer.
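One common way (though not the only one) for an output layer to conclude “most likely a ‘9’” is to give each possible digit a raw score and convert those scores into probabilities with the softmax function. The ten scores below are invented for illustration, with ‘4’ as the runner-up to echo the kind of mix-up described under backpropagation.

```python
import math


def softmax(scores):
    """Turn raw output scores into probabilities that sum to 1."""
    m = max(scores)  # subtracting the max keeps math.exp from overflowing
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw scores for digits 0-9 from an output layer (made-up numbers).
scores = [0.1, 0.3, 0.2, 0.5, 2.0, 0.1, 0.0, 0.4, 0.6, 3.1]
probs = softmax(scores)

# The prediction is the digit with the highest probability.
predicted_digit = max(range(10), key=lambda d: probs[d])
print(predicted_digit, round(probs[predicted_digit], 2))
```

`predicted_digit` comes out as 9 because it has the highest score. Softmax preserves the ranking of the scores, so it changes how confident the answer looks, not which digit wins.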

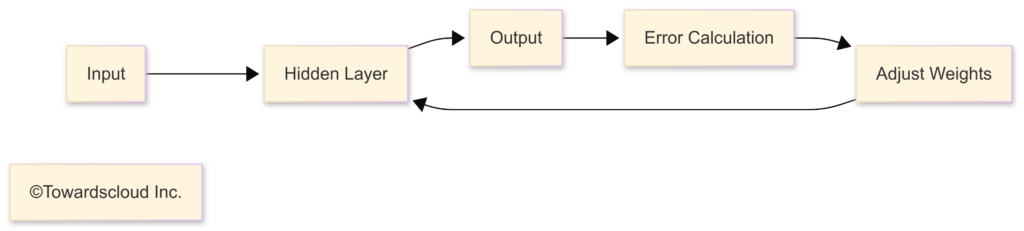

Backpropagation and Learning

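If the output is wrong (say, it guesses ‘4’ instead of ‘9’), the network measures the error with a loss function. Backpropagation then works backward through the layers to calculate how much each weight contributed to that error, and an optimizer such as gradient descent adjusts the weights to reduce it. Repeated over many examples, this is how the network learns from its mistakes and gets better at recognizing patterns.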
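Here is the learning loop in its simplest form: a single sigmoid neuron learning the logical AND function with gradient descent. With only one neuron, backpropagation reduces to a single application of the chain rule; in a deeper network the same error signal is passed backward layer by layer. The cross-entropy loss, the learning rate of 0.5, and the 5,000 training epochs are assumptions chosen for this toy example.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


# Training data for logical AND: the output is 1 only when both inputs are 1.
data = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]

weights = [0.0, 0.0]
bias = 0.0
learning_rate = 0.5  # assumption: a step size that works well for this toy problem

for epoch in range(5000):
    grad_w = [0.0, 0.0]
    grad_b = 0.0
    for inputs, target in data:
        # Forward pass: predict with the current weights.
        prediction = sigmoid(sum(x * w for x, w in zip(inputs, weights)) + bias)
        # Assumption: cross-entropy loss. With a sigmoid output, its gradient
        # with respect to the weighted sum simplifies to (prediction - target).
        error = prediction - target
        # Backward pass: the chain rule gives each weight's share of the error.
        for i, x in enumerate(inputs):
            grad_w[i] += error * x
        grad_b += error
    # Gradient descent: nudge every parameter against its average gradient.
    weights = [w - learning_rate * g / len(data) for w, g in zip(weights, grad_w)]
    bias -= learning_rate * grad_b / len(data)

predictions = [
    round(sigmoid(sum(x * w for x, w in zip(inputs, weights)) + bias))
    for inputs, _ in data
]
print(predictions)
```

After training, `predictions` matches the AND targets `[0, 0, 0, 1]`. Each update is proportional to the error, so examples the neuron already gets nearly right barely move the weights, while wrong answers push them hardest.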

Here’s the process in a flowchart:

Real-World Example: When you browse online, neural networks predict ads you’ll like (forward propagation), and your clicks become feedback used to update their weights during training (backpropagation). For instance, if you click on hiking gear ads but skip fashion ones, the network learns to prioritize outdoor products for you.

Q&A: Why is forward propagation important?

Types of Neural Networks

- Feedforward Neural Networks: Data flows one way, from input to output. Supermarkets can use them to predict inventory needs from past sales data, for example stocking extra ice cream during a heatwave.

- Convolutional Neural Networks (CNNs): Built for images, CNNs excel at recognizing visual patterns. Some security camera systems use them to spot suspicious behavior, like someone loitering in a crowd.

- Recurrent Neural Networks (RNNs): Perfect for sequences, RNNs remember past inputs. Weather apps leverage them to forecast rain by analyzing trends in temperature and humidity over time.
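The core operation inside a CNN is convolution: a small grid of weights (a kernel, or filter) slides across the image and produces a “feature map” showing where a pattern appears. This pure-Python sketch applies a hand-picked vertical-edge kernel to a made-up 5x5 image; real CNNs learn many kernels from data and rely on optimized libraries rather than nested loops.

```python
def convolve2d(image, kernel):
    """Slide a kernel over an image ("valid" mode: no padding, stride 1).

    Each output value is the sum of element-wise products between the kernel
    and the image patch underneath it. Like most deep-learning libraries, this
    does not flip the kernel (strictly speaking, it is cross-correlation).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


# A made-up 5x5 grayscale "image": dark (0) on the left, bright (1) on the right.
image = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]

# Hand-picked vertical-edge detector; a real CNN would learn its kernels from data.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = convolve2d(image, kernel)
for row in feature_map:
    print(row)
```

Every row of `feature_map` is `[3, 3, 0]`: the kernel responds strongly where the dark-to-bright edge sits under it and gives 0 over the uniformly bright region. Because the same small kernel is reused at every position, a CNN can detect a pattern wherever it appears in the image.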

| Type | Description | Use Cases |
|---|---|---|
| Feedforward | Data flows one way | Classification, regression |
| CNN | For grid-like data (e.g., images) | Image recognition |
| RNN | For sequential data | NLP, time series |
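An RNN “remembers” through a hidden state that it feeds back in at every step, alongside the new input. The sketch below is a deliberately minimal single-unit RNN with fixed, made-up weights (`w_x`, `w_h`, `b` are hypothetical values); real RNNs use vectors and weight matrices learned from data.

```python
import math


def rnn_step(x, h_prev, w_x, w_h, b):
    """One step of a minimal single-unit RNN: mix the new input with the previous memory."""
    return math.tanh(w_x * x + w_h * h_prev + b)


def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Process a sequence one item at a time, carrying the hidden state forward."""
    h = 0.0  # the "memory" starts empty
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states


# Two made-up sequences that end with the same input but have different histories.
with_history = run_rnn([1.0, 1.0, 1.0, 0.0])
no_history = run_rnn([0.0, 0.0, 0.0, 0.0])
print(with_history[-1], no_history[-1])
```

Both sequences end with the same input (0.0), yet the final states differ: the run with earlier non-zero inputs still carries a positive memory, while the all-zero run stays at 0. That dependence on history is what suits RNNs to sequences like weather readings or sentences.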

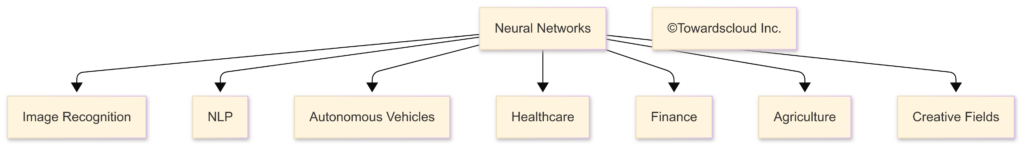

Applications of Neural Networks

Neural networks are transforming industries in ways both practical and imaginative. Here are some standout examples:

- Image and Speech Recognition: CNNs power facial recognition in smartphones (unlocking your phone with a glance), while neural networks in voice assistants like Siri transcribe your spoken commands so they can be turned into action, making tech seamless.

- Natural Language Processing (NLP): RNNs and Transformers drive chatbots (like customer service bots), translate languages instantly (e.g., Google Translate), and even generate text, such as auto-completing your emails.

- Autonomous Vehicles: Neural networks process sensor data (cameras, radar, LIDAR) to detect pedestrians, traffic lights, and road signs, helping a self-driving car brake for a jaywalker or navigate a busy intersection.

- Healthcare: They analyze X-rays to flag possible tumors for radiologists to review, helping speed up diagnosis, or predict heart attack risks by studying patient histories, transforming diagnostics.

- Finance: Neural networks detect fraudulent credit card charges by flagging unusual spending patterns—like a sudden spree in a foreign country—or forecast stock trends to guide investments.

- Agriculture: Farmers use neural networks to monitor crops via drone imagery, soil sensors, and weather data. For instance, they predict corn yields, spot early signs of blight on tomato plants, or suggest the best day to harvest wheat, boosting efficiency and sustainability.

- Creative Fields: AI is redefining creativity. Neural networks analyze thousands of paintings to generate art (like a new Monet-style landscape), compose music by learning from Beethoven’s symphonies, or write short stories with coherent plots—think of an AI crafting a sci-fi tale about sentient robots.

Here’s a mind map of these applications:

Q&A: Which application likely uses a neural network to understand spoken commands?

Let’s Hear From You: Share in the comments—which neural network application excites you the most: healthcare, finance, agriculture, or art?

Challenges and Future of Neural Networks

Challenges

- Overfitting: The network might memorize training data—like a student cramming for a test—but struggle with new data, like a pop quiz.

- Interpretability: Figuring out why a network flagged your loan application as risky can be a mystery, limiting trust in critical decisions.

The Future

Research into explainable AI aims to make decisions transparent (e.g., “It flagged the loan due to high debt”), while more efficient models promise faster training and quicker, lighter predictions; think real-time translation running directly on your phone.

Getting Started with Neural Networks on Cloud Platforms

Ready to build your own neural network? Cloud platforms like Amazon SageMaker, Google Cloud’s Vertex AI, and Azure Machine Learning offer user-friendly tools. Stay tuned for our next blog on “Building Neural Networks on AWS, GCP, and Azure”!

Conclusion

Neural networks are the beating heart of AI, turning raw data into incredible feats—recognizing voices, driving cars, diagnosing diseases, and even painting masterpieces. We’ve explored their building blocks (neurons and layers), how they learn (forward and backpropagation), and their vast applications, all in a way that’s simple and fun. At TowardsCloud, we’re here to guide you through tech’s wonders, so stick around for more insights on AWS, GCP, Azure, and beyond.

Reflect: Before you go, take a moment to think—on a scale of 1 to 10, how would you rate your understanding of neural networks now? Share your rating in the comments and see how others feel!

FAQ

- What’s the difference between AI, machine learning, and neural networks?

AI is the big umbrella: making machines smart. Machine learning is a subset where machines learn from data. Neural networks are a specific type of machine learning model inspired by the brain.

- Do I need advanced math to understand neural networks?

Not at all! While calculus and linear algebra power them under the hood, you can grasp the basics with curiosity and examples, like the ones in this blog.

- How are neural networks different from traditional algorithms?

Traditional algorithms follow fixed, hand-written rules; neural networks learn patterns from data, which helps them tackle complex, unpredictable tasks.

- Can neural networks think like humans?

No. They’re powerful pattern-finders but lack human awareness or creativity beyond their training.

That’s it for “Understanding Neural Networks: The Backbone of AI”! Whether you’re an IT pro or just starting out, we hope this guide sparked your interest. See you tomorrow for more tech adventures on TowardsCloud.com!