Unlocking the Magic: Key Definitions and Concepts in Generative AI

Generative AI. It sounds like something straight out of a science fiction movie, doesn’t it? Robots painting masterpieces, computers composing symphonies, algorithms dreaming up new worlds. While it might seem like magic, the reality is even more fascinating. Generative AI is rapidly changing the world around us, from the art we consume to the way we work, and even how we understand ourselves.

But what is it, really? And how does it work? This blog post is your friendly, jargon-busting guide to the core concepts and definitions that underpin this revolutionary technology. We’ll break down the complex ideas into bite-sized pieces, use real-world examples, and even visualize things with some cool diagrams. No Ph.D. in computer science required!

Why Should You Care About Generative AI?

Before we dive into the technical details, let’s talk about why you should even bother learning about this stuff. Generative AI isn’t just a niche topic for tech enthusiasts; it’s becoming increasingly relevant to everyone. Here’s why:

- It’s Everywhere (and Will Be Even More So): From the auto-suggestions in your email to the filters on your favorite social media apps, Generative AI is already subtly influencing your daily life. And this is just the beginning.

- It’s Changing Industries: Generative AI is poised to disrupt numerous industries, including art, music, writing, design, marketing, pharmaceuticals, and even coding. Understanding its capabilities can help you adapt and thrive in a changing job market.

- It’s Empowering Creativity: Generative AI tools can be powerful partners for creative expression. Whether you’re a professional artist or a casual hobbyist, these tools can help you explore new ideas and push the boundaries of your imagination.

- It’s Shaping the Future: Generative AI raises profound questions about the nature of creativity, intelligence, and what it means to be human. Understanding these concepts will help you engage in informed discussions about the ethical and societal implications of this technology.

- It’s Powering Innovative Solutions: The rapid growth of Generative AI is opening up major opportunities in cloud technology.

So, buckle up and get ready to explore the exciting world of Generative AI!

1. The Foundation: Artificial Intelligence (AI)

Before we can understand Generative AI, we need to grasp the basics of Artificial Intelligence (AI) itself.

AI, in a nutshell, is the ability of a computer or machine to mimic human intelligence. This includes tasks like:

- Learning: Acquiring information and rules for using that information.

- Reasoning: Using the rules to reach conclusions (either definite or approximate).

- Problem-solving: Finding solutions to complex challenges.

- Perception: Understanding and interpreting sensory input (like images or sounds).

- Language understanding: Comprehending and responding to human language.

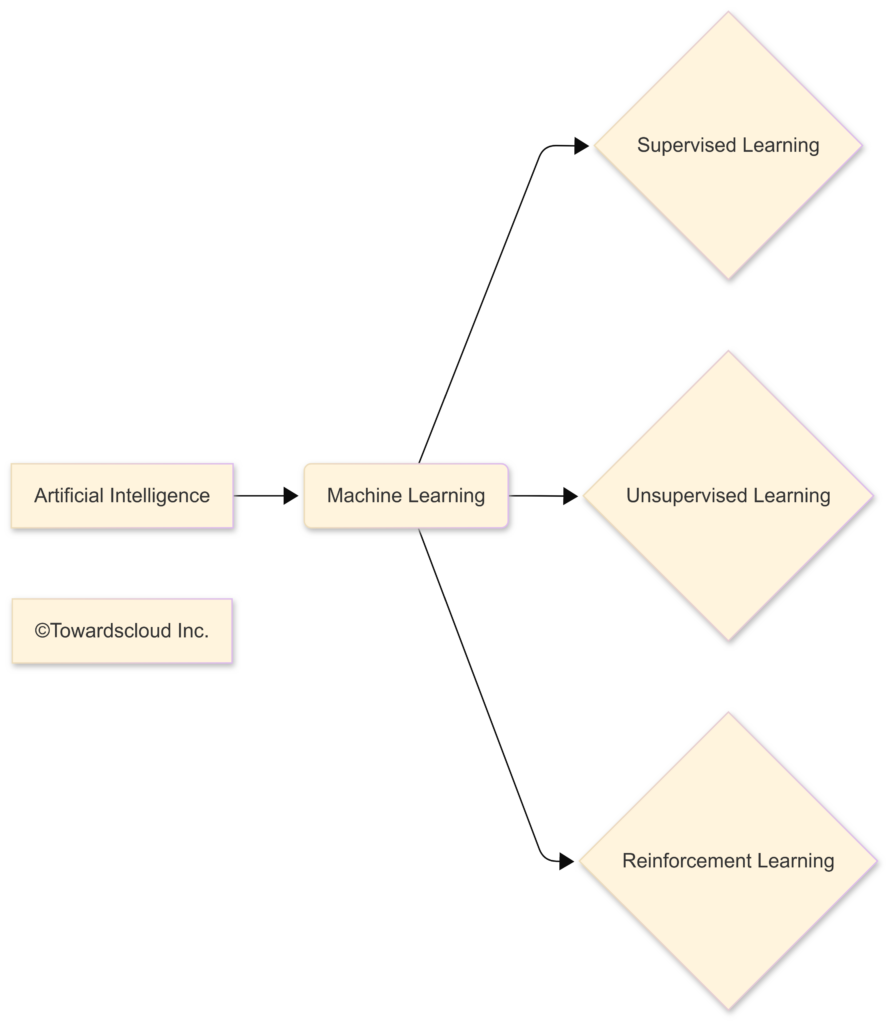

Think of AI as a broad umbrella, and under that umbrella, we find many different approaches and techniques. One of those techniques is Machine Learning.

2. Machine Learning (ML): Learning from Data

Machine Learning (ML) is a specific type of AI where computers learn from data without being explicitly programmed. Instead of a programmer writing out every single rule, the machine learns the rules itself by analyzing vast amounts of data.

Real-life Analogy: Imagine you’re teaching a child to identify cats. You wouldn’t give them a detailed list of every possible cat characteristic (fur color, eye shape, tail length, etc.). Instead, you’d show them lots of pictures of cats, and they’d gradually learn to recognize the patterns that define “cat-ness.” Machine learning works similarly.

There are three main types of machine learning:

- Supervised Learning: The machine is trained on labeled data, meaning each data point has a corresponding “correct answer.” (Example: Showing a machine thousands of pictures labeled “cat” or “not cat”.)

- Unsupervised Learning: The machine is given unlabeled data and must find patterns and structures on its own. (Example: Giving a machine a dataset of customer purchase histories and asking it to identify distinct customer groups.)

- Reinforcement Learning: The machine learns through trial and error, receiving rewards for correct actions and penalties for incorrect ones. (Example: Training a robot to navigate a maze by rewarding it for reaching the exit.)

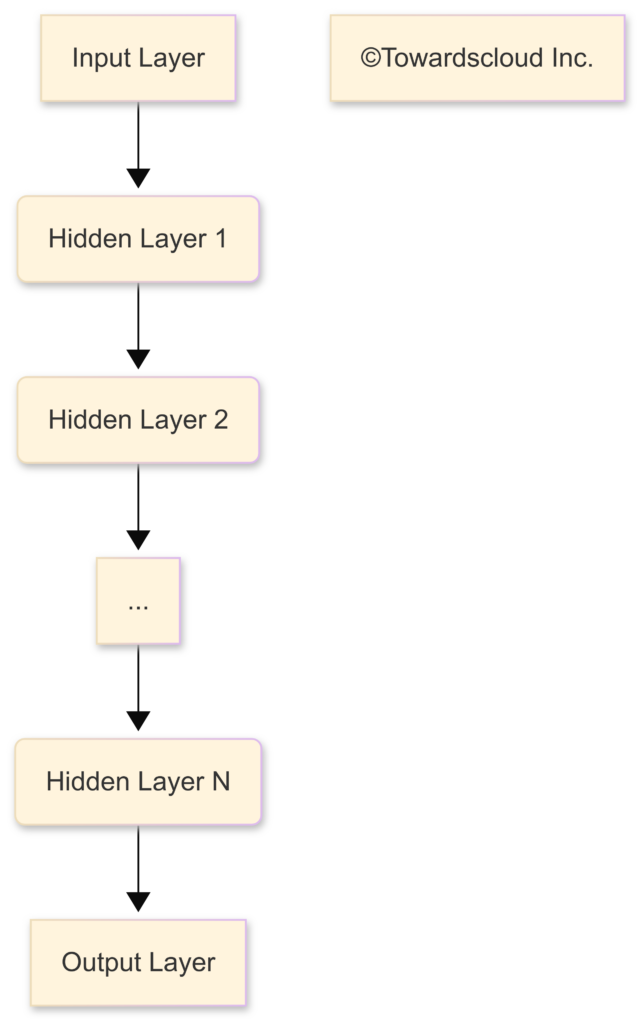
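To make supervised learning a little more concrete, here is a minimal sketch of the "cat" vs. "not cat" idea. It is a toy, not how production systems work: the two features (ear pointiness and whisker length) and all the numbers are made up for illustration, and the "learning" is simply remembering labeled examples and copying the label of the closest one (a 1-nearest-neighbor classifier).

```python
import math

# Labeled training data: (features, label). The features are invented
# measurements (ear pointiness, whisker length) used only for illustration.
training_data = [
    ((0.9, 0.8), "cat"),
    ((0.8, 0.9), "cat"),
    ((0.2, 0.1), "not cat"),
    ((0.1, 0.3), "not cat"),
]

def predict(features):
    """Return the label of the closest training example (1-nearest neighbor)."""
    _, nearest_label = min(
        training_data,
        key=lambda example: math.dist(example[0], features),
    )
    return nearest_label

print(predict((0.85, 0.75)))  # closest to the "cat" examples
print(predict((0.15, 0.2)))   # closest to the "not cat" examples
```

Notice that nobody wrote a rule like "pointy ears means cat." The answer comes entirely from the labeled examples, which is the core idea behind supervised learning.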

3. Deep Learning (DL): The Power of Neural Networks

Deep Learning (DL) is a subset of Machine Learning that uses artificial neural networks with multiple layers (hence “deep”) to analyze data. These neural networks are inspired by the structure and function of the human brain.

Real-life Analogy: Imagine a complex assembly line. Each worker on the line performs a simple task, and the output of one worker becomes the input for the next. Deep learning networks work similarly, with each layer of the network extracting increasingly abstract features from the data.

- Neurons: The basic building blocks of a neural network. Each neuron receives input, performs a simple calculation, and produces an output.

- Layers: Neurons are organized into layers. The input layer receives the initial data, the output layer produces the final result, and the hidden layers in between perform the complex processing.

- Weights and Biases: These are adjustable parameters that control the strength of connections between neurons and influence the network’s output. The learning process involves adjusting these weights and biases to improve the network’s accuracy.
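The three building blocks above can be sketched in a few lines of plain Python. This is only an illustration: the weights and biases are hand-picked numbers (a real network learns them during training), and the sigmoid activation is one common choice among several.

```python
import math

def sigmoid(x):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def neuron(inputs, weights, bias):
    """One neuron: weighted sum of the inputs plus a bias, then an activation."""
    total = sum(i * w for i, w in zip(inputs, weights)) + bias
    return sigmoid(total)

def layer(inputs, weight_rows, biases):
    """One layer: several neurons that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

# Illustrative, hand-picked parameters; a real network learns these values.
hidden_weights = [[0.5, -0.4], [0.3, 0.8]]
hidden_biases = [0.0, -0.1]
output_weights = [[1.2, -0.7]]
output_biases = [0.05]

x = [1.0, 2.0]                                          # input layer
hidden = layer(x, hidden_weights, hidden_biases)        # hidden layer
output = layer(hidden, output_weights, output_biases)   # output layer
print(hidden, output)
```

Each layer's output becomes the next layer's input, just like the assembly line in the analogy. "Learning" means nudging the numbers in the weight and bias lists until the final output is closer to what we want.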

4. Generative AI: Creating Something New

Now we finally get to the star of the show: Generative AI!

Generative AI is a type of AI that can create new content, rather than just analyzing or acting on existing data. This content can take many forms, including:

- Text: Articles, poems, scripts, code, emails, etc.

- Images: Photorealistic images, artwork, designs, etc.

- Audio: Music, speech, sound effects, etc.

- Video: Short clips, animations, even entire movies (though this is still in its early stages).

- 3D Models: Objects, environments, characters for games and simulations.

- Code: Functions, scripts, and programs in a range of programming languages.

- Data: Synthetic datasets for training other AI models.

Real-life Analogy: Think of a chef. A traditional AI might be able to analyze a recipe (existing data) and tell you its ingredients or nutritional value. A Generative AI, on the other hand, could create a brand new recipe based on its understanding of flavor combinations and cooking techniques.

Key Difference: Traditional AI is primarily focused on discriminative tasks (classifying or predicting from existing data), while Generative AI is focused on creating new data.
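To see "creation" in miniature, here is a deliberately tiny generative model. It is a sketch under heavy simplification: it only learns which character tends to follow which in one made-up sentence, then samples new text from those statistics. Modern generative models are vastly more capable, but the basic loop of learning patterns from data and then sampling something new is the same.

```python
import random
from collections import defaultdict

def train_bigram_model(text):
    """Record, for every character, which characters followed it in the text."""
    followers = defaultdict(list)
    for current, nxt in zip(text, text[1:]):
        followers[current].append(nxt)
    return followers

def generate(model, start, length, rng):
    """Sample new text one character at a time from the learned followers."""
    out = [start]
    for _ in range(length - 1):
        options = model.get(out[-1])
        if not options:          # dead end: this character was never followed
            break
        out.append(rng.choice(options))
    return "".join(out)

# A made-up training sentence, used only for illustration.
corpus = "the cat sat on the mat and the rat ran to the hat"
model = train_bigram_model(corpus)
print(generate(model, "t", 20, random.Random(0)))
```

The output is usually not a copy of the training sentence, yet every pair of neighboring characters in it was seen during training. That is the essence of generating something new from learned patterns.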

5. Key Generative AI Models and Techniques

Now, let’s explore some of the most important models and techniques used in Generative AI.

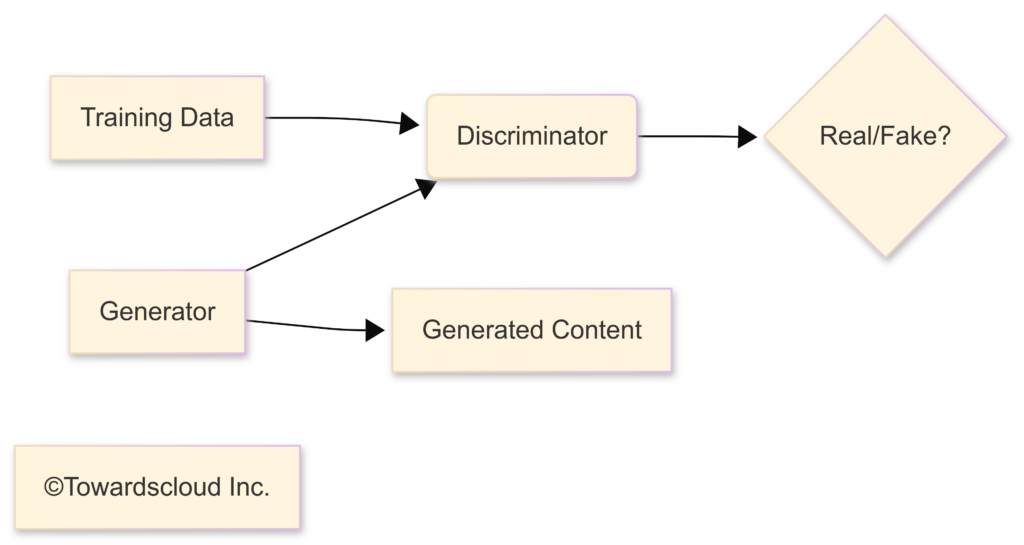

a) Generative Adversarial Networks (GANs)

GANs are a powerful and fascinating type of Generative AI model. They consist of two neural networks that work against each other (hence “adversarial”):

- Generator: This network creates new content (e.g., images).

- Discriminator: This network tries to distinguish between real content (from a training dataset) and fake content generated by the Generator.

Real-life Analogy: Imagine a forger (Generator) trying to create fake paintings, and an art expert (Discriminator) trying to spot the fakes. The forger gets better and better at creating realistic fakes, and the expert gets better and better at detecting them. This constant competition drives both networks to improve, ultimately resulting in the Generator being able to create incredibly realistic output.

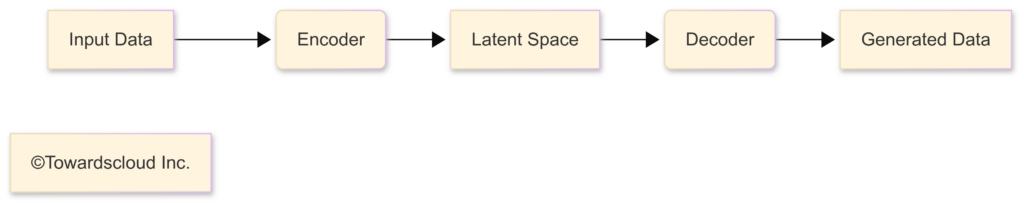
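The forger-versus-expert competition can be expressed as two scores that pull in opposite directions. The sketch below assumes the standard binary cross-entropy formulation with the commonly used "non-saturating" generator loss; the post itself does not specify a loss, and the discriminator scores are invented numbers rather than outputs of a trained network.

```python
import math

def discriminator_loss(real_scores, fake_scores):
    """Low when real samples score near 1 and generated samples near 0."""
    real_term = -sum(math.log(s) for s in real_scores) / len(real_scores)
    fake_term = -sum(math.log(1.0 - s) for s in fake_scores) / len(fake_scores)
    return real_term + fake_term

def generator_loss(fake_scores):
    """Low when the discriminator scores generated samples near 1."""
    return -sum(math.log(s) for s in fake_scores) / len(fake_scores)

# Scores are the discriminator's estimated probability that a sample is real.
# These numbers are invented to show two stages of the competition.
early = {"real": [0.9, 0.95], "fake": [0.1, 0.05]}   # fakes are easy to spot
late = {"real": [0.55, 0.5], "fake": [0.5, 0.45]}    # fakes are hard to spot

for name, s in (("early", early), ("late", late)):
    print(name, discriminator_loss(s["real"], s["fake"]), generator_loss(s["fake"]))
```

Early on, the expert wins easily (low discriminator loss, high generator loss). As the forger improves, the generator's loss falls and the discriminator's rises, which is exactly the tug-of-war described above.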

b) Variational Autoencoders (VAEs)

VAEs are another type of Generative AI model that learns a compressed, lower-dimensional representation of the input data (called a “latent space”). They can then generate new data by sampling from this latent space.

Real-life Analogy: Imagine you have a huge collection of LEGO bricks. A VAE could learn to represent each LEGO creation as a set of a few key parameters (e.g., size, color, shape). It could then generate new LEGO creations by tweaking those parameters.

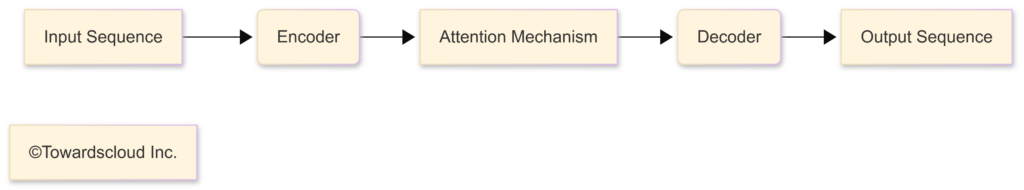
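Here is a small sketch of the "tweak the parameters" step. It assumes an encoder has already produced a mean and a log-variance for one creation, and it uses a hypothetical `toy_decoder` function as a stand-in for a trained decoder network. Only the sampling step (often called the reparameterization trick) reflects how VAEs are typically built; everything else is invented for illustration.

```python
import math
import random

def sample_latent(mean, log_var, rng):
    """Reparameterization: z = mean + std * noise, with noise ~ N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mean, log_var)]

def toy_decoder(z):
    """Hypothetical stand-in for a trained decoder network.

    Maps a 2-D latent point to three 'LEGO-like' attributes.
    """
    size, hue = z
    return {
        "width": round(4 + 2 * size, 2),
        "height": round(3 + size, 2),
        "hue_degrees": round((180 + 90 * hue) % 360, 1),
    }

rng = random.Random(42)
# Pretend an encoder produced this mean and log-variance for one creation.
mean, log_var = [0.5, -0.2], [-2.0, -2.0]

for _ in range(3):
    z = sample_latent(mean, log_var, rng)   # a nearby point in latent space
    print(toy_decoder(z))                   # a slightly different creation
```

Each sampled point lands close to the original in latent space, so each decoded creation is a variation on the same theme rather than something random.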

c) Transformers (and Large Language Models – LLMs)

Transformers are a revolutionary neural network architecture that has become the foundation for many of the most impressive Generative AI models, particularly in the field of natural language processing (NLP).

Key Innovation: The Attention Mechanism. Transformers use an “attention mechanism” that allows them to focus on the most relevant parts of the input sequence when generating output. This is crucial for understanding long-range dependencies in text (e.g., how a pronoun late in a passage refers back to a noun that appeared much earlier).
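For the curious, the heart of attention fits in a few lines. This sketch implements scaled dot-product attention for a single query using plain Python lists. The three tokens and their 2-D vectors are invented so that the pronoun "she" attends mostly to "Maria"; in a real transformer these vectors are learned and have hundreds or thousands of dimensions.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # Blend the value vectors according to how relevant each position is.
    dims = len(values[0])
    output = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dims)]
    return weights, output

# Invented 2-D vectors for three tokens; real models learn these.
tokens = ["Maria", "dropped", "she"]
keys = [[1.0, 0.0], [0.0, 1.0], [0.6, 0.2]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
query_for_she = [2.0, 0.0]   # chosen so that "she" looks mostly at "Maria"

weights, output = attention(query_for_she, keys, values)
for token, w in zip(tokens, weights):
    print(f"{token:8s} {w:.2f}")
```

The weights always add up to 1, so you can read them as "how much attention" the pronoun pays to each word. The output is a blend of the value vectors, dominated by whichever words mattered most.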

Large Language Models (LLMs): These are transformer-based models that have been trained on massive amounts of text data. They can perform a wide range of language-related tasks, including:

- Text generation: Writing articles, poems, scripts, etc.

- Translation: Converting text from one language to another.

- Summarization: Creating concise summaries of longer texts.

- Question answering: Providing answers to questions based on a given context.

- Chatbots: Engaging in conversations with users.

- Code generation: Writing code from natural-language prompts.

Examples of LLMs include:

- GPT (Generative Pre-trained Transformer) series (OpenAI): GPT-3, GPT-4, and their successors are among the most famous LLMs.

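- BERT (Bidirectional Encoder Representations from Transformers) (Google): BERT is an encoder-only model that is widely used for language-understanding tasks such as classification and search, rather than for open-ended text generation.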

- LaMDA (Language Model for Dialogue Applications) (Google): LaMDA is specifically designed for dialogue.

- PaLM (Pathways Language Model) (Google): PaLM is another very large and powerful LLM.

- LLaMA (Meta): Meta makes the weights of its LLaMA models openly available under its own license terms.

- Gemini (Google): Gemini is Google’s family of multimodal models.

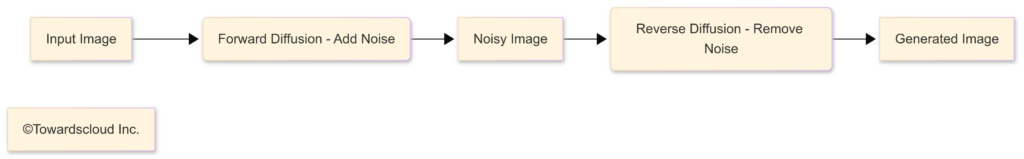

d) Diffusion Models

Diffusion models are a newer class of generative models that have achieved state-of-the-art results in image generation. They work by gradually adding noise to an image until it becomes pure noise, and then learning to reverse this process to generate new images from noise.

Real-life Analogy: Imagine taking a clear photograph and slowly blurring it out until it’s just a random mess of pixels. A diffusion model learns to “unblur” the image, step by step, until it reconstructs a clear and realistic image.
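The "adding noise" half of that story is simple enough to sketch directly. The code below assumes a linear noise schedule with illustrative default values and treats an "image" as a short list of pixel values. The hard part, the learned network that reverses the noise step by step, is not shown here.

```python
import math
import random

def make_schedule(steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative signal fraction alpha_bar[t] for a linear noise schedule."""
    alpha_bars, running = [], 1.0
    for t in range(steps):
        beta = beta_start + (beta_end - beta_start) * t / (steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(pixels, alpha_bar, rng):
    """Forward process: mix the clean pixels with Gaussian noise."""
    keep, noise_scale = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [keep * p + noise_scale * rng.gauss(0.0, 1.0) for p in pixels]

rng = random.Random(0)
image = [0.8, -0.3, 0.5, 0.1]          # a tiny "image" of four pixel values
alpha_bars = make_schedule(steps=1000)

for t in (0, 250, 999):
    noisy = add_noise(image, alpha_bars[t], rng)
    print(t, round(alpha_bars[t], 4), [round(v, 2) for v in noisy])
```

At step 0 almost all of the original image survives. By the last step the signal fraction is close to zero and the result is essentially pure noise, which is the starting point a trained diffusion model works backward from.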

6. Key Concepts and Terms

Here’s a table summarizing some key terms and concepts, with links to reliable sources:

| Term | Definition | Real-life Analogy | Reliable Source |
|---|---|---|---|
| Prompt Engineering | The art of crafting effective input prompts to guide a Generative AI model towards the desired output. | Giving a chef specific instructions (“Make a spicy vegetarian pasta dish with tomatoes and basil”) instead of just saying “Make dinner.” | Prompt Engineering Guide |
| Hallucination | When a Generative AI model produces output that is factually incorrect, nonsensical, or not grounded in the input data. | A chef inventing ingredients that don’t exist or claiming a dish is from a fictional country. | Understanding Hallucinations in LLMs |
| Fine-tuning | Adapting a pre-trained Generative AI model to a specific task or dataset by further training it on a smaller, more focused dataset. | Taking a chef who’s trained in all types of cuisine and having them specialize in Italian food by practicing with Italian recipes. | Fine-tuning LLMs |
| Tokenization | The process of breaking down text into smaller units (tokens) that can be processed by a Generative AI model. Tokens can be words, sub-words, or characters. | Breaking down a recipe into individual ingredients and steps. | Tokenization in NLP |
| Latent Space | A compressed, lower-dimensional representation of data learned by some Generative AI models (like VAEs). It captures the underlying structure and relationships within the data. | Representing a complex LEGO creation with a few key parameters (size, color, shape). | Understanding Latent Space |
| Zero-Shot Learning | The ability of a Generative AI model to perform a task without having been explicitly trained on that task. | A chef being able to make a decent pizza even if they’ve never made one before, based on their general knowledge of cooking and flavors. | Zero-Shot Learning in NLP |
| Few-Shot Learning | The ability of a Generative AI model to perform a task after being given only a few examples. | A chef learning to make a new type of cake after seeing just a couple of examples. | Few-Shot Learning |
| Multimodal AI | AI models that can process and generate content from multiple modalities, such as text and images, or audio and video. | A chef who can understand both written recipes and pictures of the dish. | Multimodal AI |
| Synthetic Data | Artificially generated data that mimics the properties of real data. It can be used to train AI models when real data is scarce, sensitive, or expensive to obtain. | Creating fake customer data to train a marketing model without using real customer information. | Synthetic Data Generation |
| Reinforcement Learning from Human Feedback (RLHF) | A technique for training AI models by using human feedback to guide the learning process. This is often used to align the model’s behavior with human preferences and values. | Teaching a robot to walk by giving it positive feedback when it takes successful steps and negative feedback when it falls. | RLHF |
| Chain-of-Thought Prompting | A prompting technique that improves an LLM’s reasoning by having it generate a series of intermediate reasoning steps before the final answer. | A student showing their work when solving a math problem. | Chain-of-Thought |
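Of the terms above, tokenization is the easiest to try yourself. The sketch below shows the three granularities mentioned in the table: words, characters, and sub-words. The sub-word splitter uses a simple greedy longest-match rule and a tiny hypothetical vocabulary; real tokenizers learn much larger vocabularies from data and use more refined algorithms.

```python
def word_tokens(text):
    """Split on whitespace: one token per word."""
    return text.split()

def char_tokens(text):
    """One token per character."""
    return list(text)

def subword_tokens(word, vocab):
    """Greedy longest-match split of a word into known sub-word pieces."""
    pieces, start = [], 0
    while start < len(word):
        for end in range(len(word), start, -1):
            if word[start:end] in vocab:
                pieces.append(word[start:end])
                start = end
                break
        else:
            # No known piece matches: fall back to a single character.
            pieces.append(word[start])
            start += 1
    return pieces

# Hypothetical vocabulary; real tokenizers learn theirs from large corpora.
vocab = {"un", "believ", "able", "token", "iz", "ation"}

print(word_tokens("Make a spicy pasta"))
print(char_tokens("pasta"))
print(subword_tokens("unbelievable", vocab))
print(subword_tokens("tokenization", vocab))
```

Sub-word tokens are a middle ground: the vocabulary stays manageable, yet the model can still handle words it has never seen by assembling them from familiar pieces.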

7. The Future of Generative AI

Generative AI is a rapidly evolving field, and we can expect to see even more incredible advancements in the coming years. Some potential future directions include:

- More Realistic and Controllable Generation: Models will become even better at generating high-quality, realistic content, and users will have more control over the specific details of the output. Imagine being able to say, “Generate an image of a cat sitting on a windowsill, but make it a calico cat with green eyes, and make the lighting warm and golden,” and getting exactly what you envisioned. This fine-grained control will be a major focus. We’re moving beyond just “generate an image” to “generate a specific image with these precise characteristics.”

- Improved Multimodal Capabilities: We’ll see more models that can seamlessly integrate and generate content across different modalities (text, images, audio, video). Think of a system where you could describe a scene in text (“A bustling marketplace in Marrakech at sunset, with vendors selling spices and textiles, and the sound of traditional music playing”), and the AI would generate not just an image, but also accompanying sound effects and even a short video clip. This seamless blending of modalities will make for richer and more immersive experiences.

- Personalized and Customized Content: Generative AI will be used to create highly personalized content tailored to individual users’ needs and preferences. This goes beyond simple product recommendations. Imagine:

  - Personalized Education: AI tutors that adapt to your learning style and pace, generating custom exercises and explanations.

  - Personalized Entertainment: AI-generated stories, games, and music tailored to your specific tastes.

  - Personalized Medicine: AI-designed drug therapies customized to your individual genetic makeup.

- New Applications in Science and Discovery: Generative AI could be used to design new materials, discover new drugs, and accelerate scientific research. For example:

  - Materials Science: Designing new alloys with specific properties (strength, conductivity, etc.) by having the AI explore vast chemical combinations.

  - Drug Discovery: Generating potential drug candidates that target specific diseases, drastically reducing the time and cost of pharmaceutical research.

  - Climate Modeling: Creating more accurate and detailed climate models to better understand and predict climate change.

- Addressing Ethical Concerns: As Generative AI becomes more powerful, it’s crucial to address the ethical challenges it poses. This includes:

  - Bias: Ensuring that models are trained on diverse and representative datasets to avoid perpetuating harmful stereotypes.

  - Misinformation: Developing techniques to detect and combat AI-generated fake news and propaganda.

  - Copyright and Ownership: Establishing clear guidelines for the ownership and use of AI-generated content.

  - Job Displacement: Preparing for the potential impact of AI on the workforce and developing strategies for retraining and upskilling.

  - Security Risks: Protecting against malicious uses of generative AI, such as deepfakes used for fraud or impersonation.

- Explainable and Interpretable AI: Moving beyond “black box” models, there will be a growing emphasis on understanding how generative AI models arrive at their outputs. This is crucial for building trust and ensuring accountability. Techniques like attention visualization and model probing will become more sophisticated.

- Edge Computing and Generative AI: We’ll see more generative AI models running on edge devices (smartphones, IoT devices) rather than solely in the cloud. This will enable lower latency and improved privacy, since data can stay on the device.

- Human-AI Collaboration: The most exciting future may not be about AI replacing humans, but about humans and AI working together. Generative AI can be a powerful tool for augmenting human creativity and productivity. Imagine:

  - Writers collaborating with AI to brainstorm ideas and overcome writer’s block.

  - Designers using AI to generate variations on their designs and explore new possibilities.

  - Scientists using AI to analyze complex data and generate hypotheses.

  - Programmers using AI to write code faster.

- Quantum Generative AI: The intersection of quantum computing and generative AI is a nascent but potentially transformative area. Quantum computers might one day train and run generative models much faster and more efficiently than classical computers, leading to breakthroughs in areas like drug discovery and materials science. This is a very long-term prospect, but the research is already beginning.

8. The Challenges and Limitations of Generative AI

While the potential of Generative AI is immense, it’s important to be aware of its current limitations and challenges:

- Computational Cost: Training large generative models can be extremely expensive, requiring vast amounts of computing power and energy. This creates a barrier to entry for smaller organizations and researchers.

- Data Dependence: Generative AI models are only as good as the data they are trained on. Biased or incomplete data can lead to biased or inaccurate outputs.

- Lack of Common Sense: While models can generate impressive text and images, they often lack true understanding of the world and can produce outputs that are nonsensical or illogical.

- Difficulty with Abstract Reasoning: Generative AI models can struggle with tasks that require complex reasoning, planning, or abstract thought.

- Control and Predictability: It can be difficult to precisely control the output of generative models, and their behavior can sometimes be unpredictable.

- Ethical Concerns: As mentioned earlier, generative AI raises a number of ethical concerns that need to be carefully addressed.

9. Generative AI and the Cloud

The cloud plays a massive role in the development and deployment of Generative AI. Here’s why:

- Scalable Compute Power: Cloud providers like AWS, Google Cloud, and Microsoft Azure offer the vast computational resources needed to train and run large generative models. This eliminates the need for organizations to invest in and maintain expensive on-premises infrastructure.

- Data Storage and Management: Generative AI models require massive datasets. Cloud platforms provide scalable and cost-effective storage solutions for managing these datasets.

- Pre-trained Models and APIs: Cloud providers offer access to pre-trained generative models and APIs, making it easier for developers to integrate Generative AI capabilities into their applications without having to train models from scratch. This is a huge enabler.

- Machine Learning Platforms: Cloud platforms provide comprehensive machine learning platforms that streamline the entire Generative AI workflow, from data preparation and model training to deployment and monitoring. Examples include:

  - Amazon SageMaker (AWS)

  - Vertex AI (Google Cloud)

  - Azure Machine Learning (Microsoft Azure)

- Collaboration and Sharing: Cloud platforms facilitate collaboration among researchers and developers working on Generative AI projects.

10. Conclusion: Embrace the Generative AI Revolution

Generative AI is not just a futuristic fantasy; it’s a rapidly evolving reality that is already transforming the world around us. From creating art and music to accelerating scientific discovery and revolutionizing industries, its potential is truly staggering.

While there are challenges and ethical considerations to address, the opportunities presented by Generative AI are too significant to ignore. By understanding the key concepts and definitions outlined in this blog post, you’re well-equipped to navigate this exciting new landscape and participate in the ongoing conversation about the future of AI.

Call To Action

Don’t just be a passive observer – become an active participant in the Generative AI revolution! Here’s how:

- Explore Generative AI Tools: Experiment with readily available tools like DALL-E 2 (image generation), ChatGPT (text generation), or other platforms listed by cloud providers. Get hands-on experience and see what’s possible.

- Learn More: Dive deeper into specific areas of Generative AI that interest you. There are countless online courses, tutorials, and research papers available.

- Join the Conversation: Follow thought leaders and researchers in the field on social media, attend webinars and conferences, and engage in discussions about the ethical and societal implications of Generative AI.

- Consider the Possibilities: Think about how Generative AI could be applied to your own work, hobbies, or personal life. What problems could it help you solve? What new creative avenues could it open up?

- Share Your Thoughts: What excites you most about Generative AI? What are your biggest concerns? Leave a comment below and share your perspective! Let’s build a community of informed and engaged individuals who are shaping the future of this powerful technology.

- Start a Project: Apply the concepts you have learned here to an idea of your own that uses Generative AI.