Overview of Generative Models: VAEs, GANs, and More

Introduction

Welcome to another exciting exploration in our cloud and AI series! Today, we’re diving deep into the fascinating world of generative models—a cornerstone of modern artificial intelligence that’s revolutionizing how machines create content.

Imagine if computers could not just analyze data but actually create new, original content that resembles what they’ve learned—from realistic images and music to synthetic text and even 3D models. This isn’t science fiction; it’s the reality of today’s generative AI.

In this comprehensive guide, we’ll explore the inner workings of generative models, focusing particularly on Variational Autoencoders (VAEs), Generative Adversarial Networks (GANs), and other groundbreaking architectures. We’ll break down complex concepts into digestible parts, illustrate them with real-world examples, and help you understand how these technologies are shaping our digital landscape.

🔍 Call to Action: As you read through this guide, try to think of potential applications of generative models in your own field. How might these technologies transform your work or industry? Keep a note of ideas that spark your interest—we’d love to hear them in the comments!

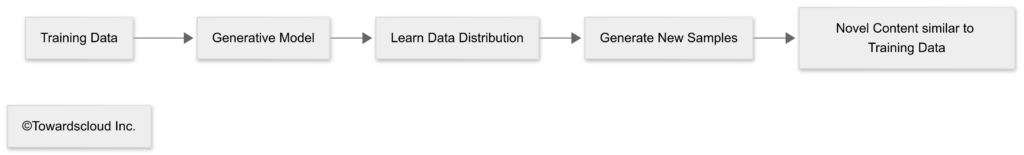

What Are Generative Models?

At their core, generative models are a class of machine learning systems designed to learn the underlying patterns and distributions of input data, then generate new samples that could plausibly belong to that same distribution.
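The two steps in this definition are learning a distribution and then sampling from it. The tiny sketch below shows both. It assumes one-dimensional data and uses a single Gaussian as the model, which is far simpler than any neural generative model. The training values and the function names `fit_gaussian` and `generate` are hypothetical and are here only for illustration.

```python
import math
import random

def fit_gaussian(data):
    """Learn the distribution: maximum-likelihood estimates of mean and std."""
    n = len(data)
    mean = sum(data) / n
    var = sum((x - mean) ** 2 for x in data) / n
    return mean, math.sqrt(var)

def generate(mean, std, k, seed=0):
    """Generate k new samples that plausibly come from the learned distribution."""
    rng = random.Random(seed)
    return [rng.gauss(mean, std) for _ in range(k)]

# Hypothetical training data: adult heights in centimetres
training_data = [160.0, 165.5, 170.2, 172.8, 168.1, 175.4, 162.3, 169.9]
mu, sigma = fit_gaussian(training_data)
new_samples = generate(mu, sigma, k=5)
print(f"learned mean={mu:.2f}, std={sigma:.2f}")
print([round(x, 1) for x in new_samples])
```

Deep generative models follow the same fit-then-sample pattern. The difference is that two Gaussian parameters are replaced by millions of neural-network weights, which lets the model capture much richer distributions such as images or text.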

The Real-World Analogy

Think of generative models like a chef who studies countless recipes of a particular dish. After learning the patterns, ingredients, and techniques, the chef can create new recipes that maintain the essence of the original dish while offering something novel and creative.

For example:

- A generative model trained on thousands of landscape paintings might create new, original landscapes

- One trained on music can compose new melodies in similar styles

- A model trained on written text can generate new stories or articles

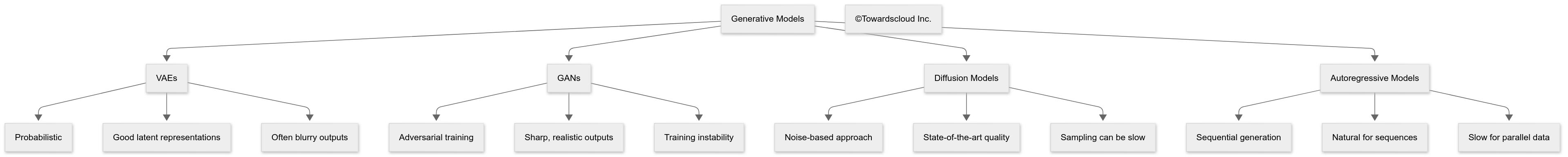

Types of Generative Models

There are several approaches to building generative models, each with unique strengths and applications:

| Model Type | Key Characteristics | Typical Applications |
|---|---|---|
| Variational Autoencoders (VAEs) | Probabilistic, encode data into compressed latent representations | Image generation, anomaly detection, data compression |
| Generative Adversarial Networks (GANs) | Two competing networks (generator vs discriminator) | Photorealistic images, style transfer, data augmentation |
| Diffusion Models | Gradually add and remove noise from data | High-quality image generation, audio synthesis |
| Autoregressive Models | Generate sequences one element at a time | Text generation, time series prediction, music composition |
| Flow-based Models | Sequence of invertible transformations | Efficient exact likelihood estimation, image generation |
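The autoregressive row in this table is the easiest to make concrete. Below is a sketch of a character-level bigram model trained on a hypothetical toy corpus. It counts how often each character follows another, then generates text one character at a time, with each step conditioned on the character produced just before it. Modern autoregressive models use neural networks that condition on much longer contexts, but their generation loop has the same shape. All names here are illustrative.

```python
import random
from collections import Counter, defaultdict

def train_bigram(text):
    """Count how often each character follows each other character."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(text, text[1:]):
        counts[prev][nxt] += 1
    return counts

def sample(counts, start, length, seed=0):
    """Generate one character at a time, each conditioned on the previous one."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        followers = counts.get(out[-1])
        if not followers:  # character never seen mid-text: no known continuation
            break
        chars = list(followers)
        weights = [followers[c] for c in chars]
        out.append(rng.choices(chars, weights=weights, k=1)[0])
    return "".join(out)

# Hypothetical toy corpus
corpus = "the cat sat on the mat. the dog sat on the log."
model = train_bigram(corpus)
generated = sample(model, "t", 30)
print(generated)
```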

🤔 Call to Action: Which of these model types sounds most interesting to you? As we explore each in detail, consider which might be most relevant to problems you’re trying to solve!

Variational Autoencoders (VAEs): Creating Through Compression

Let’s begin with Variational Autoencoders, one of the foundational deep generative model architectures.

How VAEs Work

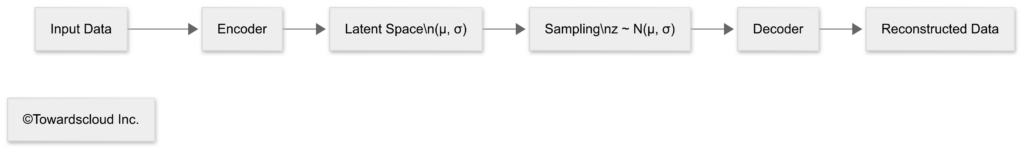

VAEs consist of two primary components:

- Encoder: Compresses input data into a lower-dimensional latent space

- Decoder: Reconstructs data from the latent space back to the original format

What makes VAEs special is that they don’t just compress data to a fixed point in latent space—they encode data as a probability distribution (usually Gaussian). This enables:

- Smoother transitions between points in latent space

- Better generalization to new examples

- The ability to generate new samples by sampling from the latent space
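In practice, the encoder usually outputs a mean and a log-variance for each latent dimension. A latent vector is then drawn using the so-called reparameterization trick. The sketch below assumes a diagonal Gaussian and uses plain Python lists instead of tensors. The encoder outputs are made-up numbers, `sample_latent` and `sample_prior` are hypothetical helper names, and the decoder is omitted.

```python
import math
import random

def sample_latent(mu, log_var, rng):
    """Draw z ~ N(mu, diag(exp(log_var))) via the reparameterization trick."""
    # z = mu + sigma * eps with eps ~ N(0, 1); the randomness lives only in eps,
    # so a framework can backpropagate through mu and log_var during training.
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]

def sample_prior(dim, rng):
    """Generation after training: sample z from the standard normal prior."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]

rng = random.Random(42)
# Hypothetical encoder output for one input (2-D latent space)
mu, log_var = [0.5, -1.0], [-2.0, -2.0]
z_encoded = sample_latent(mu, log_var, rng)  # near mu, with small spread
z_new = sample_prior(2, rng)                 # would be passed to a trained decoder
```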

The Math Behind VAEs (Simplified)

VAEs optimize two components simultaneously:

- Reconstruction loss: How well the decoder can reconstruct the original input

- KL divergence: Penalizes the gap between each input’s encoded distribution and a prior (usually a standard normal), keeping the latent space smooth and easy to sample from

This dual optimization allows VAEs to create a meaningful, continuous latent space that captures the essential features of the training data.
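Assume a diagonal Gaussian encoder and a standard normal prior, which are common choices. Under those assumptions the KL term has a closed form, and the training objective (the negative evidence lower bound, or ELBO) can be sketched as below. Squared error stands in for the reconstruction term here. That is one common choice among several, and binary cross-entropy is another. The `beta` weight is optional: `beta=1` gives the standard VAE objective.

```python
import math

def kl_to_standard_normal(mu, log_var):
    """Closed-form KL( N(mu, diag(exp(log_var))) || N(0, I) )."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))

def reconstruction_loss(x, x_hat):
    """Sum of squared errors between the input and the decoder's reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))

def vae_loss(x, x_hat, mu, log_var, beta=1.0):
    """Negative ELBO (up to scaling and constants): reconstruction + beta * KL."""
    return reconstruction_loss(x, x_hat) + beta * kl_to_standard_normal(mu, log_var)

# Hypothetical values for a single 2-pixel "image" and a 2-D latent code
loss = vae_loss(x=[0.2, 0.8], x_hat=[0.25, 0.7], mu=[0.1, -0.3], log_var=[-0.5, 0.2])
print(round(loss, 4))
```

The reconstruction term pulls encodings apart so that each input can be rebuilt. The KL term pulls them toward the prior. The balance between the two is what produces the continuous latent space described above.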

Real-World Example: Face Generation

Imagine a VAE trained on thousands of human faces. The encoder learns to compress each face into a small set of values in latent space, capturing features like facial structure, expression, and lighting. The decoder learns to reconstruct faces from these compressed representations.

Once trained, we can:

- Generate entirely new faces by sampling random points in latent space

- Interpolate between faces by moving from one point to another in latent space

- Modify specific attributes by learning which directions in latent space correspond to features like “smiling” or “adding glasses”
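The latent-space operations listed above come down to simple vector arithmetic. The sketch below assumes latent codes are plain lists of floats and leaves out the trained decoder, which is hypothetical here. Each resulting vector would be passed to that decoder to produce an image. Estimating an attribute direction as the difference between the mean codes of faces with and without the attribute is one simple technique, not the only one.

```python
def lerp(z_a, z_b, t):
    """Linear interpolation: t=0 returns z_a, t=1 returns z_b."""
    return [(1 - t) * a + t * b for a, b in zip(z_a, z_b)]

def interpolation_path(z_a, z_b, steps):
    """Evenly spaced latent points from z_a to z_b; decoding each gives a smooth morph."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    return [lerp(z_a, z_b, i / (steps - 1)) for i in range(steps)]

def mean_vector(vectors):
    """Element-wise mean of a list of latent vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def attribute_direction(with_attr, without_attr):
    """Estimate an attribute direction (e.g. "smiling") as a difference of mean codes."""
    return [a - b for a, b in zip(mean_vector(with_attr), mean_vector(without_attr))]

def apply_attribute(z, direction, strength=1.0):
    """Move a latent code along an attribute direction; strength sets how far."""
    return [zi + strength * di for zi, di in zip(z, direction)]

# Hypothetical 3-D latent codes (a real face VAE would use many more dimensions)
z_face_a, z_face_b = [0.2, -1.0, 0.5], [1.4, 0.3, -0.2]
frames = interpolation_path(z_face_a, z_face_b, steps=5)
smiling_codes = [[0.9, 0.1, 0.0], [1.1, 0.3, 0.2]]
neutral_codes = [[0.1, 0.0, 0.1], [0.3, 0.2, 0.1]]
smile = attribute_direction(smiling_codes, neutral_codes)
z_face_a_smiling = apply_attribute(z_face_a, smile)
```

Linear interpolation is the simplest choice. With Gaussian latent spaces, spherical interpolation (slerp) is sometimes preferred because it keeps intermediate points at a typical distance from the origin.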

💡 Call to Action: Think of an application where encoding complex data into a simpler representation would be valuable. How might a VAE help solve this problem? Share your thoughts in the comments section!

Generative Adversarial Networks (GANs): Learning Through Competition

While VAEs focus on encoding and reconstruction, GANs take a fundamentally different approach based on competition between two neural networks.

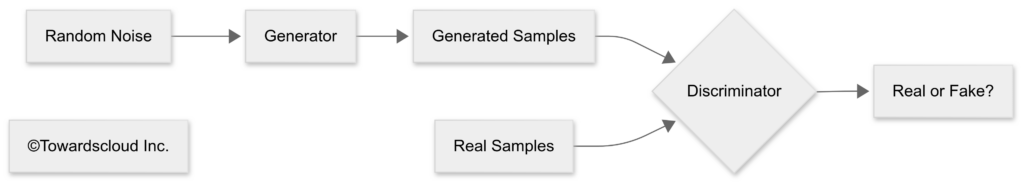

The Two Players in the GAN Game

GANs consist of two neural networks locked in a minimax game:

- Generator: Creates samples (like images) from random noise

- Discriminator: Tries to distinguish real samples from generated ones

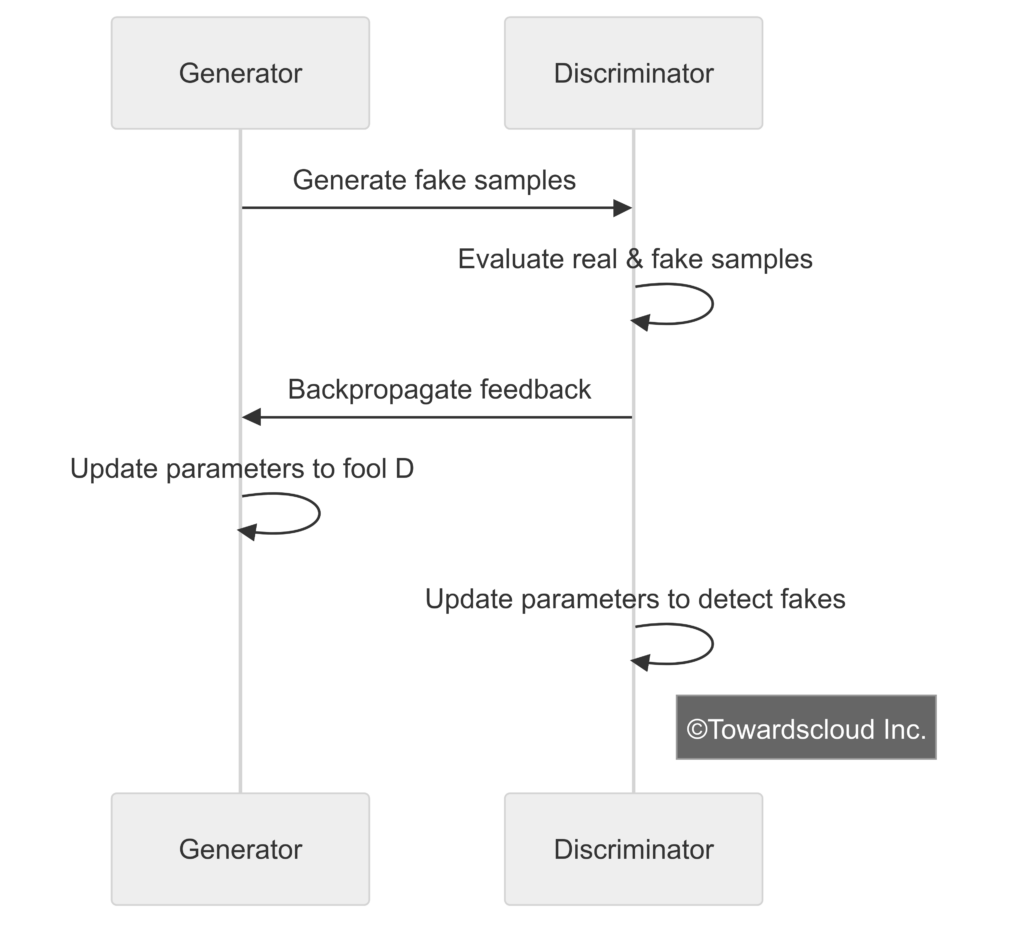

As training progresses:

- The generator gets better at creating realistic samples

- The discriminator gets better at spotting fakes

- Ideally, the generator eventually creates samples so realistic that the discriminator can do no better than chance at telling them apart

The Competitive Learning Process
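The competition can be written as the minimax objective from the original GAN formulation: min over G, max over D of E[log D(x)] + E[log(1 - D(G(z)))]. The sketch below computes the two losses that training alternates between, given the discriminator's output probabilities on a batch. It uses the non-saturating generator loss, which maximizes log D(G(z)). The original GAN paper suggested this variant because it gives stronger gradients early in training. The networks and optimizer steps are omitted, and the probabilities are made-up numbers.

```python
import math

EPS = 1e-12  # keeps log() away from 0 when the discriminator is overconfident

def _clip(p):
    return min(max(p, EPS), 1.0 - EPS)

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: D should output ~1 on real samples and ~0 on fakes."""
    real_term = -sum(math.log(_clip(p)) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - _clip(p)) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating loss: G is rewarded when D calls its fakes 'real'."""
    return -sum(math.log(_clip(p)) for p in d_fake) / len(d_fake)

# Early in training: D easily separates real (high scores) from fake (low scores)
print(discriminator_loss([0.95, 0.9], [0.05, 0.1]), generator_loss([0.05, 0.1]))
# At the ideal equilibrium D outputs 0.5 everywhere, so its loss is 2 * ln(2)
print(discriminator_loss([0.5, 0.5], [0.5, 0.5]))
```

Training alternates between the two. In one step, the discriminator's weights are updated to lower `discriminator_loss`. In the next, the generator's weights are updated to lower `generator_loss`.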

Real-World Example: Art Generation

Consider a GAN trained on thousands of oil paintings from the Renaissance period:

- The generator initially creates random, noisy images

- The discriminator learns to identify authentic Renaissance paintings from the generator’s creations

- Over time, the generator learns to produce increasingly convincing Renaissance-style paintings

- Eventually, the generator can create new, original artwork that captures the style, color palette, and composition typical of Renaissance paintings

Challenges in GAN Training

GAN training faces several notable challenges:

| Challenge | Description | Common Solutions |
|---|---|---|
| Mode Collapse | Generator produces limited varieties of samples | Modified loss functions, minibatch discrimination |
| Training Instability | Oscillations, failure to converge | Gradient penalties, spectral normalization |
| Evaluation Difficulty | Hard to quantitatively assess quality | Inception Score, FID, human evaluation |
| Disentanglement | Hard to control specific features of the output | Conditional GANs, InfoGAN |
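FID (Fréchet Inception Distance) compares features extracted from real and generated images by a pretrained Inception network. It fits a Gaussian to each set of features and measures the Fréchet distance between the two Gaussians, so lower is better. The real metric works on high-dimensional feature vectors and needs a matrix square root. The sketch below is a one-dimensional simplification that assumes each sample has already been reduced to a single hypothetical feature value. It is meant only to show the structure of the formula.

```python
import math

def mean_std(values):
    """Population mean and standard deviation of a list of feature values."""
    n = len(values)
    mu = sum(values) / n
    return mu, math.sqrt(sum((v - mu) ** 2 for v in values) / n)

def frechet_distance_1d(real_feats, fake_feats):
    """Fréchet distance between 1-D Gaussians fitted to two feature sets."""
    mu_r, s_r = mean_std(real_feats)
    mu_f, s_f = mean_std(fake_feats)
    # General form: |mu_r - mu_f|^2 + Tr(S_r + S_f - 2 (S_r S_f)^(1/2));
    # in one dimension the trace term reduces to (s_r - s_f)^2.
    return (mu_r - mu_f) ** 2 + (s_r - s_f) ** 2

# Hypothetical 1-D features
real = [0.9, 1.1, 1.0, 1.2, 0.8]
good_fakes = [0.95, 1.05, 1.0, 1.15, 0.85]
collapsed_fakes = [1.0, 1.0, 1.0, 1.0, 1.0]  # no variety, as in mode collapse
print(frechet_distance_1d(real, good_fakes), frechet_distance_1d(real, collapsed_fakes))
```

In this example the collapsed samples have the right mean, but they still score worse because the spread term penalizes their lack of variety.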

Notable GAN Variants

Several specialized GAN architectures have emerged for specific tasks:

- StyleGAN: Creates high-resolution images with control over style at different scales

- CycleGAN: Performs unpaired image-to-image translation (e.g., horses to zebras)

- StackGAN: Generates images from textual descriptions in multiple stages

- BigGAN: Scales to high-resolution, diverse image generation

🔧 Call to Action: GANs excel at creating realistic media. Can you think of an industry problem where generating synthetic but realistic data would be valuable? Consider areas like healthcare, product design, or entertainment!

Diffusion Models: The New Frontier

More recently, diffusion models have emerged as a powerful alternative to VAEs and GANs, achieving state-of-the-art results in image and audio generation.

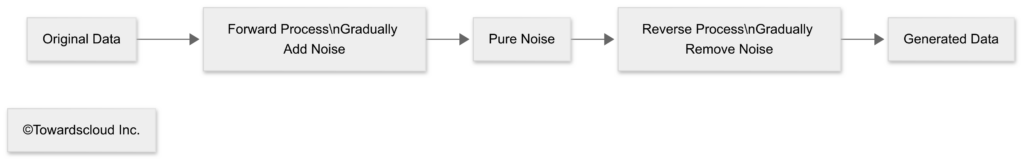

How Diffusion Models Work

Diffusion models operate on a unique principle:

- Forward process: Gradually add random noise to training data until it becomes pure noise

- Reverse process: Learn to gradually remove noise, starting from random noise, to generate data

The model essentially learns how to denoise data, which implicitly teaches it the underlying data distribution.
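The forward process has a convenient closed form. Let beta_t be the noise added at step t, and let alpha_bar_t be the running product of (1 - beta_t). Then a noisy version of a clean sample x0 at any step t is sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps, where eps is standard Gaussian noise. The sketch below follows the DDPM formulation, with its linear schedule from 1e-4 to 0.02 over 1,000 steps. Treat these values as one common configuration, not the only one. The data are a few hypothetical pixel values, and the denoising network is omitted.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Noise variance added at each step (linear schedule, as in DDPM)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar_t = product of (1 - beta_s) for s <= t: how much signal survives."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out

def noise_sample(x0, t, alpha_bars, rng):
    """Jump straight to step t: x_t = sqrt(a_t) * x0 + sqrt(1 - a_t) * eps."""
    a = alpha_bars[t]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    x_t = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    return x_t, eps  # a denoising network is trained to predict eps from (x_t, t)

betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)
rng = random.Random(0)
x0 = [0.9, -0.4, 0.1]  # hypothetical normalized "pixels"
x_early, _ = noise_sample(x0, 10, alpha_bars, rng)   # still mostly signal
x_late, _ = noise_sample(x0, 999, alpha_bars, rng)   # almost pure noise
```

Training repeats three steps. It picks a random step t, noises a real sample this way, and teaches the network to predict `eps` from `x_t` and `t`, typically with a mean-squared-error loss. Generation then runs the learned denoising in reverse, from pure noise back to data.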

Real-World Example: Text-to-Image Generation

Stable Diffusion and later versions of DALL-E (DALL-E 2 onward) are prominent examples of diffusion models that can generate images from text descriptions:

- The user provides a text prompt like “a cat sitting on a windowsill at sunset”

- The model starts with random noise

- Step by step, the model removes noise while being guided by the text prompt

- Eventually, a clear image emerges that matches the description

These models can generate remarkably detailed and creative images that follow complex instructions, often blending concepts in novel ways.
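Classifier-free guidance is one widely used way to steer each denoising step toward the prompt, and open models such as Stable Diffusion use it. The network predicts the noise twice, once with the text prompt and once without. The two estimates are then combined using a guidance scale. The sketch below shows only that combination, plus the standard step that turns a noise estimate into the model's current guess of the clean image. The noise estimates are made-up numbers standing in for a real text-conditioned network, and the guidance scale of 7.5 is a hypothetical setting.

```python
import math

def guided_noise(eps_uncond, eps_cond, guidance_scale):
    """Classifier-free guidance: extrapolate from the unconditional estimate toward the prompt.

    guidance_scale = 0 ignores the prompt, 1 uses the conditional estimate as-is,
    and values above 1 follow the prompt more strongly (often at some cost to diversity).
    """
    return [u + guidance_scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]

def predict_clean(x_t, eps, alpha_bar_t):
    """Invert x_t = sqrt(a) * x0 + sqrt(1 - a) * eps to estimate the clean sample x0."""
    return [(x - math.sqrt(1.0 - alpha_bar_t) * e) / math.sqrt(alpha_bar_t)
            for x, e in zip(x_t, eps)]

# Hypothetical noise estimates for a 3-value "image" at one denoising step
eps_without_prompt = [0.10, -0.20, 0.05]
eps_with_prompt = [0.30, -0.10, 0.00]
eps_hat = guided_noise(eps_without_prompt, eps_with_prompt, guidance_scale=7.5)
x0_guess = predict_clean([0.5, -0.3, 0.2], eps_hat, alpha_bar_t=0.5)
```

A full sampler repeats this over many steps. At each step it uses the guided estimate to move from the current noisy image to a slightly less noisy one.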

Comparison of Generative Model Approaches

Let’s compare the key generative model architectures:

| Model Type | Strengths | Weaknesses | Best Use Cases |
|---|---|---|---|
| VAEs | Stable training; well-structured latent space; likelihood lower bound (ELBO) | Often blurry outputs; struggle with highly complex distributions | Medical imaging, anomaly detection, data compression |
| GANs | Sharp, realistic outputs; flexible architecture | Mode collapse; training instability; no explicit likelihood | Photorealistic images, style transfer, data augmentation |
| Diffusion | State-of-the-art quality; stable training; flexible conditioning | Slow sampling (improving); computationally intensive | High-quality image generation, text-to-image, inpainting |
| Autoregressive | Natural for sequential data; tractable likelihood | Slow, step-by-step generation; no explicit latent space | Text generation, music, language models |

📊 Call to Action: Based on this comparison, which model type seems most suitable for your specific use case? Consider the trade-offs between quality, speed, and stability for your particular application!

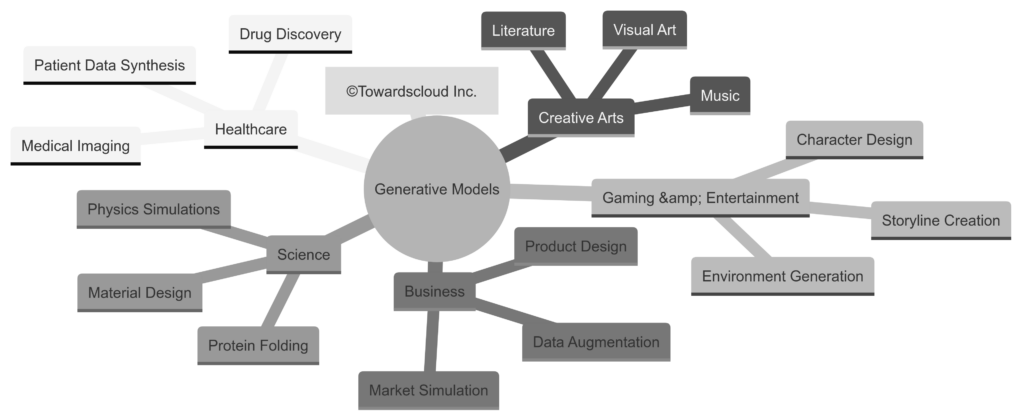

Real-World Applications

Generative models have found applications across numerous industries:

Healthcare

- Medical Image Synthesis: Generating synthetic X-rays, MRIs, and CT scans for training algorithms with limited data

- Drug Discovery: Designing new molecular structures with specific properties

- Anomaly Detection: Identifying unusual patterns in medical scans that might indicate disease

Creative Industries

- Art Generation: Creating new artwork in specific styles or based on text descriptions

- Music Composition: Generating original melodies, harmonies, and even full compositions

- Content Creation: Assisting writers with story ideas, dialogue, and plot development

Business and Finance

- Data Augmentation: Expanding limited datasets for better model training

- Synthetic Data Generation: Creating realistic but privacy-preserving datasets

- Fraud Detection: Learning normal patterns to identify unusual activities
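The fraud-detection idea is to learn what normal looks like, then flag what the model finds unlikely. The sketch below shows this with the simplest possible density model. It uses two hypothetical transaction features, treats them as independent Gaussians, and sets the threshold at the worst score seen in normal training data. Real systems would use richer generative models, such as a VAE scored by reconstruction error, and would tune the threshold on validation data.

```python
import math

def fit_diagonal_gaussian(rows):
    """Learn per-feature mean and variance from normal (non-fraudulent) records."""
    n = len(rows)
    means = [sum(col) / n for col in zip(*rows)]
    variances = [sum((v - m) ** 2 for v in col) / n + 1e-9  # small floor avoids /0
                 for col, m in zip(zip(*rows), means)]
    return means, variances

def neg_log_likelihood(row, means, variances):
    """Higher score means less likely under the learned 'normal' distribution."""
    return sum(0.5 * math.log(2 * math.pi * var) + (x - m) ** 2 / (2 * var)
               for x, m, var in zip(row, means, variances))

# Hypothetical normal transactions: (amount in dollars, hour of day)
normal = [(25.0, 12), (40.0, 13), (32.5, 18), (28.0, 11), (35.0, 15), (30.0, 14)]
means, variances = fit_diagonal_gaussian(normal)
threshold = max(neg_log_likelihood(r, means, variances) for r in normal)

suspicious = (900.0, 3)  # large amount at 3 a.m.
is_anomaly = neg_log_likelihood(suspicious, means, variances) > threshold
print(is_anomaly)
```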

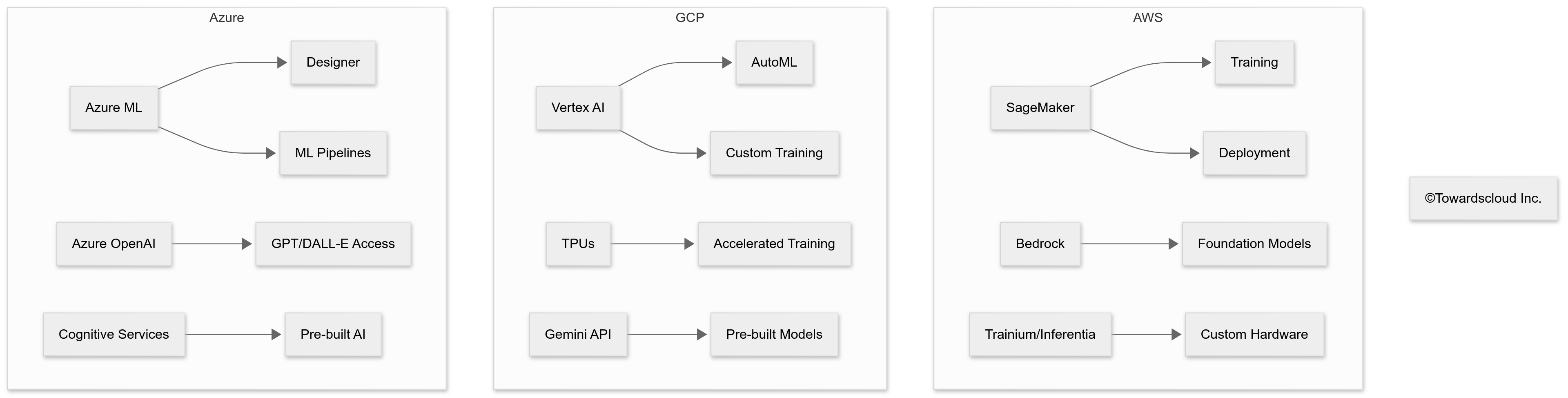

Cloud Implementation of Generative Models

Implementing generative models in cloud environments offers significant advantages in terms of scalability, resource management, and accessibility. Let’s examine how AWS, GCP, and Azure support generative model deployment:

AWS Implementation

AWS offers several services for deploying generative models:

- Amazon SageMaker: Provides managed infrastructure for training and deploying generative models with built-in support for popular frameworks

- AWS Deep Learning AMIs: Pre-configured Amazon Machine Images for EC2 with deep learning frameworks installed

- Amazon Bedrock: A fully managed service that makes foundation models available via API

- AWS Trainium/Inferentia: Custom chips optimized for AI training and inference

GCP Implementation

Google Cloud Platform provides:

- Vertex AI: End-to-end platform for building and deploying ML models, including generative models

- TPU (Tensor Processing Units): Specialized hardware that accelerates deep learning workloads

- AI Platform (legacy): Google’s earlier managed service for model training and serving, now superseded by Vertex AI

- Gemini API: Access to Google’s advanced multimodal models

Azure Implementation

Microsoft Azure offers:

- Azure Machine Learning: Comprehensive service for building and deploying models

- Azure OpenAI Service: Provides access to advanced models like GPT and DALL-E

- Azure Cognitive Services (now branded Azure AI services): Pre-built AI capabilities that can be integrated with custom generative models

- Azure ML Compute: Scalable compute targets optimized for machine learning

Cloud Platform Comparison

| Feature | AWS | GCP | Azure |
|---|---|---|---|
| Model Training | SageMaker, EC2 | Vertex AI, Cloud TPU | Azure ML, AKS |
| Pre-built Models | Bedrock, Textract | Vertex AI, Gemini | Azure OpenAI, Cognitive Services |
| AI Accelerators | Trainium, Inferentia (custom chips) | TPU (custom chips) | GPU VMs (e.g., NDv4 series) |
| Serverless Inference | SageMaker Serverless | Vertex AI Predictions | Azure Container Instances |
| Development Tools | SageMaker Studio | Colab Enterprise, Vertex AI Workbench | Azure ML Studio |

☁️ Call to Action: Which cloud provider’s approach to generative AI aligns best with your organization’s existing infrastructure and needs? Consider factors like integration capabilities, cost structure, and available AI services when making your decision!

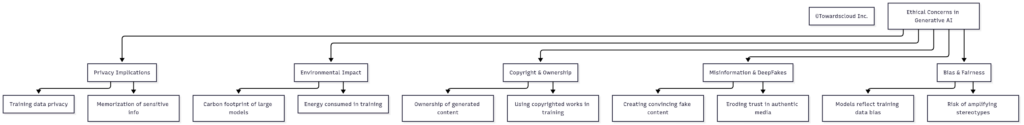

Ethical Considerations and Challenges

The power of generative models brings significant ethical considerations:

| Concern | Description | Potential Solutions |
|---|---|---|
| Bias & Fairness | Generative models can perpetuate or amplify biases present in training data | Diverse training data, bias detection tools, fairness metrics |
| Misinformation | Realistic fake content can be used to spread misinformation | Content provenance techniques, watermarking, detection tools |
| Privacy | Models may memorize and expose sensitive training data | Differential privacy, federated learning, careful data curation |
| Copyright | Questions around ownership of AI-generated content | Clear usage policies, attribution mechanisms, licensing frameworks |
| Environmental Impact | Large model training consumes significant energy | More efficient architectures, carbon-aware training, model distillation |

🔎 Call to Action: Consider the ethical implications of implementing generative AI in your context. What safeguards could you put in place to ensure responsible use? Share your thoughts on balancing innovation with ethical considerations!

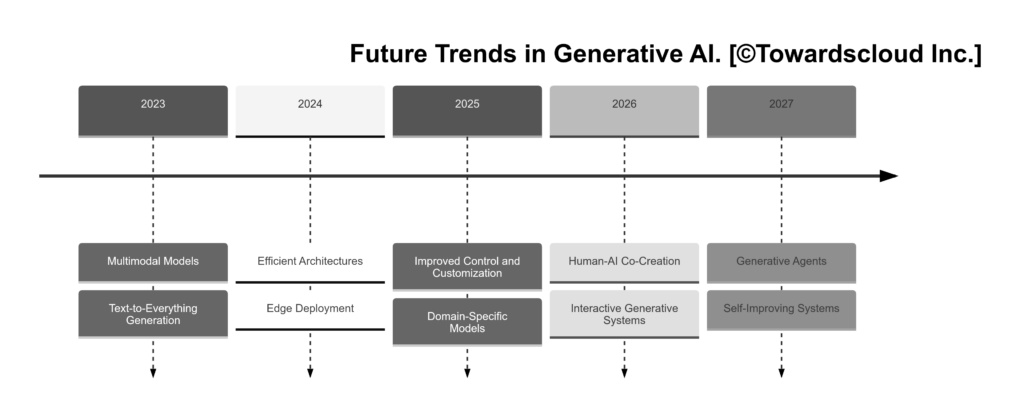

The Future of Generative Models

The field of generative models continues to evolve rapidly:

Key Trends to Watch

- Multimodal Generation: Models that work across text, images, audio, and video simultaneously

- Human-AI Collaboration: Tools designed specifically for co-creation between humans and AI

- Efficient Architectures: More compact models that can run on edge devices

- Controllable Generation: Finer-grained control over generated outputs

- Domain Specialization: Models fine-tuned for specific industries and applications

Getting Started with Generative Models

Ready to experiment with generative models yourself? Here are some resources to get started:

Learning Resources

- TensorFlow Generative Models – Tutorials on various generative techniques

- PyTorch Tutorials – Implementation guides for GANs and other models

- Hugging Face Diffusers – Library for state-of-the-art diffusion models

- Papers With Code – Latest research with implementations

Cloud-Based Starting Points

- AWS: SageMaker Generative AI Example Notebooks

- GCP: Vertex AI Generative AI Examples

- Azure: Azure OpenAI Service Quickstarts

🚀 Call to Action: Start with a small project to build your understanding. Perhaps try implementing a simple VAE for image generation or experiment with a pre-trained diffusion model. Share your progress and questions in the comments!

Conclusion

Generative models represent one of the most exciting frontiers in artificial intelligence, enabling machines to create content that was once the exclusive domain of human creativity. From VAEs to GANs to diffusion models, we’ve explored the key architectures driving this revolution.

As these technologies continue to evolve and become more accessible through cloud platforms like AWS, GCP, and Azure, the potential applications will only expand. Whether you’re interested in creative applications, business solutions, or scientific research, understanding generative models provides valuable tools for innovation.

Remember that with great power comes great responsibility—as you implement these technologies, consider the ethical implications and work to ensure responsible, beneficial applications that enhance rather than replace human creativity.

💬 Call to Action: What aspect of generative models most interests you? Are you planning to implement any of these technologies in your work? We’d love to hear about your experiences and questions in the comments below!

Stay tuned for our next detailed exploration in the cloud and AI series, where we’ll dive into practical implementations of these generative models on specific cloud platforms.